Jose Everardo Bessa Maia

Visual Odometry for RGB-D Cameras

Mar 28, 2022

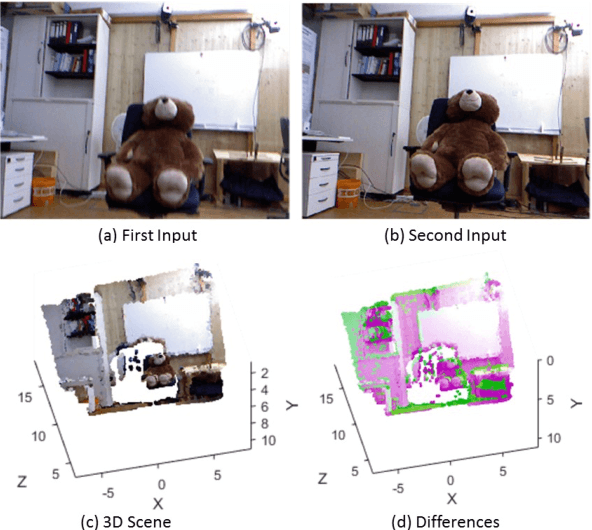

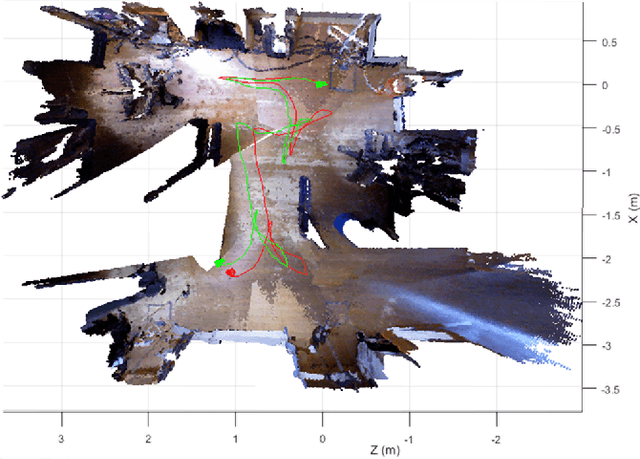

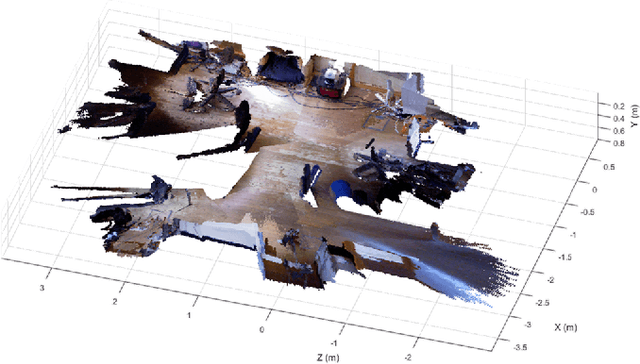

Abstract: Visual odometry is the process of estimating the position and orientation of a camera by analyzing the images it captures. This paper develops a fast and accurate approach to visual odometry for a moving RGB-D camera navigating in a static environment. The proposed algorithm uses SURF (Speeded Up Robust Features) as the feature extractor, RANSAC (Random Sample Consensus) to filter the resulting matches, and minimum mean square error estimation to compute the six-parameter rigid transformation between successive video frames. Data from a Kinect camera were used in the tests. The results show that the approach is feasible and promising: in tests on a publicly available dataset it outperforms the ICP (Iterative Closest Point) and SfM (Structure from Motion) algorithms.
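
The filtering and estimation stages described in the abstract can be illustrated with a minimal sketch. It is not the paper's implementation. It assumes that feature matching (SURF in the paper) has already produced two arrays of corresponding 3D points, `src` and `dst`, obtained by back-projecting matched keypoints with the depth image. The function names, the 3-point minimal sample, the iteration count, and the inlier tolerance are illustrative assumptions. The least-squares step uses the standard SVD-based (Kabsch) solution for a rigid transformation, which may differ in detail from the estimator used by the author.

```python
import numpy as np


def estimate_rigid_transform(src, dst):
    """Least-squares R, t such that dst ~= R @ src + t (SVD/Kabsch solution).

    src, dst: (N, 3) arrays of corresponding 3D points, N >= 3.
    """
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    # Cross-covariance of the centered point sets.
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Force det(R) = +1 so the result is a rotation, not a reflection.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t


def ransac_rigid_transform(src, dst, n_iters=200, inlier_tol=0.02, seed=0):
    """RANSAC over 3-point samples, then a least-squares refit on the inliers.

    inlier_tol is in the same units as the points (assumed meters here).
    """
    rng = np.random.default_rng(seed)
    best_inliers = np.zeros(len(src), dtype=bool)
    for _ in range(n_iters):
        idx = rng.choice(len(src), size=3, replace=False)
        R, t = estimate_rigid_transform(src[idx], dst[idx])
        residuals = np.linalg.norm(src @ R.T + t - dst, axis=1)
        inliers = residuals < inlier_tol
        if inliers.sum() > best_inliers.sum():
            best_inliers = inliers
    if best_inliers.sum() < 3:
        raise ValueError("RANSAC found fewer than 3 inliers")
    # Final estimate uses every inlier, not just the minimal sample.
    R, t = estimate_rigid_transform(src[best_inliers], dst[best_inliers])
    return R, t, best_inliers
```

The returned `R` (3 rotational degrees of freedom) and `t` (3 translational) together make up the six-parameter frame-to-frame motion. Chaining these estimates across successive frames would give the camera trajectory.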
