José Pinto

Sonar-based Deep Learning in Underwater Robotics: Overview, Robustness and Challenges

Dec 16, 2024

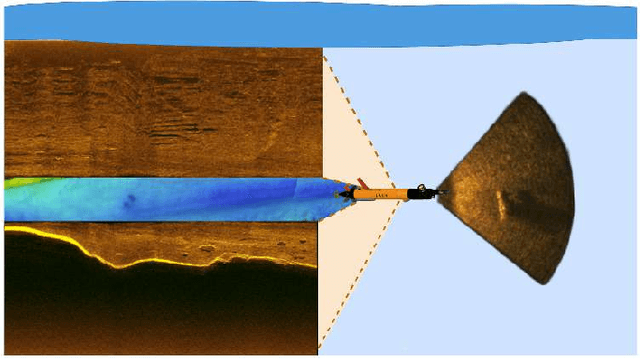

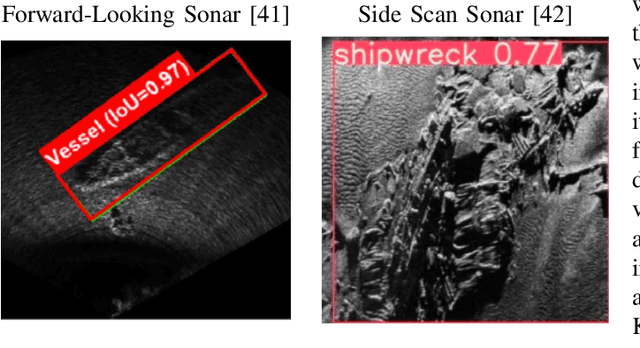

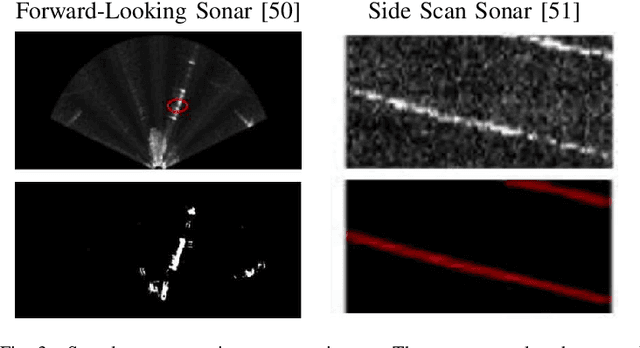

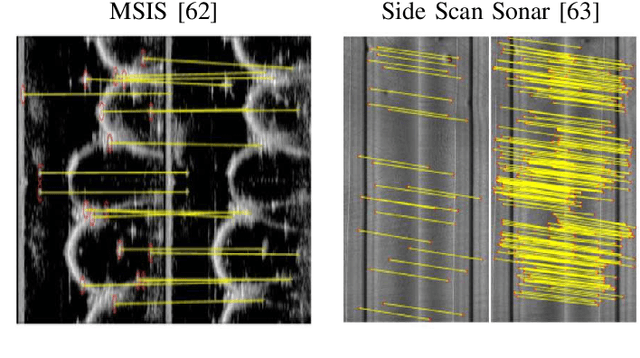

Abstract: With the growing interest in underwater exploration and monitoring, Autonomous Underwater Vehicles (AUVs) have become essential. The recent interest in onboard Deep Learning (DL) has advanced real-time environmental interaction capabilities relying on efficient and accurate vision-based DL models. However, the predominant use of sonar in underwater environments, characterized by limited training data and inherent noise, poses challenges to model robustness. This autonomy improvement raises safety concerns for deploying such models during underwater operations, potentially leading to hazardous situations. This paper aims to provide the first comprehensive overview of sonar-based DL under the scope of robustness. It studies sonar-based DL perception task models, such as classification, object detection, segmentation, and SLAM. Furthermore, the paper systematizes sonar-based state-of-the-art datasets, simulators, and robustness methods such as neural network verification, out-of-distribution, and adversarial attacks. This paper highlights the lack of robustness in sonar-based DL research and suggests future research pathways, notably establishing a baseline sonar-based dataset and bridging the simulation-to-reality gap.

Dolphin: a task orchestration language for autonomous vehicle networks

Jul 26, 2018

Abstract: We present Dolphin, an extensible programming language for autonomous vehicle networks. A Dolphin program expresses an orchestrated execution of tasks defined compositionally for multiple vehicles. Building upon the base case of elementary one-vehicle tasks, the built-in operators include support for composing tasks in several forms, for instance according to concurrent, sequential, or event-based task flow. The language is implemented as a Groovy DSL, facilitating extension and integration with external software packages, in particular robotic toolkits. The paper describes the Dolphin language, its integration with an open-source toolchain for autonomous vehicles, and results from field tests using unmanned underwater vehicles (UUVs) and unmanned aerial vehicles (UAVs).
