Jorge Amaya

Identification of high order closure terms from fully kinetic simulations using machine learning

Oct 19, 2021

Abstract: Simulations of large-scale plasma systems are typically based on fluid approximations. However, these methods do not capture the small-scale physical processes available to fully kinetic models. Traditionally, empirical closure terms are used to express high-order moments of the Boltzmann equation, e.g. the pressure tensor and heat flux. In this paper, we propose as an alternative different closure terms extracted using machine learning techniques. We show how two different machine learning models, a multi-layer perceptron and a gradient boosting regressor, can synthesize higher-order moments extracted from a fully kinetic simulation. The accuracy of the models and their ability to generalize are evaluated and compared to a baseline model. When trained on more extreme simulations, the models extrapolated better than when trained on traditional simulations, indicating the importance of outliers. We find that both models capture the heat flux and pressure tensor very well, with the gradient boosting regressor being the more stable of the two in terms of accuracy. The performance of the tested models in the regression task opens the way for new experiments in multi-scale modelling.
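The moments that such models learn to reproduce can be computed directly from particle data. The sketch below is a minimal illustration, not the paper's own processing pipeline. It assumes a single cell of weighted macro-particles of one species with mass `mass`, and weights that already include the normalization by cell volume. The function name `fluid_moments` is hypothetical. With $\mathbf{c} = \mathbf{v} - \mathbf{u}$ the velocity relative to the bulk flow, it returns the density, the bulk velocity $\mathbf{u}$, the pressure tensor $P_{ij} = m\sum_p w_p c_i c_j$ and the contracted heat-flux vector $q_i = \tfrac{m}{2}\sum_p w_p c_i |\mathbf{c}|^2$.

```python
import numpy as np


def fluid_moments(velocities, weights, mass=1.0):
    """Density, bulk velocity, pressure tensor and heat flux of weighted particles.

    Hypothetical helper: assumes `weights` already include the 1/cell-volume
    normalization and that all particles belong to one species of mass `mass`.
    """
    v = np.asarray(velocities, dtype=float)  # shape (N, 3)
    w = np.asarray(weights, dtype=float)     # shape (N,)
    n = w.sum()                              # zeroth moment: number density
    u = (w[:, None] * v).sum(axis=0) / n     # first moment: bulk velocity
    c = v - u                                # velocities relative to the bulk flow
    # Second moment: P_ij = m * sum_p w_p c_i c_j
    pressure = mass * np.einsum("p,pi,pj->ij", w, c, c)
    # Contracted third moment: q_i = (m / 2) * sum_p w_p c_i |c|^2
    heat_flux = 0.5 * mass * np.einsum("p,pi,p->i", w, c, (c ** 2).sum(axis=1))
    return n, u, pressure, heat_flux
```

For six unit-weight particles moving with unit speed along ±x, ±y and ±z, the pressure tensor is isotropic (twice the identity for unit mass) and the heat flux vanishes. A skewed distribution, such as a heavy population at rest plus a lighter fast one, produces a nonzero heat flux along the skew direction.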

Unsupervised classification of simulated magnetospheric regions

Sep 10, 2021

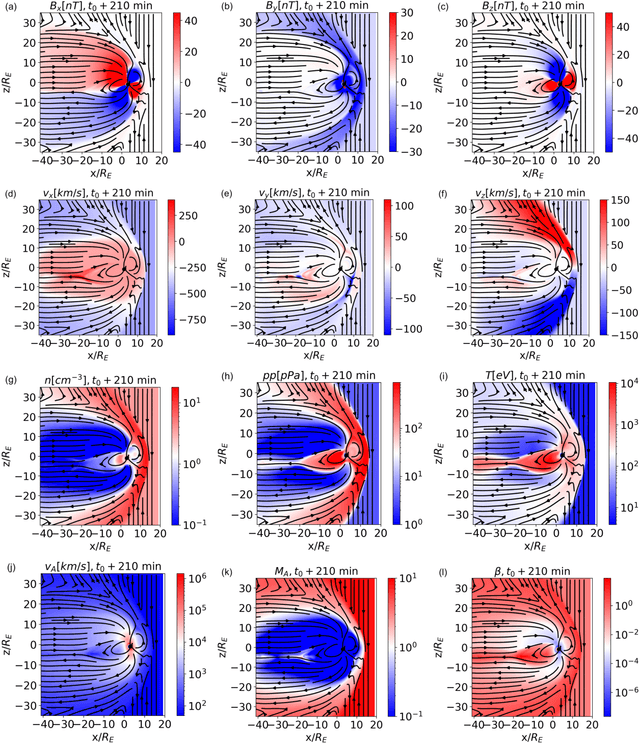

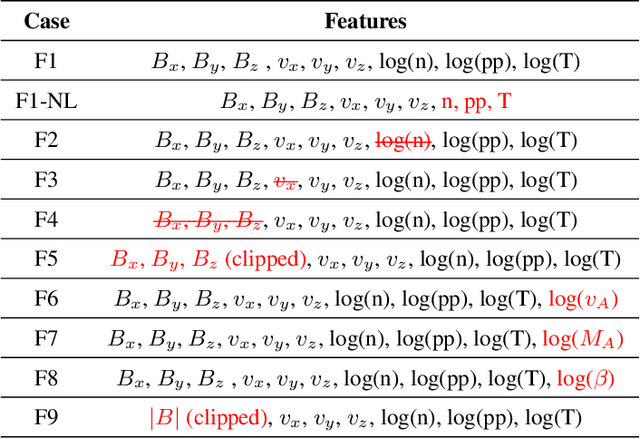

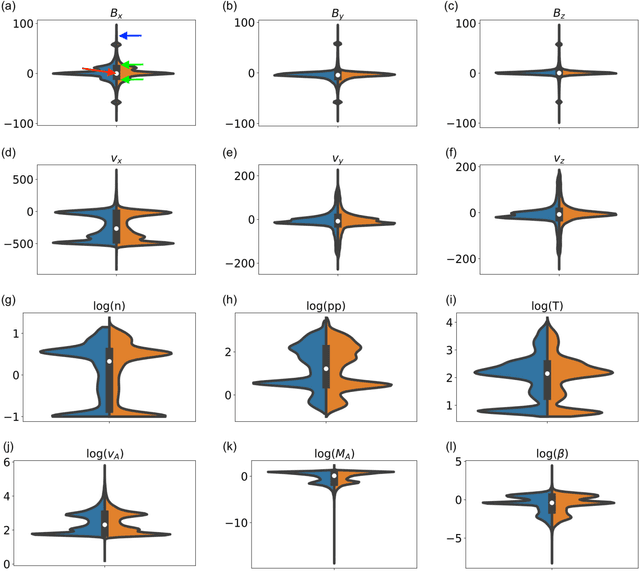

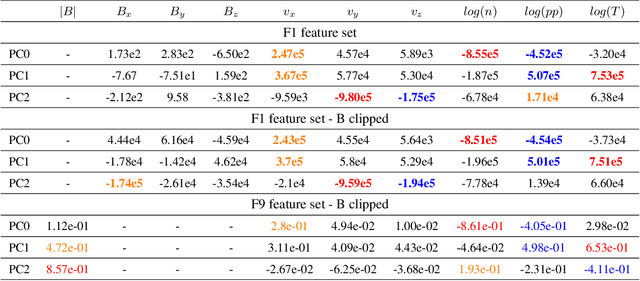

Abstract: In magnetospheric missions, burst-mode data sampling should be triggered in the presence of processes of scientific or operational interest. We present an unsupervised classification method for magnetospheric regions that could constitute the first step of a multi-step method for the automatic identification of magnetospheric processes of interest. Our method is based on Self-Organizing Maps (SOMs), and we test it preliminarily on data points from global magnetospheric simulations obtained with the OpenGGCM-CTIM-RCM code. The dimensionality of the data is reduced with Principal Component Analysis before classification. The classification relies exclusively on local plasma properties at the selected data points, without information on their neighborhood or on their temporal evolution. We classify the SOM nodes into an automatically selected number of classes, and we obtain clusters that map to well-defined magnetospheric regions. We validate our classification results by plotting the classified data in the simulated space and by comparing them with K-means classification. For the sake of interpretability, we examine the SOM feature maps (magnetospheric variables are called features in the context of classification), and we use them to extract information on the clusters. We repeat the classification experiments using different sets of features, quantitatively compare the resulting classifications, and gain insight into which magnetospheric variables make the most effective features for unsupervised classification.
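The PCA-then-SOM pipeline described above can be sketched in a few lines of NumPy. This is a minimal illustration under assumptions that are not taken from the paper: features are standardized before PCA, the map is a 5-by-5 rectangular grid initialized linearly along the first two principal components, and both the learning rate and the Gaussian neighborhood width decay linearly during training. The helper names `pca_reduce`, `train_som` and `best_matching_units` are hypothetical. The final step of grouping SOM nodes into an automatically selected number of classes is not shown; each data point is simply assigned to its best-matching node.

```python
import numpy as np


def pca_reduce(features, n_components=2):
    """Standardize each feature, then project onto the leading principal components."""
    z = (features - features.mean(axis=0)) / features.std(axis=0)
    # Rows of vt are the principal axes, ordered by decreasing singular value
    _, _, vt = np.linalg.svd(z, full_matrices=False)
    return z @ vt[:n_components].T


def train_som(data, rows=5, cols=5, epochs=30, lr0=0.5, sigma0=2.0, seed=0):
    """Train a rectangular SOM (assumed settings); `data` needs at least 2 columns."""
    rng = np.random.default_rng(seed)
    # Grid coordinates (row, col) of every node, shape (rows * cols, 2)
    grid = np.array([(r, c) for r in range(rows) for c in range(cols)], dtype=float)
    # Linear initialization: spread the nodes over the range of the first two PCs
    lo, hi = data[:, :2].min(axis=0), data[:, :2].max(axis=0)
    weights = np.zeros((rows * cols, data.shape[1]))
    weights[:, 0] = lo[0] + (hi[0] - lo[0]) * grid[:, 0] / max(rows - 1, 1)
    weights[:, 1] = lo[1] + (hi[1] - lo[1]) * grid[:, 1] / max(cols - 1, 1)
    n_steps, step = epochs * len(data), 0
    for _ in range(epochs):
        for x in data[rng.permutation(len(data))]:
            frac = step / n_steps
            lr = lr0 * (1.0 - frac)                    # linearly decaying learning rate
            sigma = max(sigma0 * (1.0 - frac), 0.5)    # shrinking neighborhood width
            bmu = np.argmin(((weights - x) ** 2).sum(axis=1))
            grid_d2 = ((grid - grid[bmu]) ** 2).sum(axis=1)
            h = np.exp(-grid_d2 / (2.0 * sigma ** 2))  # Gaussian neighborhood on the grid
            weights += lr * h[:, None] * (x - weights)
            step += 1
    return weights


def best_matching_units(data, weights):
    """Index of the closest SOM node for every data point."""
    d2 = ((data[:, None, :] - weights[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)
```

On two well-separated synthetic clusters of 6-dimensional points, the two clusters end up mapped to disjoint sets of SOM nodes. In a full pipeline the trained node weights would then be grouped into classes, and each data point would inherit the class of its best-matching node.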

Dynamic Time Warping as a New Evaluation for Dst Forecast with Machine Learning

Jun 08, 2020

Abstract: Models based on neural networks and machine learning are seeing a rise in popularity in space physics. In particular, forecasting geomagnetic indices with neural network models is becoming a popular field of study. These models are evaluated with metrics such as the root-mean-square error (RMSE) and the Pearson correlation coefficient. However, these classical metrics sometimes fail to capture crucial behavior. To show where the classical metrics are lacking, we trained a long short-term memory (LSTM) neural network on OMNIWeb data to forecast the disturbance storm time (Dst) index at origin time $t$ with forecasting horizons from 1 up to 6 hours. Evaluated with the correlation coefficient and RMSE, the model performed comparably to the latest publications. However, visual inspection showed that the neural network's predictions behaved similarly to the persistence model. In this work, a new method is proposed to measure whether two time series are shifted in time with respect to each other, such as the persistence model output versus the observation. The new measure, based on Dynamic Time Warping, can identify results made by the persistence model and shows promising results in confirming the visual observations of the neural network's output. Finally, different methodologies for training the neural network are explored in order to remove the persistence behavior from the results.
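The idea of detecting a time shift with Dynamic Time Warping can be sketched as follows. This is an illustrative construction, not the paper's exact measure: it computes a classic DTW alignment between observation and forecast inside a Sakoe-Chiba band, backtracks the optimal warping path, and counts the index offsets `j - i` along that path. A forecast that copies the observation from `h` steps earlier (persistence-like behavior) concentrates the offsets at `h`, whereas a forecast in phase with the observation concentrates them at 0. The function names and the `window` parameter are assumptions made for this example.

```python
from collections import Counter


def dtw_path(x, y, window=None):
    """Classic DTW with an optional Sakoe-Chiba band; returns (total cost, warping path)."""
    n, m = len(x), len(y)
    # The band must be at least |n - m| wide for a complete path to exist
    w = max(window if window is not None else max(n, m), abs(n - m))
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(max(1, i - w), min(m, i + w) + 1):
            d = abs(x[i - 1] - y[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    # Backtrack from the last cell, always stepping to the cheapest predecessor
    i, j = n, m
    path = [(i - 1, j - 1)]
    while (i, j) != (1, 1):
        _, i, j = min(
            (cost[i - 1][j - 1], i - 1, j - 1),
            (cost[i - 1][j], i - 1, j),
            (cost[i][j - 1], i, j - 1),
        )
        path.append((i - 1, j - 1))
    path.reverse()
    return cost[n][m], path


def time_shift_histogram(observed, forecast, window=None):
    """Count the offsets j - i between aligned observation (i) and forecast (j) indices."""
    _, path = dtw_path(observed, forecast, window)
    return Counter(j - i for i, j in path)


def persistence_forecast(series, horizon):
    """Predict each value by the value observed `horizon` steps earlier (clamped at the start)."""
    return [series[max(t - horizon, 0)] for t in range(len(series))]
```

For example, aligning `observed = [1.0, 4.0, 2.0, 8.0, 5.0, 7.0, 6.0, 3.0, 3.0, 3.0]` with `persistence_forecast(observed, 2)` yields a histogram dominated by an offset of 2. Unlike RMSE or the correlation coefficient, which compare the two series point by point, the offset histogram makes a systematic lag visible directly.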
