Jong-Ho Bae

Pulse-Width Modulation Neuron Implemented by Single Positive-Feedback Device

Aug 23, 2021

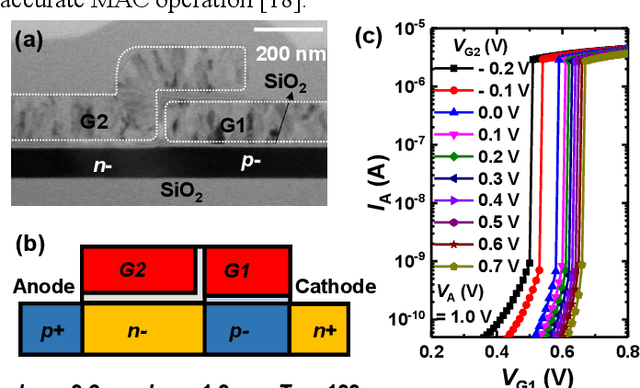

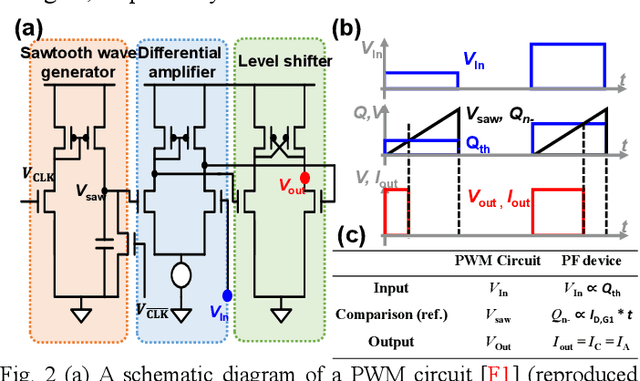

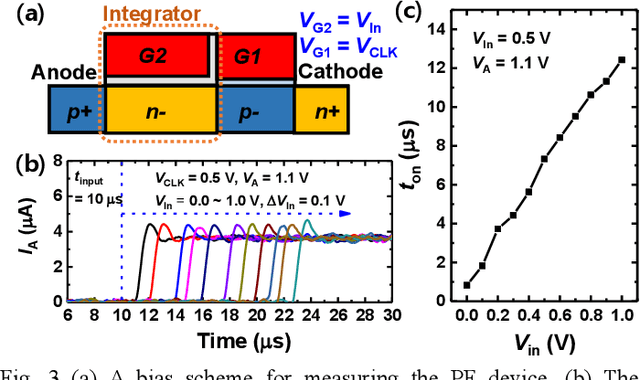

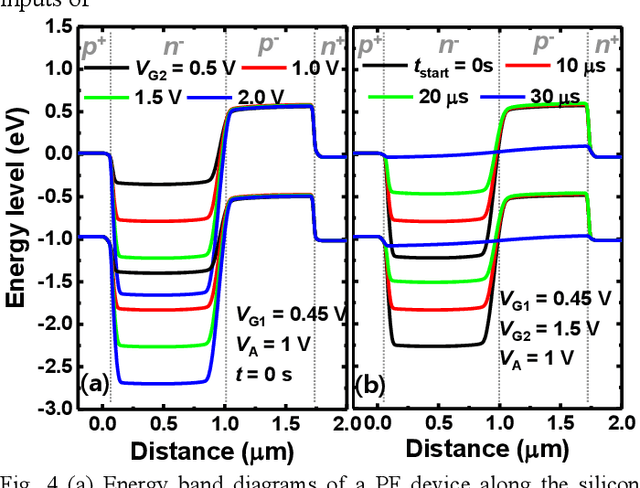

Abstract: A positive-feedback (PF) device and an operation scheme for implementing a pulse-width modulation (PWM) function are proposed and demonstrated, and the device operation mechanism behind the PWM function is analyzed. By adjusting the amount of charge stored in the n- floating body (Qn), the potential of the floating body is made to change linearly with time. When Qn reaches a threshold value (Qth), the PF device turns on abruptly. Owing to the linear time variation of Qn and the gate-bias dependence of Qth, fully functional PWM neuron properties, including voltage-to-pulse-width conversion and a hard-sigmoid activation function, were successfully obtained from a single PF device. Because a PWM neuron can be implemented with a single PF device, the area of a PWM neuron circuit can be greatly reduced compared with previously reported ones.
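The mechanism described in this abstract can be illustrated with a toy behavioral model. This is only a sketch under stated assumptions, not the authors' device model: the function names, the linear dependence of Qth on gate bias, the normalized units, and the reading of pulse width as the time until turn-on are all hypothetical choices made for illustration.

```python
def threshold_charge(v_gate, slope=1.0, offset=0.0):
    """Assumed (hypothetical) linear dependence of Qth on the gate bias."""
    return slope * v_gate + offset


def pulse_width(v_gate, q_start=0.0, charge_rate=1.0, t_max=1.0):
    """Time for Qn to ramp linearly from q_start to Qth(v_gate).

    The result is clipped to [0, t_max], which gives the output a
    hard-sigmoid shape as a function of v_gate.
    """
    if charge_rate <= 0:
        raise ValueError("charge_rate must be positive")
    t_on = (threshold_charge(v_gate) - q_start) / charge_rate
    return min(max(t_on, 0.0), t_max)


if __name__ == "__main__":
    for v in (-1.0, 0.0, 0.5, 1.0, 2.0):
        print(v, pulse_width(v))
```

In this sketch the flat regions of the hard sigmoid come purely from the clipping; the real saturation behavior depends on the device and its bias scheme.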

Adaptive Learning Rule for Hardware-based Deep Neural Networks Using Electronic Synapse Devices

Aug 19, 2017

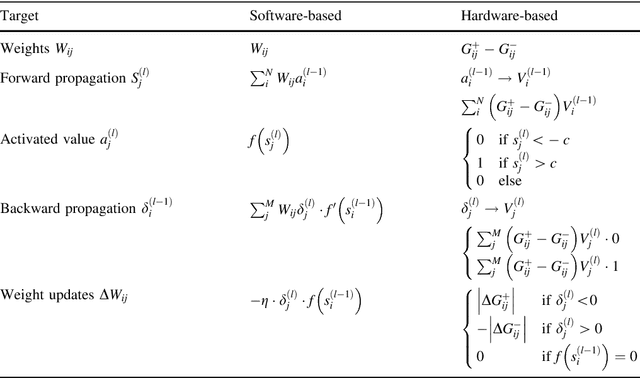

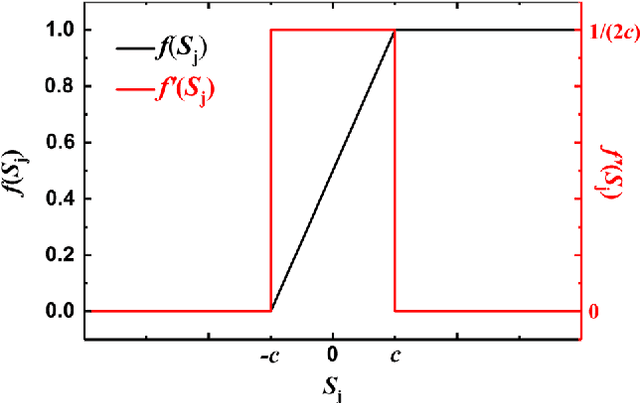

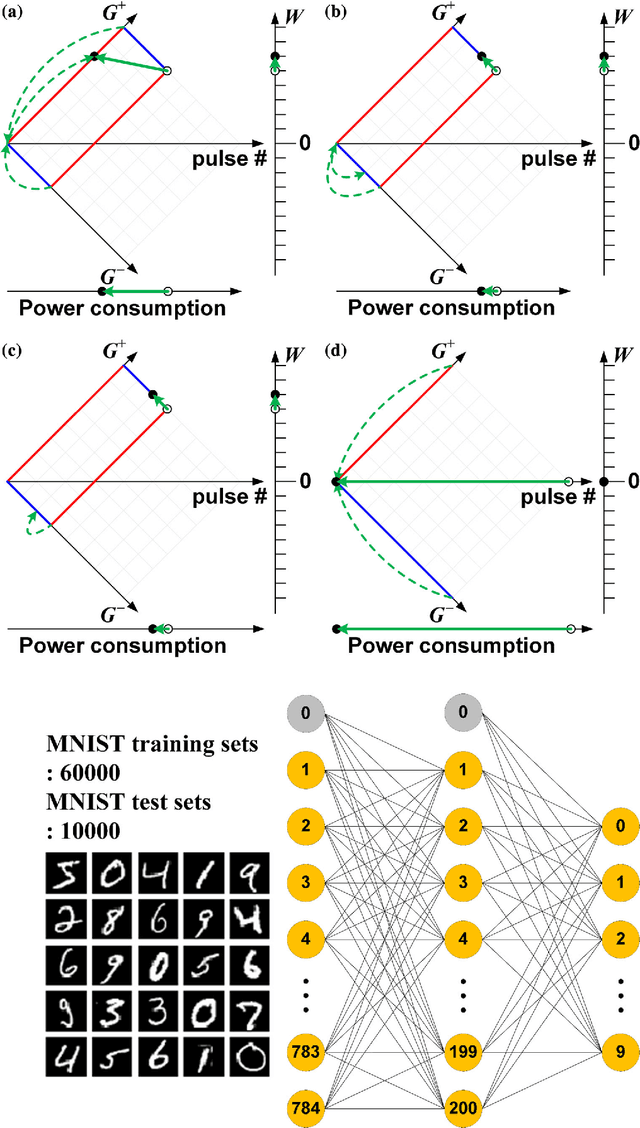

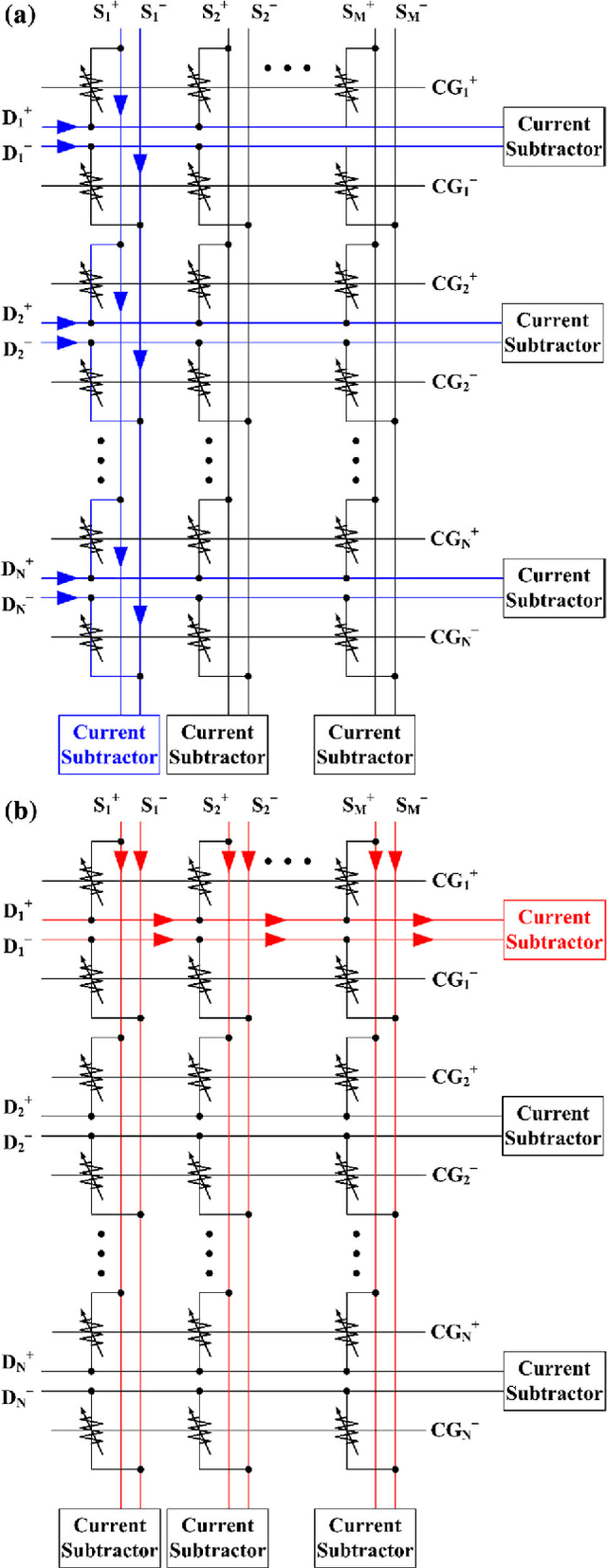

Abstract: In this paper, we propose a learning rule based on the back-propagation (BP) algorithm that can be applied to a hardware-based deep neural network (HW-DNN) using electronic devices that exhibit discrete and limited conductance characteristics. This adaptive learning rule, which enables forward propagation, backward propagation, and weight updates in hardware, is helpful for implementing power-efficient, high-speed deep neural networks. In simulations using a three-layer perceptron network, we evaluate the learning performance for various conductance responses of electronic synapse devices and for various weight-updating methods. The learning accuracy is shown to be comparable to that of a software-based BP algorithm when the electronic synapse device has a linear conductance response with a high dynamic range. Furthermore, the proposed unidirectional weight-updating method is suitable for electronic synapse devices that have nonlinear and finite conductance responses. Because this weight-updating method can compensate for the drawback of asymmetric weight updates, it achieves better accuracy than other methods. This adaptive learning rule, which can be applied to a full hardware implementation, can also compensate for the degradation of learning accuracy caused by the device-to-device variation likely to occur in actual electronic synapse devices.
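One way to picture a unidirectional update on devices with discrete, limited conductance is sketched below. It is an illustration under assumptions, not the paper's rule: it assumes each weight is the difference of two conductances (`g_plus - g_minus`), that only conductance-increasing pulses are applied, and that conductance levels are evenly spaced. All names and parameters are hypothetical.

```python
def step_up(g, g_min=0.0, g_max=1.0, levels=32):
    """Raise a conductance by one discrete level, saturating at g_max."""
    delta = (g_max - g_min) / (levels - 1)
    return min(g + delta, g_max)


def update_weight(g_plus, g_minus, grad_sign, levels=32):
    """Apply one sign-based update to a weight stored as g_plus - g_minus.

    Only potentiation is used: a negative gradient raises g_plus
    (the weight increases), a positive gradient raises g_minus
    (the weight decreases), and a zero gradient leaves both unchanged.
    """
    if grad_sign < 0:
        g_plus = step_up(g_plus, levels=levels)
    elif grad_sign > 0:
        g_minus = step_up(g_minus, levels=levels)
    return g_plus, g_minus


if __name__ == "__main__":
    gp, gm = 0.5, 0.5
    gp, gm = update_weight(gp, gm, grad_sign=-1, levels=5)
    print(gp - gm)  # effective weight after one increasing step
```

Because both conductances can only rise in this sketch, a practical scheme would also need some way to handle saturation; that part is left out here.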
