Jonathan Gemmell

Behavioral Player Rating in Competitive Online Shooter Games

Jul 01, 2022

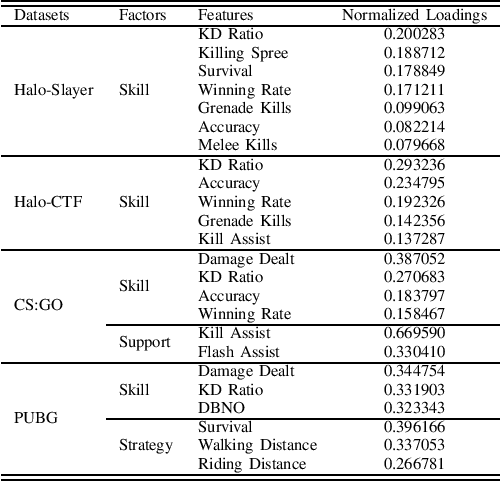

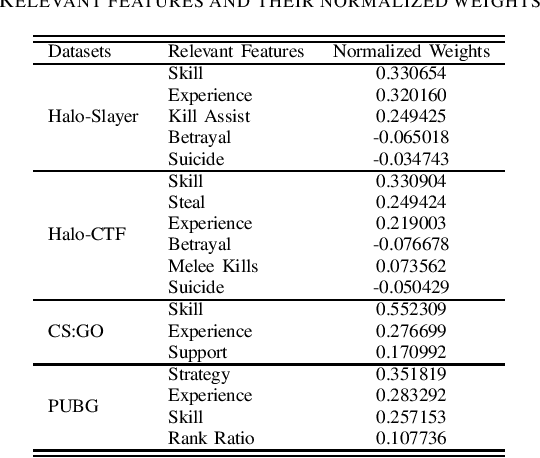

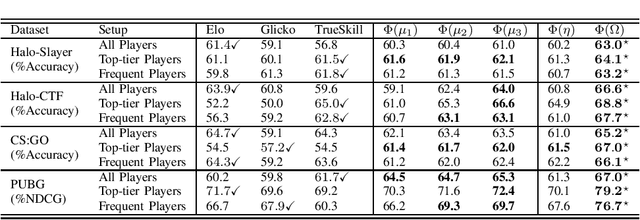

Abstract: Competitive online games use rating systems for matchmaking: progression-based algorithms that estimate the skill level of players with interpretable ratings in terms of the outcome of the games they played. However, the overall experience of players is shaped by factors beyond the sole outcome of their games. In this paper, we engineer several features from in-game statistics to model players and create ratings that accurately represent their behavior and true performance level. We then compare the estimating power of our behavioral ratings against ratings created with three mainstream rating systems by predicting the rank of players in four popular game modes from the competitive shooter genre. Our results show that the behavioral ratings present more accurate performance estimations while maintaining the interpretability of the created representations. Considering different aspects of the playing behavior of players and using behavioral ratings for matchmaking can lead to match-ups that are more aligned with players' goals and interests, consequently resulting in a more enjoyable gaming experience.

Player Modeling using Behavioral Signals in Competitive Online Games

Nov 29, 2021

Abstract: Competitive online games use rating systems to match players with similar skills to ensure a satisfying experience for players. In this paper, we focus on the importance of addressing different aspects of playing behavior when modeling players for creating match-ups. To this end, we engineer several behavioral features from a dataset of over 75,000 battle royale matches and create player models based on the retrieved features. We then use the created models to predict ranks for different groups of players in the data. The predicted ranks are compared to those of three popular rating systems. Our results show the superiority of simple behavioral models over mainstream rating systems. Some behavioral features provided accurate predictions for all groups of players while others proved useful for certain groups of players. The results of this study highlight the necessity of considering different aspects of the player's behavior such as goals, strategy, and expertise when making assignments.

How does the User's Knowledge of the Recommender Influence their Behavior?

Sep 02, 2021

Abstract: Recommender systems have become a ubiquitous part of modern web applications. They help users discover new and relevant items. Today's users, through years of interaction with these systems, have developed an inherent understanding of how recommender systems function, what their objectives are, and how the user might manipulate them. We describe this understanding as the Theory of the Recommender. In this study, we conducted semi-structured interviews with forty recommender system users to empirically explore the relevant factors influencing user behavior. Our findings, based on a rigorous thematic analysis of the collected data, suggest that users possess an intuitive and sophisticated understanding of the recommender system's behavior. We also found that users, based upon their understanding, attitude, and intentions, change their interactions to evoke desired recommender behavior. Finally, we discuss the potential implications of such user behavior on recommendation performance.

Evaluating Team Skill Aggregation in Online Competitive Games

Jun 21, 2021

Abstract: One of the main goals of online competitive games is increasing player engagement by ensuring fair matches. These games use rating systems for creating balanced match-ups. Rating systems leverage statistical estimation to rate players' skills and use skill ratings to predict rank before matching players. Skill ratings of individual players can be aggregated to compute the skill level of a team. While research often aims to improve the accuracy of skill estimation and fairness of match-ups, less attention has been given to how the skill level of a team is calculated from the skill level of its members. In this paper, we propose two new aggregation methods and compare them with a standard approach extensively used in the research literature. We present an exhaustive analysis of the impact of these methods on the predictive performance of rating systems. We perform our experiments using three popular rating systems, Elo, Glicko, and TrueSkill, on three real-world datasets including over 100,000 battle royale and head-to-head matches. Our evaluations show the superiority of the MAX method over the other two methods in the majority of the tested cases, implying that the overall performance of a team is best determined by the performance of its most skilled member. The results of this study highlight the necessity of devising more elaborate methods for calculating a team's performance -- methods covering different aspects of players' behavior such as skills, strategy, or goals.

The Evaluation of Rating Systems in Team-based Battle Royale Games

May 28, 2021

Abstract: Online competitive games have become a mainstream entertainment platform. To create a fair and exciting experience, these games use rating systems to match players with similar skills. While there has been an increasing amount of research on improving the performance of these systems, less attention has been paid to how their performance is evaluated. In this paper, we explore the utility of several metrics for evaluating three popular rating systems on a real-world dataset of over 25,000 team battle royale matches. Our results suggest considerable differences in their evaluation patterns. Some metrics were highly impacted by the inclusion of new players. Many could not capture the real differences between certain groups of players. Among all metrics studied, normalized discounted cumulative gain (NDCG) demonstrated more reliable performance and more flexibility. It alleviated most of the challenges faced by the other metrics while adding the freedom to adjust the focus of the evaluations on different groups of players.
