Joel A. Góngora

Unsupervised Instance Selection with Low-Label, Supervised Learning for Outlier Detection

Apr 26, 2021

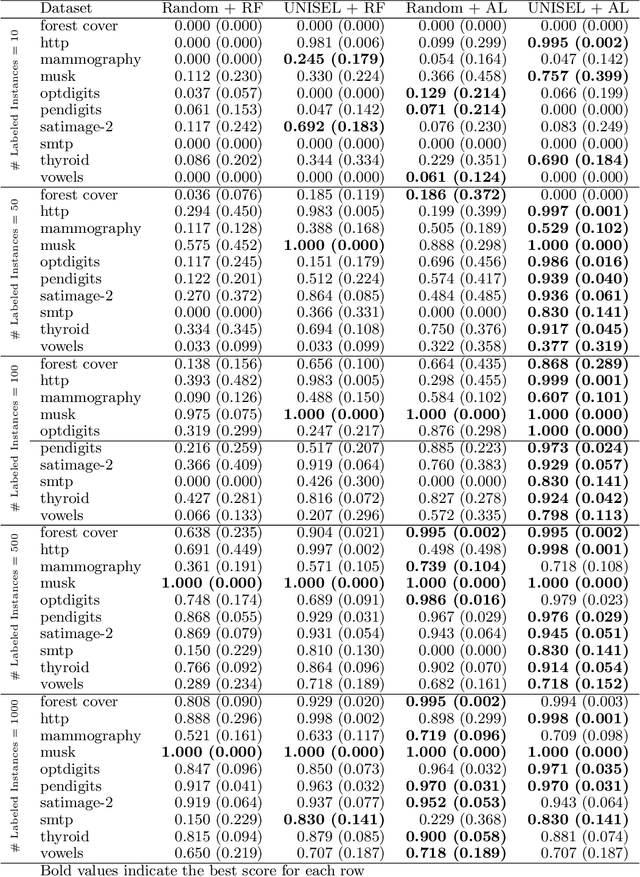

Abstract: The laborious process of labeling data often bottlenecks projects that aim to leverage the power of supervised machine learning. Active Learning (AL) has been established as a technique to ameliorate this condition through an iterative framework that queries a human annotator for labels of instances with the most uncertain class assignment. Via this mechanism, AL produces a binary classifier trained on less labeled data but with little, if any, loss in predictive performance. Despite its advantages, AL can have difficulty with class-imbalanced datasets and can result in an inefficient labeling process. To address these drawbacks, we investigate our unsupervised instance selection (UNISEL) technique followed by a Random Forest (RF) classifier on 10 outlier detection datasets under low-label conditions. These results are compared to AL performed on the same datasets. Further, we investigate the combination of UNISEL and AL. Results indicate that UNISEL followed by an RF performs comparably to AL with an RF and that the combination of UNISEL and AL demonstrates superior performance. The practical implications of these findings in terms of time savings and generalizability afforded by UNISEL are discussed.
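The query step that the abstract attributes to AL (asking the annotator about the instances with the most uncertain class assignment) can be illustrated with a minimal sketch. It assumes a binary classifier that has already produced positive-class probabilities for an unlabeled pool. The function name `most_uncertain` and the toy probabilities are hypothetical, and the sketch shows only generic uncertainty sampling, not UNISEL or the paper's exact AL procedure.

```python
def most_uncertain(probabilities, k=1):
    """Return indices of the k pool instances whose positive-class
    probability lies closest to 0.5, the binary decision boundary."""
    ranked = sorted(
        range(len(probabilities)),
        key=lambda i: abs(probabilities[i] - 0.5),
    )
    return ranked[:k]


# Toy positive-class probabilities for five unlabeled instances (made up).
pool_probs = [0.95, 0.52, 0.10, 0.45, 0.80]

# Indices to send to the human annotator in this round.
query = most_uncertain(pool_probs, k=2)
print(query)
```

In a full AL loop, the returned instances would be labeled, added to the training set, and the classifier retrained before the next query round.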

Towards automatic extractive text summarization of A-133 Single Audit reports with machine learning

Nov 08, 2019

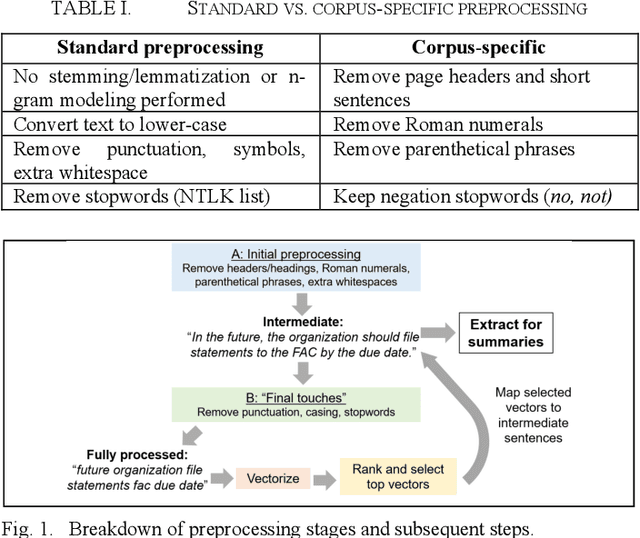

Abstract: The rapid growth of text data has motivated the development of machine-learning-based automatic text summarization strategies that concisely capture the essential ideas in a larger text. This study aimed to devise an extractive summarization method for A-133 Single Audits, which assess whether recipients of federal grants are compliant with program requirements for use of federal funding. Currently, these voluminous audits must be manually analyzed by officials for oversight, risk management, and prioritization purposes. Automated summarization has the potential to streamline these processes. Analysis focused on the "Findings" section of ~20,000 Single Audits spanning 2016-2018. Following text preprocessing and GloVe embedding, sentence-level k-means clustering was performed to partition sentences by topic and to establish the importance of each sentence. For each audit, key summary sentences were extracted by proximity to cluster centroids. Summaries were judged by non-expert human evaluation and compared to human-generated summaries using the ROUGE metric. Though the goal was to fully automate summarization of A-133 audits, human input was required at various stages due to large variability in audit writing style, content, and context. Examples of human inputs include the number of clusters, the choice to keep or discard certain clusters based on their content relevance, and the definition of a top sentence. Overall, this approach made progress towards automated extractive summaries of A-133 audits, with future work to focus on full automation and improving summary consistency. This work highlights the inherent difficulty and subjective nature of automated summarization in a real-world application.
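The extraction step described in the abstract (choosing summary sentences by proximity to cluster centroids) can be sketched as follows. This is an illustration under stated assumptions, not the authors' pipeline: the 2-D vectors stand in for GloVe sentence embeddings, the cluster labels are assumed to come from a prior k-means run, one sentence is picked per cluster, and the names `centroid` and `top_sentences` are hypothetical.

```python
import math


def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    dim = len(vectors[0])
    return [sum(v[d] for v in vectors) / len(vectors) for d in range(dim)]


def top_sentences(sentences, vectors, labels):
    """For each cluster, pick the sentence whose vector is nearest
    (Euclidean distance) to that cluster's centroid."""
    clusters = {}
    for idx, label in enumerate(labels):
        clusters.setdefault(label, []).append(idx)

    picks = {}
    for label, members in clusters.items():
        center = centroid([vectors[i] for i in members])
        best = min(members, key=lambda i: math.dist(vectors[i], center))
        picks[label] = sentences[best]
    return picks


# Placeholder sentences and toy 2-D "embeddings" (made up for illustration).
sentences = ["s0", "s1", "s2", "s3", "s4", "s5"]
vectors = [[0, 0], [1, 0], [5, 0], [10, 10], [10, 11], [10, 15]]
labels = [0, 0, 0, 1, 1, 1]  # assumed output of a k-means step

summary = top_sentences(sentences, vectors, labels)
print(summary)
```

The abstract notes that the number of clusters, which clusters to keep, and the definition of a top sentence all required human input, so each of those would be a tunable choice around a function like this one.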
