Jingcheng Zhang

Neural Network Structure Design based on N-Gauss Activation Function

Jun 01, 2021

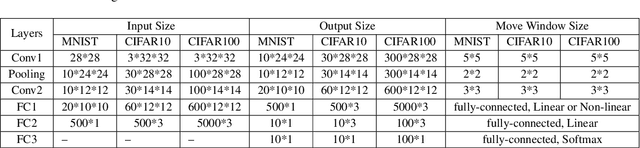

Abstract: Recent work has shown that if the activation function of a convolutional neural network satisfies the Lipschitz condition, a corresponding network structure can be constructed according to the scale of the data set, and the data set can be trained more deeply, more accurately, and more effectively. In this article, we build on these experimental results and introduce a neural network structure design whose core block is N-Gauss, N-Gauss, and Swish (Conv1, Conv2, FC1), and use it to train on MNIST, CIFAR10, and CIFAR100 respectively. Experiments show that N-Gauss makes full use of the nonlinear modeling role of activation functions, giving deep convolutional neural networks the ability to learn hierarchical nonlinear mappings. At the same time, on simple, small data sets with a one-dimensional (single) channel, the training performance of N-Gauss is equivalent to that of ReLU and Swish.
