Jing Xi Liu

Making Recommender Systems More Knowledgeable: A Framework to Incorporate Side Information

Jun 02, 2024

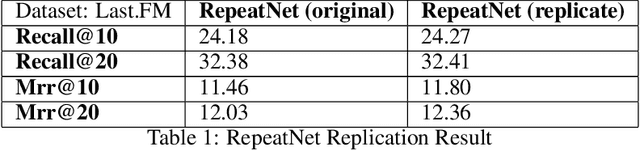

Abstract: Session-based recommender systems typically use only the triplet (user_id, timestamp, item_id) to predict users' next actions. In this paper, we aim to use side information to help recommender systems capture patterns and signals that would otherwise go undetected. Specifically, we propose a general framework for incorporating item-specific side information into a recommender system to enhance its performance without much modification to the original model architecture. Experimental results on several models and datasets show that, with side information, our recommender system outperforms state-of-the-art models by a considerable margin and converges much faster. Additionally, we propose a new type of loss to regularize the attention mechanism used by recommender systems and evaluate its influence on model performance. Finally, through analysis, we offer a few insights on potential further improvements.

Intelligent Text-Conditioned Music Generation

Jun 02, 2024

Abstract: CLIP (Contrastive Language-Image Pre-Training) is a multimodal neural network trained on (text, image) pairs to predict the most relevant text caption given an image. It has been used extensively in image generation by connecting its output with a generative model such as VQGAN, with the most notable example being OpenAI's DALL-E 2. In this project, we apply a similar approach to bridge the gap between natural language and music. Our approach has two steps: first, we train a CLIP-like model on pairs of text and music with a contrastive loss to align a piece of music with its most probable text caption. Then, we combine the alignment model with a music decoder to generate music. To the best of our knowledge, this is the first attempt at text-conditioned deep music generation. Our experiments show that it is possible to train the text-music alignment model using contrastive loss and to train a decoder to generate music from text prompts.
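
The abstract does not spell out the exact form of the contrastive loss. As an illustration only, the following is a minimal sketch of the symmetric, CLIP-style contrastive objective that a text-music alignment model of this kind might use. The function names, the temperature value of 0.07, and the plain-list embedding format are all assumptions made for this example; they are not taken from the paper.

```python
import math


def _normalize(vec):
    """Scale a non-zero vector to unit length (assumes at least one non-zero entry)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec]


def _diagonal_cross_entropy(logits):
    """Mean cross-entropy over rows, where the correct class for row i is column i."""
    total = 0.0
    for i, row in enumerate(logits):
        row_max = max(row)  # subtract the max for a numerically stable log-sum-exp
        log_sum_exp = row_max + math.log(sum(math.exp(x - row_max) for x in row))
        total += log_sum_exp - row[i]
    return total / len(logits)


def symmetric_contrastive_loss(text_emb, music_emb, temperature=0.07):
    """Hypothetical CLIP-style loss: text_emb[i] and music_emb[i] form a matched pair.

    The temperature is an assumed hyperparameter, not a value from the paper.
    """
    text = [_normalize(v) for v in text_emb]
    music = [_normalize(v) for v in music_emb]
    # Cosine similarity between every text and every music clip, scaled by temperature.
    logits = [
        [sum(a * b for a, b in zip(t, m)) / temperature for m in music]
        for t in text
    ]
    logits_transposed = [list(col) for col in zip(*logits)]
    # Average the text-to-music and music-to-text directions.
    return 0.5 * (
        _diagonal_cross_entropy(logits) + _diagonal_cross_entropy(logits_transposed)
    )
```

In this sketch, the loss is small when each text embedding is most similar to its own music embedding and large when the pairs are mismatched, which is the behaviour an alignment model would be trained to produce.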
