Jens Eckstein

Training one model to detect heart and lung sound events from single point auscultations

Jan 15, 2023

Abstract: Objective: This work proposes a semi-supervised training approach for detecting lung and heart sounds simultaneously with a single trained model, invariant to the auscultation point. Methods: We use open-access data from the 2016 PhysioNet/CinC Challenge, the 2022 George Moody Challenge, and the lung sound database HF_V1. We first train specialist single-task models using foreground ground truth (GT) labels from different auscultation databases to identify background sound events in the respective lung and heart auscultation databases. The pseudo-labels generated in this way were combined with the ground truth labels in a new training iteration, in which a new model was trained to detect both foreground and background signals. Benchmark tests ensured that the newly trained model could detect both lung and heart sound events at different auscultation sites without regressing on the original task. We also established hand-validated labels for the respective background signal in heart and lung sound auscultations to evaluate the models. Results: We report, for the first time, results for i) multi-class prediction of lung sound events and ii) simultaneous detection of heart and lung sound events, and we achieve competitive results using only one model. The combined multi-task model regressed slightly in heart sound detection and gained significantly in lung sound detection accuracy, with an overall macro F1 score of 39.2% over six classes, representing a 6.7% improvement over the single-task baseline models. Conclusion/Significance: To the best of our knowledge, this is the first approach developed to date for measuring heart and lung sound events invariant to both the auscultation site and the capturing device. Hence, our model is capable of performing lung and heart sound detection from any auscultation location.
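The macro F1 score quoted above is the unweighted mean of the per-class F1 scores, so rare classes count as much as frequent ones. The following is a minimal sketch of that metric, assuming a simple one-label-per-segment comparison; the function name `macro_f1` and the class labels are hypothetical, and the evaluation protocol used in the paper (for example, event-based matching) may differ.

```python
from collections import Counter


def macro_f1(y_true, y_pred, classes):
    """Return the unweighted mean of per-class F1 scores.

    Assumes one reference label and one predicted label per segment.
    A class that never appears in either list contributes an F1 of 0.
    """
    tp, fp, fn = Counter(), Counter(), Counter()
    for truth, pred in zip(y_true, y_pred):
        if truth == pred:
            tp[truth] += 1
        else:
            fp[pred] += 1   # predicted class was wrong
            fn[truth] += 1  # true class was missed

    scores = []
    for c in classes:
        denom = 2 * tp[c] + fp[c] + fn[c]
        scores.append(2 * tp[c] / denom if denom else 0.0)
    return sum(scores) / len(classes)


# Hypothetical labels, for illustration only.
reference = ["heart", "heart", "lung", "lung"]
predicted = ["heart", "lung", "lung", "lung"]
score = macro_f1(reference, predicted, classes=["heart", "lung"])
```

Because every class is weighted equally, a gain on several lung sound classes can raise the macro score even if heart sound detection drops slightly.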

Visualization and Analysis of Wearable Health Data From COVID-19 Patients

Jan 19, 2022

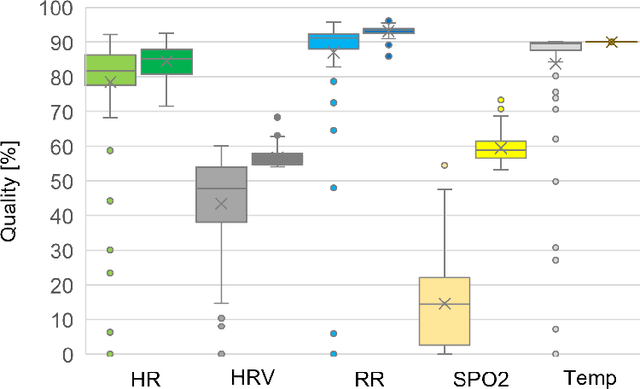

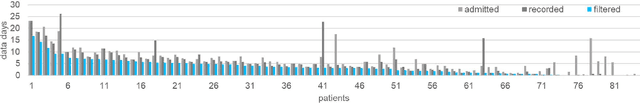

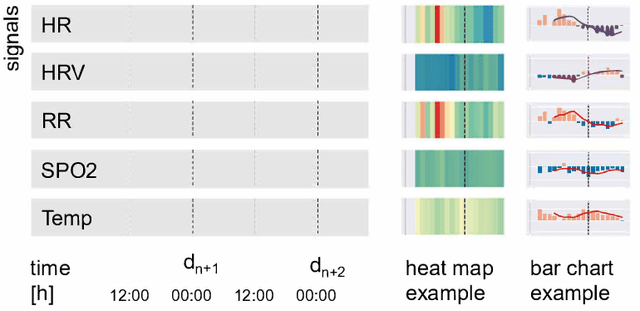

Abstract: Effective visualizations were evaluated to reveal relevant health patterns from multi-sensor real-time wearable devices that recorded vital signs from patients admitted to hospital with COVID-19. Furthermore, specific challenges associated with wearable health data visualizations are described, such as fluctuating data quality resulting from compliance problems, the time needed to charge the device, and technical problems. As a primary use case, we examined the detection and communication of relevant health patterns visible in the vital signs acquired by the technology. Customized heat maps and bar charts were used to specifically highlight medically relevant patterns in vital signs. A survey of two medical doctors, one clinical project manager, and seven health data science researchers was conducted to evaluate the visualization methods. From a dataset of 84 hospitalized COVID-19 patients, we extracted one typical COVID-19 patient history and, based on the visualizations, showcased the health history of two noteworthy patients. The visualizations were shown to be effective, simple, and intuitive in deducing the health status of patients. For clinical staff who are time-constrained and responsible for numerous patients, such visualization methods can be an effective tool to enable continuous acquisition and monitoring of patients' health statuses, even remotely.
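One way to prepare wearable vital-sign data for a heat map while keeping gaps visible is to average samples into a day-by-hour grid and leave empty cells unset. The sketch below illustrates that idea only; the grid layout, the function name `hourly_grid`, and the sample values are assumptions and are not taken from the paper.

```python
def hourly_grid(samples, n_days):
    """Average samples into an n_days x 24 grid for a heat map.

    samples: iterable of (day_index, hour, value) tuples, with
             0 <= day_index < n_days and 0 <= hour < 24.
    Cells with no samples stay None, so gaps caused by charging or
    non-compliance remain visible instead of being filled in.
    """
    sums = [[0.0] * 24 for _ in range(n_days)]
    counts = [[0] * 24 for _ in range(n_days)]
    for day, hour, value in samples:
        sums[day][hour] += value
        counts[day][hour] += 1
    return [
        [sums[d][h] / counts[d][h] if counts[d][h] else None for h in range(24)]
        for d in range(n_days)
    ]


# Hypothetical heart-rate samples (beats per minute), for illustration only.
grid = hourly_grid([(0, 8, 70.0), (0, 8, 80.0), (1, 23, 60.0)], n_days=2)
```

A plotting library can then render `None` cells in a neutral colour to distinguish missing data from low readings.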
