Jens Dittrich

Saarland University

Make Thunderbolts Less Frightening -- Predicting Extreme Weather Using Deep Learning

Dec 06, 2019

Abstract: Forecasting severe weather conditions is still a very challenging and computationally expensive task due to the enormous amount of data and the complexity of the underlying physics. In recent years, however, machine learning approaches, and especially deep learning, have shown huge improvements in many research areas dealing with large datasets. In this work, we tackle one specific sub-problem of weather forecasting, namely the prediction of thunderstorms and lightning. We propose the use of a convolutional neural network architecture inspired by UNet++ and ResNet to predict thunderstorms as a binary classification problem based on satellite images and lightning recorded in the past. We achieve a probability of detection of more than 94% for lightning within the next 15 minutes while at the same time minimizing the false alarm ratio compared to previous approaches.
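The two scores named in the abstract are standard forecast verification measures: probability of detection is the fraction of observed events that were forecast, and false alarm ratio is the fraction of forecast events that did not occur. The following is a minimal sketch of how they can be computed from binary confusion counts; the function name `verification_scores` and the example counts are illustrative assumptions, not taken from the paper.

```python
import math


def verification_scores(hits, false_alarms, misses):
    """Return (POD, FAR) for a binary event forecast.

    hits         -- events that were forecast and observed (true positives)
    false_alarms -- events that were forecast but not observed (false positives)
    misses       -- events that were observed but not forecast (false negatives)
    """
    observed = hits + misses
    forecast = hits + false_alarms
    # Return NaN when a score is undefined (no observed or no forecast events).
    pod = hits / observed if observed else math.nan
    far = false_alarms / forecast if forecast else math.nan
    return pod, far


# Hypothetical counts, chosen only to illustrate the arithmetic.
pod, far = verification_scores(hits=94, false_alarms=6, misses=6)
print(f"POD = {pod:.2f}, FAR = {far:.2f}")
```

A high POD alone is not informative, since a forecast that always predicts lightning reaches a POD of 1; this is why the abstract reports it together with the false alarm ratio.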

The Error is the Feature: how to Forecast Lightning using a Model Prediction Error

Nov 23, 2018

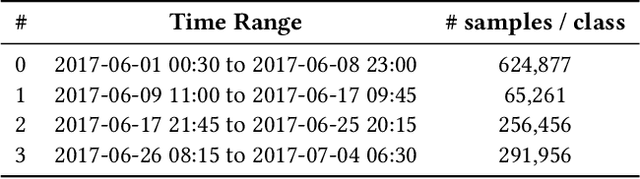

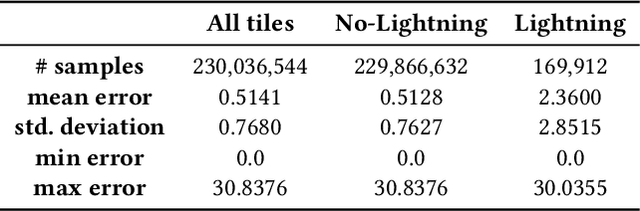

Abstract: Despite the progress throughout the last decades, weather forecasting is still a challenging and computationally expensive task. Most models currently operated by meteorological services around the world rely on numerical weather prediction, a system based on mathematical algorithms describing physical effects. Recent progress in artificial intelligence, however, demonstrates that machine learning can be successfully applied to many research fields, especially areas dealing with big data that can be used for training. Current approaches to predicting thunderstorms often focus on indices describing temperature differences in the atmosphere. If these indices reach a critical threshold, the forecast system emits a thunderstorm warning. Other meteorological systems such as radar and lightning detection systems are added for a more precise prediction. This paper describes a new approach to the prediction of lightning based on machine learning rather than complex numerical computations. The error of optical flow algorithms applied to images of meteorological satellites is interpreted as a sign of convection potentially leading to thunderstorms. These results are used as the basis for the feature generation phase, which incorporates different convolution steps. Tree classifier models are then trained on these features to predict lightning within the next few hours (called nowcasting). The evaluation section compares the predictive power of the different models and the impact of different features on the classification result.
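The core idea, using the prediction error of a motion model as a feature, can be illustrated with a small sketch. It deliberately swaps the dense optical flow algorithm mentioned in the abstract for a much simpler stand-in: a single global shift found by brute-force search. The function names, the `max_shift` parameter, and the synthetic frames are assumptions made for illustration only.

```python
import numpy as np


def estimate_shift(prev, curr, max_shift=3):
    """Find the integer (dy, dx) shift that best maps prev onto curr.

    Simplified stand-in for optical flow: one global translation,
    found by exhaustive search over a small window.
    """
    best, best_err = (0, 0), np.inf
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            shifted = np.roll(prev, (dy, dx), axis=(0, 1))
            err = np.mean((shifted - curr) ** 2)
            if err < best_err:
                best, best_err = (dy, dx), err
    return best


def prediction_error(frame_t0, frame_t1, frame_t2, max_shift=3):
    """Extrapolate the motion from t0 -> t1 to t2 and return the absolute error map."""
    dy, dx = estimate_shift(frame_t0, frame_t1, max_shift)
    predicted_t2 = np.roll(frame_t1, (dy, dx), axis=(0, 1))
    return np.abs(predicted_t2 - frame_t2)


# Synthetic frames: a field drifting by (1, 2) pixels per step (with wrap-around),
# plus one pixel that brightens unexpectedly in the last frame.
rng = np.random.default_rng(0)
f0 = rng.random((16, 16))
f1 = np.roll(f0, (1, 2), axis=(0, 1))
f2 = np.roll(f1, (1, 2), axis=(0, 1))
f2[5, 5] += 1.0  # change that pure motion cannot explain

error_map = prediction_error(f0, f1, f2)
print(np.unravel_index(error_map.argmax(), error_map.shape))
```

Pixels that pure advection explains produce no error, while the unexpected change stands out in the error map. In the approach described above, error maps of this kind feed the convolution-based feature generation and the tree classifiers.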

The Case for Automatic Database Administration using Deep Reinforcement Learning

Jan 17, 2018

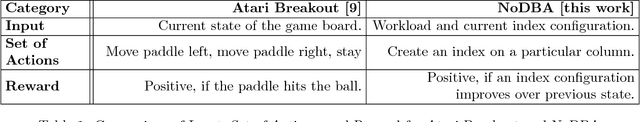

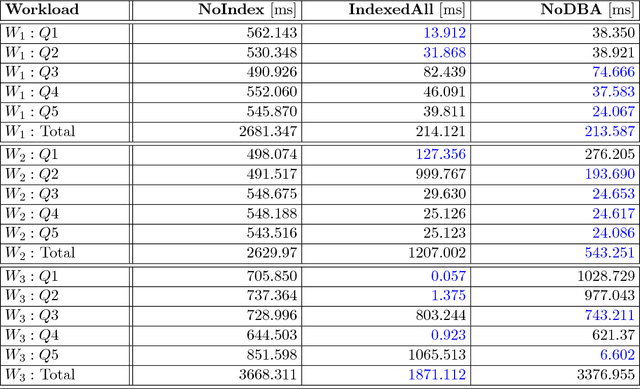

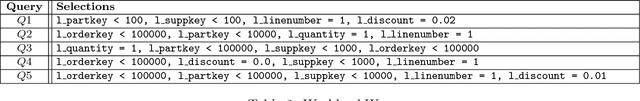

Abstract: Like any large software system, a full-fledged DBMS offers an overwhelming number of configuration knobs. These range from static initialisation parameters like buffer sizes, degree of concurrency, or level of replication to complex runtime decisions like creating a secondary index on a particular column or reorganising the physical layout of the store. To simplify the configuration, industry-grade DBMSs are usually shipped with various advisory tools that provide recommendations for given workloads and machines. However, reality shows that the actual configuration, tuning, and maintenance are usually still done by a human administrator, relying on intuition and experience. Recent work on deep reinforcement learning has shown very promising results in solving problems that require such a sense of intuition. For instance, it has been applied very successfully in learning how to play complicated games with enormous search spaces. Motivated by these achievements, in this work we explore how deep reinforcement learning can be used to administer a DBMS. First, we describe how deep reinforcement learning can be used to automatically tune an arbitrary software system like a DBMS by defining a problem environment. Second, we showcase our concept of NoDBA using the concrete example of index selection and evaluate how well it recommends indexes for given workloads.
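To make the notion of a "problem environment" more concrete, the following is a minimal sketch of index selection framed as a reinforcement learning environment with states, actions, and rewards. It is not the NoDBA implementation: the class name `IndexSelectionEnv`, the toy cost model based on per-column selectivities, and the index budget are all hypothetical choices made for illustration.

```python
class IndexSelectionEnv:
    """Toy environment: the agent picks one column to index per step.

    workload  -- list of queries, each a dict {column: selectivity in (0, 1]}
    n_columns -- number of columns in the (hypothetical) table
    budget    -- maximum number of indexes the agent may create
    """

    def __init__(self, workload, n_columns, budget):
        self.workload = workload
        self.n_columns = n_columns
        self.budget = budget
        self.reset()

    def reset(self):
        self.indexed = set()
        return self._state()

    def _state(self):
        # State: one bit per column, 1 if the column currently has an index.
        return tuple(int(c in self.indexed) for c in range(self.n_columns))

    def _cost(self):
        # Hypothetical cost model: a query costs 1.0 (full scan) unless one of
        # its columns is indexed, in which case it costs the best selectivity.
        total = 0.0
        for query in self.workload:
            usable = [sel for col, sel in query.items() if col in self.indexed]
            total += min(usable) if usable else 1.0
        return total

    def step(self, column):
        # Action: create an index on `column`; reward: workload cost saved.
        before = self._cost()
        self.indexed.add(column)
        reward = before - self._cost()
        done = len(self.indexed) >= self.budget
        return self._state(), reward, done


workload = [{0: 0.1, 1: 0.5}, {1: 0.2}, {2: 0.9}]
env = IndexSelectionEnv(workload, n_columns=3, budget=2)
state, reward, done = env.step(1)
print(state, round(reward, 2), done)
```

A learning agent would interact with such an environment repeatedly, and a real system would replace the toy cost model with measured or estimated query costs from the DBMS.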
