Jeff Hajewski

gBeam-ACO: a greedy and faster variant of Beam-ACO

Apr 23, 2020

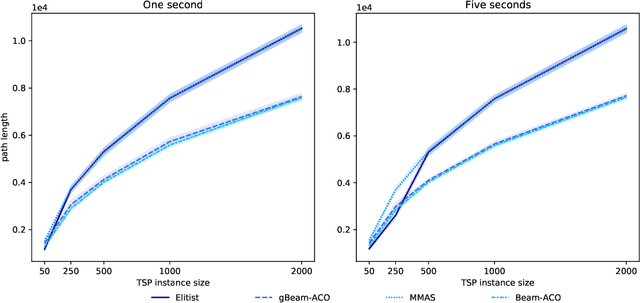

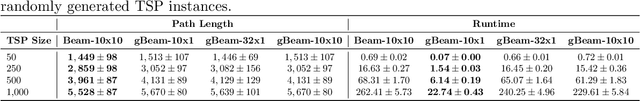

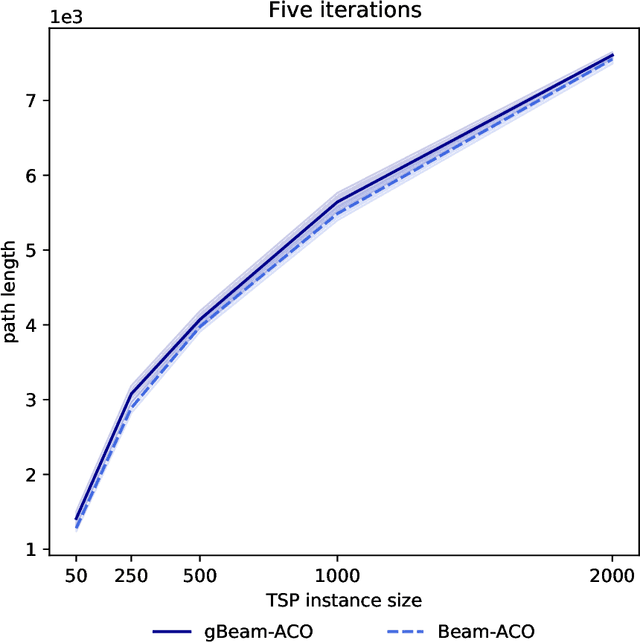

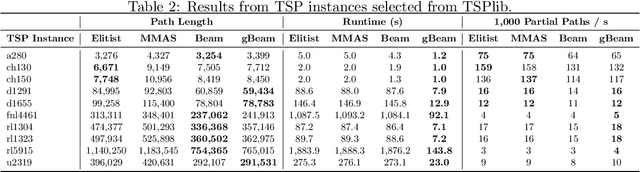

Abstract: Beam-ACO, a modification of the traditional Ant Colony Optimization (ACO) algorithms that incorporates a modified beam search, is one of the most effective ACO algorithms for solving the Traveling Salesman Problem (TSP). Although adding beam search to the ACO heuristic search process is effective, it also increases the amount of work (in terms of partial paths) done by the algorithm at each step. In this work, we introduce a greedy variant of Beam-ACO that uses a greedy path selection heuristic. The exploitation of the greedy path selection is offset by the exploration required in maintaining the beam of paths. This approach has the added benefit of avoiding costly calls to a random number generator, and it reduces the algorithm's internal state, making it simpler to parallelize. Our experiments demonstrate that our greedy Beam-ACO (gBeam-ACO) is not only faster than traditional Beam-ACO, in some cases by an order of magnitude, but also does not sacrifice the quality of the solutions found, especially on large TSP instances. We also found that gBeam-ACO was less dependent on hyperparameter settings.
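The abstract does not give the algorithm's details, so the following is only a minimal sketch of the general idea it describes: partial tours are kept in a beam and extended greedily, with no random sampling. The function names (`greedy_beam_tour`, `tour_length`), the parameters `beam_width`, `alpha`, and `beta`, and the scoring rule (the standard ACO desirability, pheromone raised to `alpha` times inverse distance raised to `beta`) are assumptions made for illustration, not the authors' implementation. Pheromone updates between iterations are omitted.

```python
import math


def tour_length(dist, path):
    """Length of the closed tour that visits `path` in order and returns to the start."""
    n = len(path)
    return sum(dist[path[i]][path[(i + 1) % n]] for i in range(n))


def greedy_beam_tour(dist, pheromone, beam_width=3, alpha=1.0, beta=2.0):
    """Build one tour from city 0 using a deterministic beam search.

    Assumes dist[i][j] > 0 and pheromone[i][j] > 0 for all i != j.
    """
    n = len(dist)
    # Each beam entry is (log-score of the partial path, partial path).
    beam = [(0.0, [0])]
    for _ in range(n - 1):
        candidates = []
        for score, path in beam:
            last = path[-1]
            visited = set(path)
            for city in range(n):
                if city in visited:
                    continue
                # Assumed desirability: pheromone^alpha * (1 / distance)^beta.
                weight = (pheromone[last][city] ** alpha) * (
                    (1.0 / dist[last][city]) ** beta
                )
                candidates.append((score + math.log(weight), path + [city]))
        # Greedy step: keep the best-scoring extensions; no random numbers are drawn.
        candidates.sort(key=lambda c: c[0], reverse=True)
        beam = candidates[:beam_width]
    # Return the shortest complete tour left in the beam.
    best = min((path for _, path in beam), key=lambda p: tour_length(dist, p))
    return best, tour_length(dist, best)
```

Because each step is a deterministic top-`beam_width` selection, the only state carried between steps is the beam itself, which is consistent with the abstract's remark about reduced internal state and easier parallelization.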

Distributed Evolution of Deep Autoencoders

Apr 16, 2020

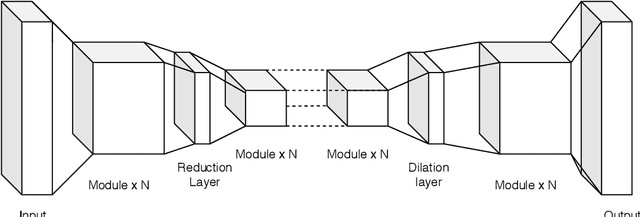

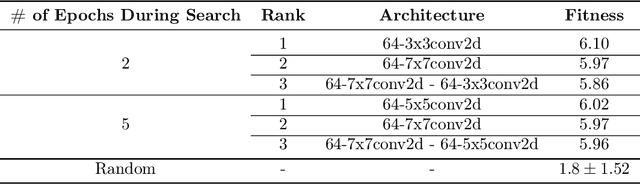

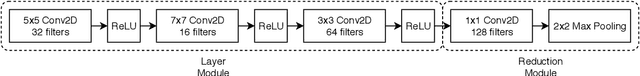

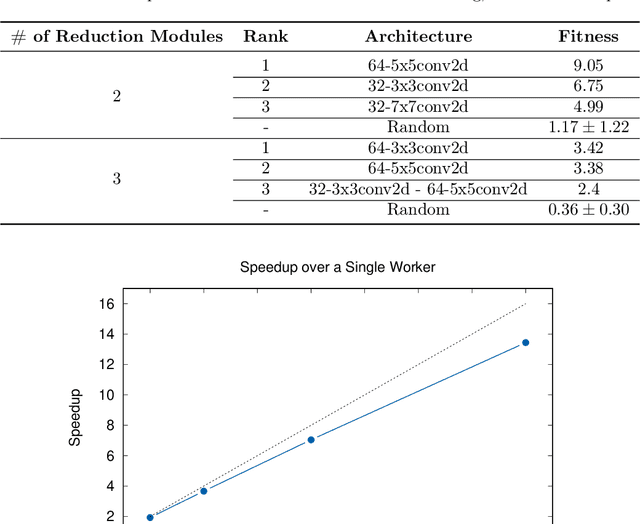

Abstract: Autoencoders have seen wide success in domains ranging from feature selection to information retrieval. Despite this success, designing an autoencoder for a given task remains a challenging undertaking due to the lack of firm intuition on how the backing neural network architectures of the encoder and decoder impact the overall performance of the autoencoder. In this work, we present a distributed system that uses an efficient evolutionary algorithm to design a modular autoencoder. We demonstrate the effectiveness of this system on the tasks of manifold learning and image denoising. The system beats random search by nearly an order of magnitude on both tasks while achieving near-linear horizontal scaling as additional worker nodes are added to the system.
