Jayabrata Bhaduri

A Computer Vision Enabled damage detection model with improved YOLOv5 based on Transformer Prediction Head

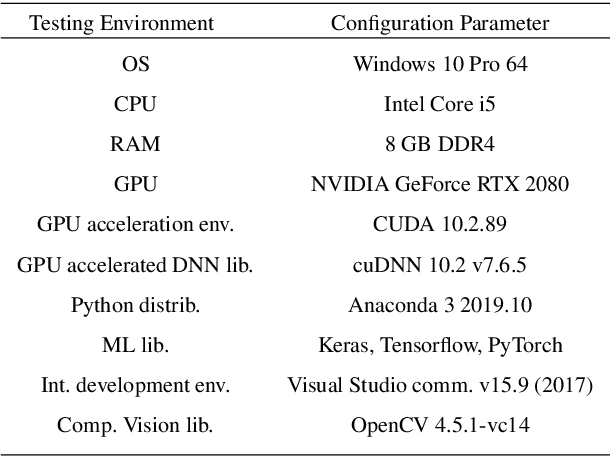

Mar 07, 2023

Abstract:

Objective: Accurate, up-to-date, computer-vision-based damage classification and localization are of decisive importance for the monitoring, safety, and serviceability of civil infrastructure. However, current state-of-the-art deep learning (DL)-based damage detection models often lack sufficiently strong feature extraction capability in complex and noisy environments, which limits accurate and reliable object discrimination.

Method: To this end, we present DenseSPH-YOLOv5, a real-time, high-performance DL-based damage detection model in which DenseNet blocks are integrated into the backbone to better preserve and reuse critical feature information. Additionally, convolutional block attention modules (CBAM) are implemented to strengthen the attention mechanism, yielding strong, discriminative deep spatial features and superior detection in various challenging environments. Moreover, additional feature fusion layers and a Swin-Transformer Prediction Head (SPH) are added, leveraging an advanced self-attention mechanism to detect objects of multiple scales more efficiently while reducing computational complexity.

Results: Evaluated on the large-scale Road Damage Dataset (RDD-2018), DenseSPH-YOLOv5 runs at a detection rate of 62.4 FPS and obtains a mean average precision (mAP) of 85.25%, an F1-score of 81.18%, and a precision (P) of 89.51%, outperforming current state-of-the-art models.

Significance: This research provides an effective and efficient damage localization model that addresses the shortcomings of existing DL-based damage detection models by producing highly accurate localized bounding-box predictions. It constitutes a step towards an accurate and robust automated damage detection system for real-time in-field applications.
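To make the attention step described in the Method paragraph more concrete, the following is a minimal NumPy sketch of a generic CBAM block in its originally proposed form: channel attention computed from average- and max-pooled descriptors passed through a shared two-layer MLP, followed by spatial attention computed by a 7×7 convolution over channel-wise average and max maps. The reduction ratio r = 4, the random weights standing in for learned parameters, and the function names are illustrative assumptions; this is not the DenseSPH-YOLOv5 implementation, whose exact CBAM placement and configuration are not given here.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def channel_attention(x, w1, w2):
    """x: (C, H, W); w1: (C//r, C); w2: (C, C//r). Returns (C, 1, 1) weights."""
    avg = x.mean(axis=(1, 2))  # global average pooling -> (C,)
    mx = x.max(axis=(1, 2))    # global max pooling -> (C,)

    def mlp(v):
        # Shared two-layer MLP with a ReLU bottleneck.
        return w2 @ np.maximum(w1 @ v, 0.0)

    return sigmoid(mlp(avg) + mlp(mx))[:, None, None]


def spatial_attention(x, kernel):
    """kernel: (2, k, k) with k odd; single output channel. Returns (1, H, W)."""
    desc = np.stack([x.mean(axis=0), x.max(axis=0)])  # (2, H, W)
    k = kernel.shape[-1]
    pad = k // 2
    padded = np.pad(desc, ((0, 0), (pad, pad), (pad, pad)))  # zero padding
    H, W = x.shape[1:]
    out = np.zeros((H, W))
    for i in range(H):
        for j in range(W):
            # Cross-correlation, as in deep-learning "convolution" layers.
            out[i, j] = np.sum(padded[:, i:i + k, j:j + k] * kernel)
    return sigmoid(out)[None, :, :]


def cbam(x, w1, w2, kernel):
    """Hypothetical CBAM: refine channels first, then spatial positions."""
    x = x * channel_attention(x, w1, w2)
    return x * spatial_attention(x, kernel)


# Usage with random stand-in weights (assumption: C=16, r=4, 7x7 kernel).
rng = np.random.default_rng(0)
C, H, W, r = 16, 8, 8, 4
x = rng.standard_normal((C, H, W))
w1 = rng.standard_normal((C // r, C)) * 0.1
w2 = rng.standard_normal((C, C // r)) * 0.1
kernel = rng.standard_normal((2, 7, 7)) * 0.1
y = cbam(x, w1, w2, kernel)
print(y.shape)  # (16, 8, 8)
```

Because both attention maps pass through a sigmoid, the block only rescales features (every weight lies between 0 and 1) and keeps the input shape, which is why CBAM can be dropped into an existing backbone without changing the surrounding layers.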

A fast accurate fine-grain object detection model based on YOLOv4 deep neural network

Oct 30, 2021

Abstract: Early identification and prevention of plant diseases in commercial farms and orchards is a key feature of precision agriculture technology. This paper presents a high-performance, real-time, fine-grain object detection framework that addresses several obstacles in plant disease detection that hinder the performance of traditional methods, such as dense distribution, irregular morphology, multi-scale object classes, and textural similarity. The proposed model is built on an improved version of the You Only Look Once (YOLOv4) algorithm. The modified network architecture maximizes both detection accuracy and speed: DenseNet is included in the backbone to optimize feature transfer and reuse; two new residual blocks in the backbone and neck enhance feature extraction and reduce computing cost; Spatial Pyramid Pooling (SPP) enlarges the receptive field; and a modified Path Aggregation Network (PANet) preserves fine-grain localized information and improves feature fusion. Additionally, using the Hard-Swish function as the primary activation improved the model's accuracy through better nonlinear feature extraction. The proposed model is tested on detecting four different diseases in tomato plants under various challenging environments, and it outperforms existing state-of-the-art detection models in both detection accuracy and speed. At a detection rate of 70.19 FPS, the proposed model obtains a precision of 90.33%, an F1-score of 93.64%, and a mean average precision (mAP) of 96.29%. This work provides an effective and efficient method for detecting different plant diseases in complex scenarios that can be extended to fruit and crop detection, generic disease detection, and various automated agricultural detection processes.
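Two of the components named in this abstract, SPP and the Hard-Swish activation, can be sketched in a few lines of NumPy. This sketch assumes the YOLOv4-style SPP (stride-1 max pooling with kernel sizes 5, 9 and 13 and "same" padding, concatenated with the input along the channel axis) and the standard Hard-Swish definition h-swish(x) = x · ReLU6(x + 3) / 6 introduced with MobileNetV3. The kernel sizes, channel counts, function names and placement in the modified network are illustrative assumptions, not details taken from the paper.

```python
import numpy as np


def hard_swish(x):
    """Standard Hard-Swish: x * ReLU6(x + 3) / 6 (assumed definition)."""
    return x * np.clip(x + 3.0, 0.0, 6.0) / 6.0


def max_pool_same(x, k):
    """Stride-1 max pooling with 'same' padding on a float (C, H, W) array."""
    pad = k // 2
    # Pad with -inf so padded cells never win the max.
    padded = np.pad(x, ((0, 0), (pad, pad), (pad, pad)), constant_values=-np.inf)
    _, H, W = x.shape
    out = np.empty_like(x)
    for i in range(H):
        for j in range(W):
            out[:, i, j] = padded[:, i:i + k, j:j + k].max(axis=(1, 2))
    return out


def spp(x, kernels=(5, 9, 13)):
    """Hypothetical YOLOv4-style SPP: concat input with multi-scale pooled maps."""
    return np.concatenate([x] + [max_pool_same(x, k) for k in kernels], axis=0)


# Usage (assumption: an 8-channel 13x13 feature map).
rng = np.random.default_rng(0)
x = rng.standard_normal((8, 13, 13))
y = spp(x)
print(y.shape)  # (32, 13, 13)
print(hard_swish(np.array([-4.0, 0.0, 3.0, 5.0])))
```

Because the pooling uses stride 1 and "same" padding, every branch keeps the spatial size, so the outputs can simply be concatenated; the largest kernel lets each position see a wide neighborhood, which is how SPP enlarges the receptive field without downsampling. Hard-Swish approximates Swish with a piecewise-linear gate that is cheap to compute.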
