Javier Gonzalez-Trejo

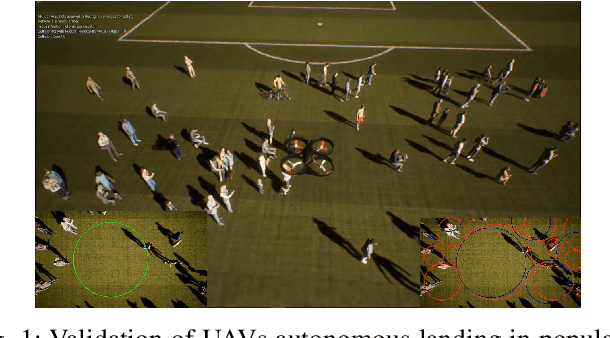

Visual-based Safe Landing for UAVs in Populated Areas: Real-time Validation in Virtual Environments

Mar 25, 2022

Abstract: Safe autonomous landing for Unmanned Aerial Vehicles (UAVs) in populated areas is crucial for successful urban deployment, particularly in emergency landing situations. Nonetheless, validating autonomous landing in real scenarios is a challenging task that carries a high risk of injuring people. In this work, we propose a framework for safe and thorough real-time evaluation of vision-based autonomous landing in populated scenarios, using photo-realistic virtual environments. We propose to use the Unreal graphics engine coupled with the AirSim plugin for drone simulation, and evaluate autonomous landing strategies based on visual detection of Safe Landing Zones (SLZ) in populated scenarios. We then study two different criteria for selecting the "best" SLZ, and evaluate them during autonomous landing of a virtual drone in different scenarios and conditions, under different distributions of people in urban scenes, including moving people. We evaluate different metrics to quantify the performance of the landing strategies, establishing a baseline for comparison with future work on this challenging task, and analyze them over a large number of randomized iterations. The study suggests that the autonomous landing algorithms considerably help to prevent accidents involving humans, which may help unleash the full potential of drones in urban environments near people.

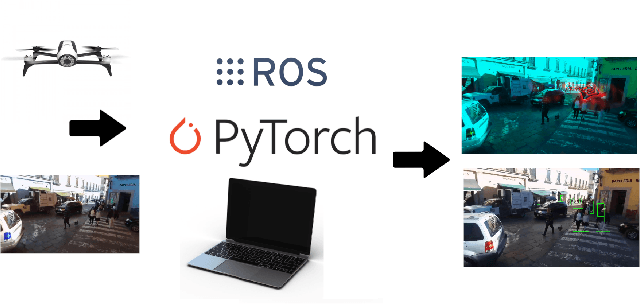

Dense Crowds Detection and Surveillance with Drones using Density Maps

Mar 03, 2020

Abstract: Detecting and counting people in a human crowd from a moving drone present challenging problems that arise from the constant changing in the image perspective and camera angle. In this paper, we test two different state-of-the-art approaches, density map generation with VGG19 trained with the Bayes loss function and detect-then-count with Faster RCNN with ResNet50-FPN as backbone, in order to compare their precision for counting and detecting people in different real scenarios taken from a drone flight. We show empirically that both proposed methodologies perform especially well for detecting and counting people in sparse crowds when the drone is near the ground. Nevertheless, VGG19 provides better precision on both tasks while also being lighter than Faster RCNN. Furthermore, VGG19 outperforms Faster RCNN when dealing with dense crowds, proving to be more robust to scale variations and strong occlusions, being more suitable for surveillance applications using drones.
