Javier Civit-Masot

Energy efficiency in Edge TPU vs. embedded GPU for computer-aided medical imaging segmentation and classification

Nov 20, 2023

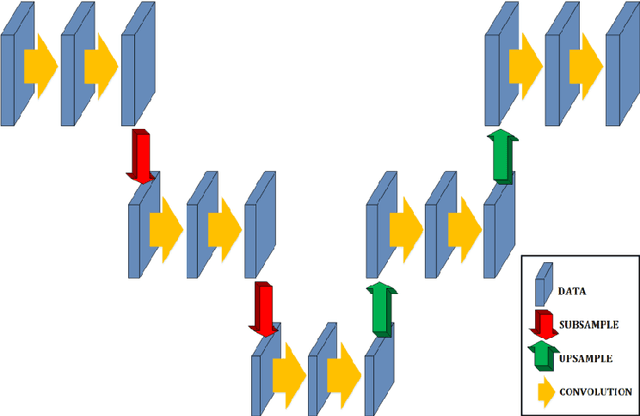

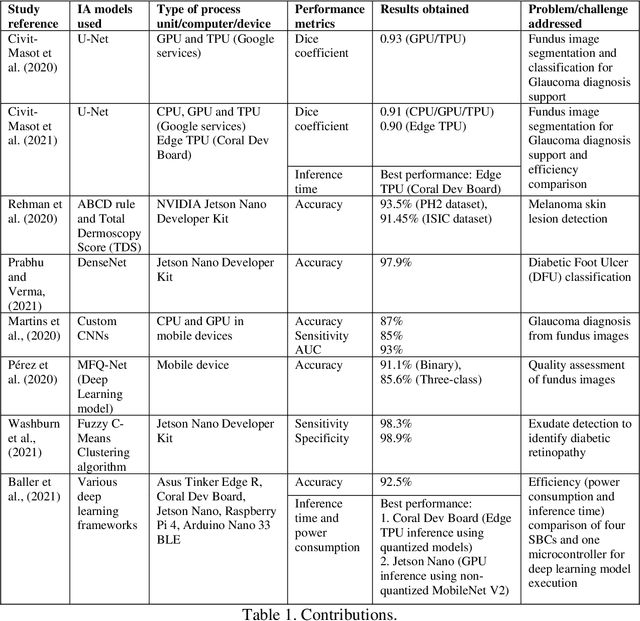

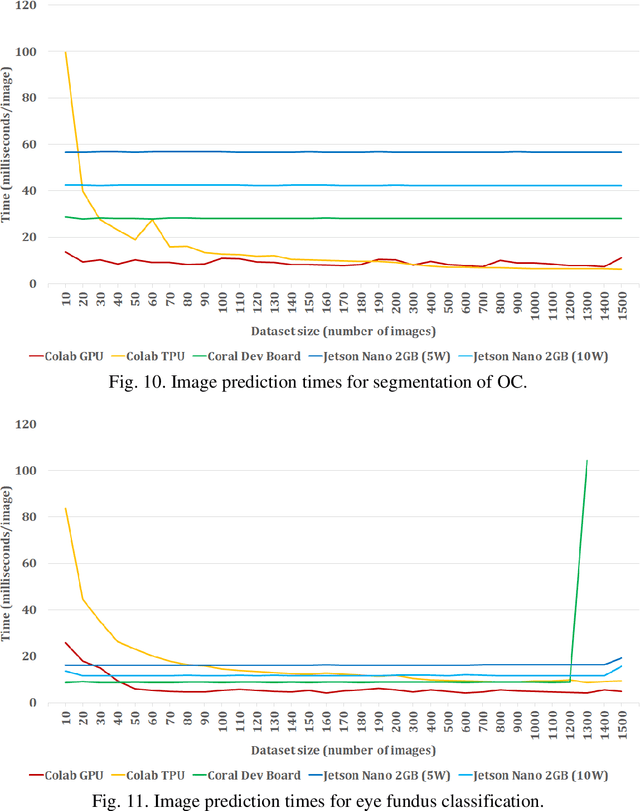

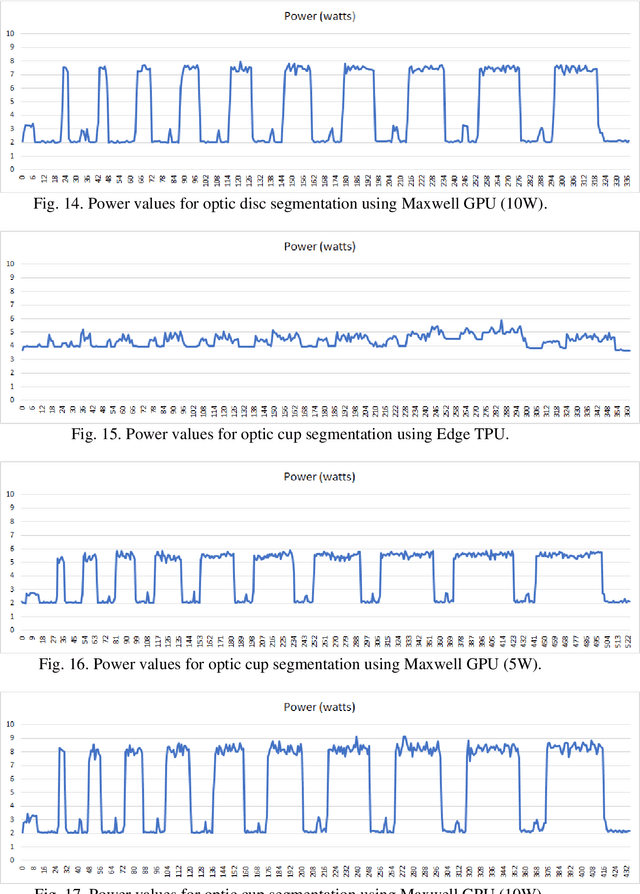

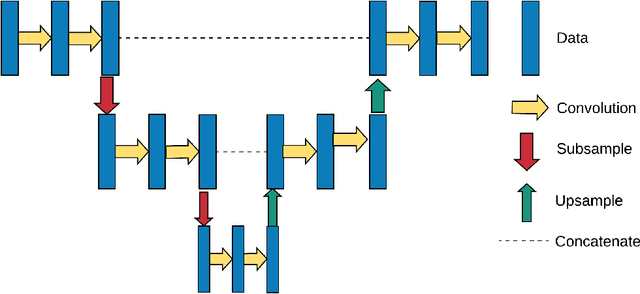

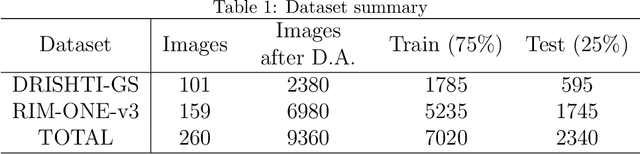

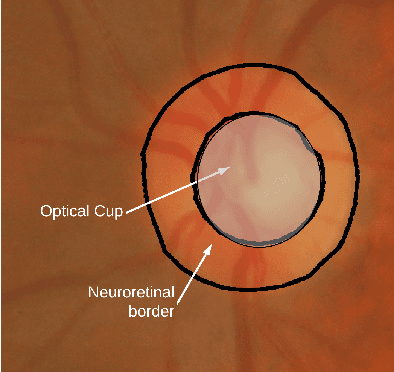

Abstract: In this work, we evaluate the energy usage of fully embedded medical diagnosis aids based on both segmentation and classification of medical images, implemented on Edge TPU and embedded GPU processors. We use glaucoma diagnosis based on color fundus images as an example to show that segmentation and classification can be performed in real time on embedded boards and to highlight the different energy requirements of the studied implementations. Several other works use segmentation and feature extraction techniques with deep neural networks to detect glaucoma, among many other pathologies. The memory limitations and low processing capabilities of embedded accelerated systems (EAS) limit their use for training deep network-based systems. However, including specific acceleration hardware, such as NVIDIA's Maxwell GPU or Google's Edge TPU, enables them to perform inference with complex pre-trained networks in very reasonable times. In this study, we evaluate the timing and energy performance of two EAS equipped with Machine Learning (ML) accelerators executing an example diagnostic tool developed in a previous work. For optic disc (OD) and cup (OC) segmentation, the obtained prediction times per image are under 29 and 43 ms using Edge TPUs and Maxwell GPUs, respectively. Prediction times for the classification subsystem are lower than 10 and 14 ms for Edge TPUs and Maxwell GPUs, respectively. Regarding energy usage, in approximate terms, for OD segmentation Edge TPUs and Maxwell GPUs use 38 and 190 mJ per image, respectively. For fundus classification, Edge TPUs and Maxwell GPUs use 45 and 70 mJ per image, respectively.

* 37 pages, 20 figures. Accepted manuscript. Published in "Engineering Applications of Artificial Intelligence" (Elsevier), ISSN 0952-1976
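To put the energy figures quoted in the abstract side by side, the following is a minimal sketch that computes the GPU-to-TPU energy ratio and the number of images processed per joule for each task. The dictionary layout and the function names (`energy_ratio`, `images_per_joule`) are illustrative assumptions, not part of the paper; the numeric values are the approximate per-image energies stated in the abstract.

```python
# Approximate per-image energy in millijoules, as quoted in the abstract.
# The data layout and helper names are hypothetical, chosen for illustration.
ENERGY_MJ = {
    "OD segmentation": {"Edge TPU": 38.0, "Maxwell GPU": 190.0},
    "fundus classification": {"Edge TPU": 45.0, "Maxwell GPU": 70.0},
}


def energy_ratio(task: str) -> float:
    """Return how many times more energy the Maxwell GPU uses than the Edge TPU."""
    figures = ENERGY_MJ[task]
    return figures["Maxwell GPU"] / figures["Edge TPU"]


def images_per_joule(task: str, device: str) -> float:
    """Return the number of images processed per joule (1 J = 1000 mJ)."""
    return 1000.0 / ENERGY_MJ[task][device]


if __name__ == "__main__":
    for task, figures in ENERGY_MJ.items():
        print(f"{task}: GPU/TPU energy ratio = {energy_ratio(task):.2f}")
        for device in figures:
            rate = images_per_joule(task, device)
            print(f"  {device}: {rate:.1f} images per joule")
```

Under these figures, the Maxwell GPU uses roughly 5 times the energy of the Edge TPU per image for OD segmentation, but only about 1.6 times for fundus classification. Because the abstract gives the values "in approximate terms", these ratios should be read as rough indications only.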

A Study on the Use of Edge TPUs for Eye Fundus Image Segmentation

Jul 26, 2022

Abstract: Medical image segmentation can be implemented using Deep Learning methods with fast and efficient segmentation networks. Single-board computers (SBCs) are difficult to use for training deep networks because of their memory and processing limitations. Specific hardware such as Google's Edge TPU makes them suitable for real-time predictions using complex pre-trained networks. In this work, we study the performance of two SBCs, with and without hardware acceleration, for fundus image segmentation, though the conclusions of this study can be applied to deep neural network segmentation of other types of medical images. To test the benefits of hardware acceleration, we use networks and datasets from a previously published work and generalize them by testing with a dataset of thyroid ultrasound images. We measure prediction times on both SBCs and compare them with a cloud-based TPU system. The results show the feasibility of Machine Learning accelerated SBCs for optic disc and cup segmentation, obtaining times below 25 milliseconds per image using Edge TPUs.

* Preprint of paper published in Engineering Applications of Artificial Intelligence
