Javad Mohammadpour Vehni

Co-supervised learning paradigm with conditional generative adversarial networks for sample-efficient classification

Dec 27, 2022

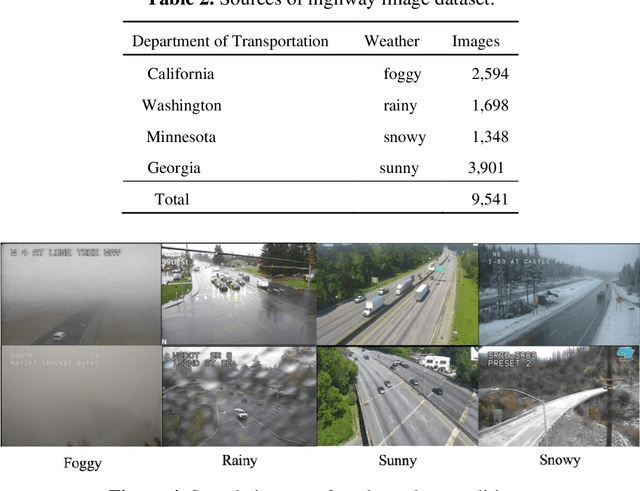

Abstract: Classification using supervised learning requires annotating a large amount of class-balanced data for model training and testing. In practice, this has limited the scope of applications of supervised learning, and of deep learning in particular. To address the issues associated with limited and imbalanced data, this paper introduces a sample-efficient co-supervised learning paradigm (SEC-CGAN), in which a conditional generative adversarial network (CGAN) is trained alongside the classifier and supplements the annotated data with semantics-conditioned, confidence-aware synthesized examples during the training process. In this setting, the CGAN not only serves as a co-supervisor but also provides complementary quality examples to aid classifier training in an end-to-end fashion. Experiments demonstrate that the proposed SEC-CGAN outperforms the external classifier GAN (EC-GAN) and a baseline ResNet-18 classifier. For the comparison, all classifiers in the above methods adopt the ResNet-18 architecture as the backbone. In particular, for the Street View House Numbers dataset, using 5% of the training data, SEC-CGAN achieves a test accuracy of 90.26%, as opposed to 88.59% for EC-GAN and 87.17% for the baseline classifier; for the highway image dataset, using 10% of the training data, SEC-CGAN achieves a test accuracy of 98.27%, compared to 97.84% for EC-GAN and 95.52% for the baseline classifier.
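The abstract does not spell out how "confidence-aware" synthesized examples are chosen. As a rough illustration only, the sketch below assumes one plausible rule: a generated example is kept when the classifier's top prediction agrees with the label the generator was conditioned on and the predicted probability meets a threshold. The function name `select_confident_synthetic`, the threshold value, and the toy probabilities are all hypothetical and are not taken from the paper.

```python
from typing import List, Sequence


def select_confident_synthetic(
    probs: Sequence[Sequence[float]],
    cond_labels: Sequence[int],
    threshold: float = 0.9,
) -> List[int]:
    """Return indices of synthesized examples to add to the training batch.

    Assumed rule (not taken from the paper): keep an example only if the
    classifier's top prediction equals the label the generator was
    conditioned on, and the probability of that prediction is >= threshold.
    """
    kept = []
    for i, (p, y) in enumerate(zip(probs, cond_labels)):
        # Index of the class with the highest predicted probability.
        top = max(range(len(p)), key=lambda k: p[k])
        if top == y and p[top] >= threshold:
            kept.append(i)
    return kept


# Hypothetical classifier outputs for four generated images (3 classes).
probs = [
    [0.95, 0.03, 0.02],  # confident, matches conditioning label 0
    [0.50, 0.30, 0.20],  # matches label 0 but below the threshold
    [0.05, 0.92, 0.03],  # confident, but generator was conditioned on class 2
    [0.01, 0.01, 0.98],  # confident, matches conditioning label 2
]
cond_labels = [0, 0, 2, 2]
selected = select_confident_synthetic(probs, cond_labels, threshold=0.9)
print(selected)
```

In a full training loop, the selected synthesized examples would be appended to the annotated batch before the classifier update; that loop, and the CGAN itself, are outside the scope of this sketch.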

* 14 pages, 5 figures
