Jasper Rou

Convergence of the generalization error for deep gradient flow methods for PDEs

Dec 31, 2025

Abstract: The aim of this article is to provide a firm mathematical foundation for the application of deep gradient flow methods (DGFMs) to the solution of (high-dimensional) partial differential equations (PDEs). We decompose the generalization error of DGFMs into an approximation error and a training error. We first show that the solutions of PDEs that satisfy reasonable and verifiable assumptions can be approximated by neural networks, so the approximation error tends to zero as the number of neurons tends to infinity. Then, we derive the gradient flow that the training process follows in the "wide network limit" and analyze the limit of this flow as the training time tends to infinity. Combined, these results show that the generalization error of DGFMs tends to zero as the number of neurons and the training time tend to infinity.

Time Deep Gradient Flow Method for pricing American options

Jul 23, 2025

Abstract: In this research, we explore neural network-based methods for pricing multidimensional American put options under the Black-Scholes and Heston models, extending up to five dimensions. We focus on two approaches: the Time Deep Gradient Flow (TDGF) method and the Deep Galerkin Method (DGM). We extend the TDGF method to handle the free-boundary partial differential equation inherent in American options. We carefully design the sampling strategy during training to enhance performance. Both TDGF and DGM achieve high accuracy while outperforming conventional Monte Carlo methods in terms of computational speed. In particular, TDGF tends to be faster during training than DGM.

Error Analysis of Deep PDE Solvers for Option Pricing

May 08, 2025

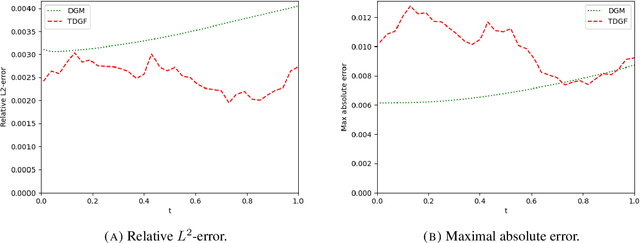

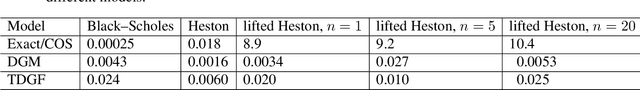

Abstract: Option pricing often requires solving partial differential equations (PDEs). Although deep learning-based PDE solvers have recently emerged as quick solutions to this problem, their empirical and quantitative accuracy remains poorly understood, hindering their real-world applicability. In this research, our aim is to offer actionable insights into the utility of deep PDE solvers for practical option pricing implementation. Through comparative experiments in both the Black-Scholes and the Heston models, we assess the empirical performance of two neural network algorithms for solving PDEs: the Deep Galerkin Method (DGM) and the Time Deep Gradient Flow (TDGF) method. We determine their empirical convergence rates and training times as functions of (i) the number of sampling stages, (ii) the number of samples, (iii) the number of layers, and (iv) the number of nodes per layer. For the TDGF, we also consider the order of the discretization scheme and the number of time steps.
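
As an illustration of what an empirical convergence rate means here, the sketch below fits the slope of log(error) against log(sample count) by least squares. It is a minimal sketch only: the function name `empirical_rate` and the synthetic error values are assumptions made for illustration, not the paper's procedure or its data.

```python
import math

def empirical_rate(sizes, errors):
    """Estimate p in error ~ C * size^(-p) by least squares on a log-log scale."""
    xs = [math.log(n) for n in sizes]
    ys = [math.log(e) for e in errors]
    x_mean = sum(xs) / len(xs)
    y_mean = sum(ys) / len(ys)
    cov = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    var = sum((x - x_mean) ** 2 for x in xs)
    # The fitted slope is negative when the error decays, so flip the sign.
    return -cov / var

# Synthetic errors decaying like n^(-1/2); these are NOT results from the paper.
sizes = [100, 400, 1600, 6400]
errors = [2.0 * n ** -0.5 for n in sizes]
rate = empirical_rate(sizes, errors)
print(f"estimated rate: {rate:.3f}")
```

The same fit can be repeated with the number of layers, nodes per layer, or time steps on the horizontal axis, provided the measured errors are actually in the regime where a power law is a reasonable description.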

A time-stepping deep gradient flow method for option pricing in diffusion models

Mar 01, 2024

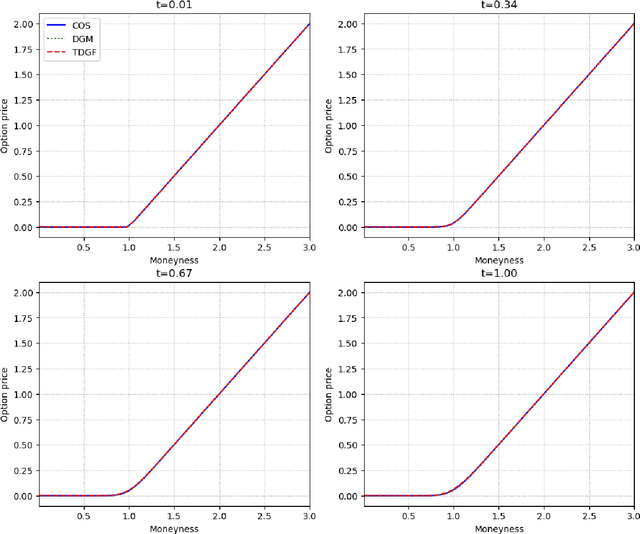

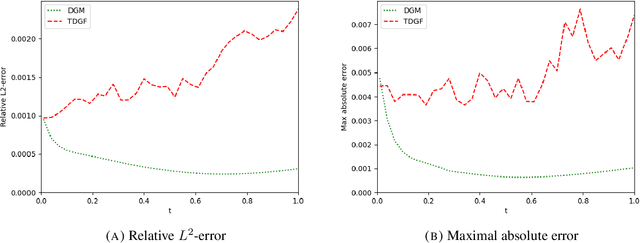

Abstract: We develop a novel deep learning approach for pricing European options in diffusion models that can efficiently handle high-dimensional problems resulting from Markovian approximations of rough volatility models. The option pricing partial differential equation is reformulated as an energy minimization problem, which is approximated in a time-stepping fashion by deep artificial neural networks. The proposed scheme respects the asymptotic behavior of option prices for large levels of moneyness and adheres to a priori known bounds for option prices. The accuracy and efficiency of the proposed method are assessed in a series of numerical examples, with particular focus on the lifted Heston model.
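
The idea of replacing a time step of a PDE by an energy minimization can be illustrated on a toy problem. The sketch below is an assumption-laden illustration, not the paper's scheme: it uses the 1D heat equation u_t = u_xx with zero boundary values instead of an option pricing PDE, and plain grid values with gradient descent instead of a neural network. One backward Euler step of size h is the minimizer of the energy 1/2 ||u - u_prev||^2 + (h/2) ||u_x||^2, and the code checks that gradient descent on the discretized energy reproduces the direct linear solve. All function names are hypothetical.

```python
import math

def energy_gradient(u, prev, h, dx):
    """Gradient (divided by dx) of 0.5*||u - prev||^2 + 0.5*h*||u_x||^2 on a grid."""
    m = len(u)
    grad = []
    for i in range(m):
        left = u[i - 1] if i > 0 else 0.0       # zero Dirichlet boundary
        right = u[i + 1] if i < m - 1 else 0.0  # zero Dirichlet boundary
        grad.append((u[i] - prev[i]) + h * (2.0 * u[i] - left - right) / dx ** 2)
    return grad

def minimize_step(prev, h, dx, iters=500):
    """One time step obtained by gradient descent on the energy."""
    lr = 1.0 / (1.0 + 4.0 * h / dx ** 2)  # 1 / (upper bound on the Hessian eigenvalues)
    u = list(prev)
    for _ in range(iters):
        g = energy_gradient(u, prev, h, dx)
        u = [ui - lr * gi for ui, gi in zip(u, g)]
    return u

def backward_euler_step(prev, h, dx):
    """Reference: solve (I + h*K) u = prev with the Thomas algorithm."""
    r = h / dx ** 2
    m = len(prev)
    diag = 1.0 + 2.0 * r
    c = [0.0] * m
    d = [0.0] * m
    c[0] = -r / diag
    d[0] = prev[0] / diag
    for i in range(1, m):
        denom = diag + r * c[i - 1]
        c[i] = -r / denom
        d[i] = (prev[i] + r * d[i - 1]) / denom
    u = [0.0] * m
    u[-1] = d[-1]
    for i in range(m - 2, -1, -1):
        u[i] = d[i] - c[i] * u[i + 1]
    return u

m, h = 19, 0.01                      # interior grid points and time step (illustrative)
dx = 1.0 / (m + 1)
u0 = [math.sin(math.pi * (i + 1) * dx) for i in range(m)]
u_min = minimize_step(u0, h, dx)
u_ref = backward_euler_step(u0, h, dx)
max_diff = max(abs(a - b) for a, b in zip(u_min, u_ref))
print(f"max difference between minimizer and linear solve: {max_diff:.2e}")
```

In a deep learning variant of this idea, the grid values would be replaced by a network evaluated at sampled points and the energy would be estimated by Monte Carlo integration; those details are outside what the abstract specifies.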
