Janne Alatalo

Improved Difference Images for Change Detection Classifiers in SAR Imagery Using Deep Learning

Mar 31, 2023

Abstract: Satellite-based Synthetic Aperture Radar (SAR) images can be used as a source of remotely sensed imagery regardless of cloud cover and the day-night cycle. However, speckle noise and varying image acquisition conditions pose a challenge for change detection classifiers. This paper proposes a new method of improving SAR image processing to produce higher-quality difference images for the classification algorithms. The method is built on a neural network-based mapping transformation function that produces artificial SAR images of a location under the requested acquisition conditions. The inputs to the model are previous SAR images of the location, imaging angle information from the SAR images, a digital elevation model, and weather conditions. The method was tested with data from a location in North-East Finland, using Sentinel-1 SAR images from the European Space Agency, weather data from the Finnish Meteorological Institute, and a digital elevation model from the National Land Survey of Finland. To verify the method, changes to the SAR images were simulated, and the performance of the proposed method was measured experimentally; it gave substantial improvements over a more conventional method of creating difference images.
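
The idea of differencing against a predicted image rather than a previous acquisition can be illustrated with a small, dependency-free sketch. Everything here is an assumption made for illustration: the abstract does not state the difference formula, so a plain subtraction in decibel scale (a common log-ratio choice for SAR) is used; the neural network is replaced by a stand-in that simply reproduces a hypothetical multiplicative `gain` caused by different acquisition conditions; and the scene, the gain, and the simulated change are synthetic.

```python
import math
import random

def to_db(image, eps=1e-10):
    """Convert linear backscatter intensities to decibels."""
    return [[10.0 * math.log10(max(v, eps)) for v in row] for row in image]

def difference_image(actual_db, reference_db):
    """Pixel-wise difference of two co-registered images in decibel scale."""
    return [[a - r for a, r in zip(row_a, row_r)]
            for row_a, row_r in zip(actual_db, reference_db)]

random.seed(0)
size = 16
# Synthetic scene: hypothetical backscatter values, not real Sentinel-1 data.
scene = [[random.uniform(0.05, 0.5) for _ in range(size)] for _ in range(size)]

gain = 1.5  # hypothetical effect of different acquisition conditions
changed = {(r, c) for r in range(4, 8) for c in range(4, 8)}  # simulated change

previous = scene  # earlier acquisition under the old conditions
# Stand-in for the model output: the scene as it would look under the new conditions.
predicted = [[v * gain for v in row] for row in scene]
# New acquisition: new conditions plus a simulated drop in backscatter.
actual = [[v * gain * (0.25 if (r, c) in changed else 1.0)
           for c, v in enumerate(row)] for r, row in enumerate(scene)]

conventional = difference_image(to_db(actual), to_db(previous))
proposed = difference_image(to_db(actual), to_db(predicted))

def mean_abs(diff, keep):
    """Mean absolute difference over the pixels selected by keep(r, c)."""
    vals = [abs(diff[r][c]) for r in range(size) for c in range(size) if keep(r, c)]
    return sum(vals) / len(vals)

def is_unchanged(r, c):
    return (r, c) not in changed

def is_changed(r, c):
    return (r, c) in changed

print("conventional, unchanged area:", mean_abs(conventional, is_unchanged))
print("predicted ref, unchanged area:", mean_abs(proposed, is_unchanged))
print("predicted ref, changed area:  ", mean_abs(proposed, is_changed))
```

In this toy setup the conventional difference image carries the acquisition-condition offset everywhere, while the difference against the predicted image is non-zero only where the change was simulated. This holds by construction, because the stand-in prediction is perfect, and says nothing about the accuracy of the actual model.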

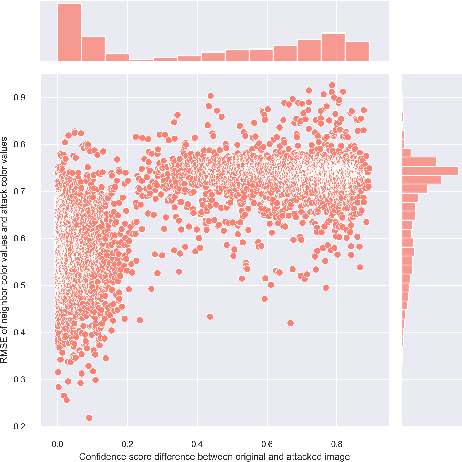

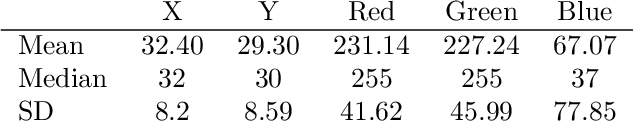

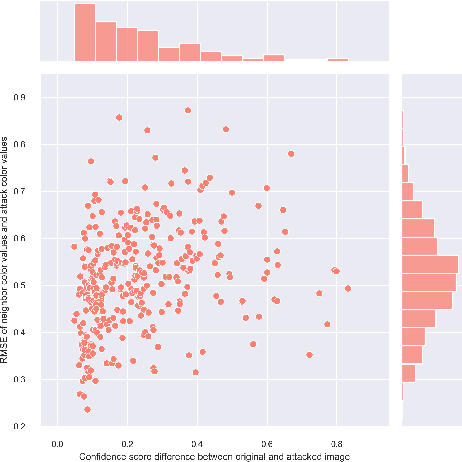

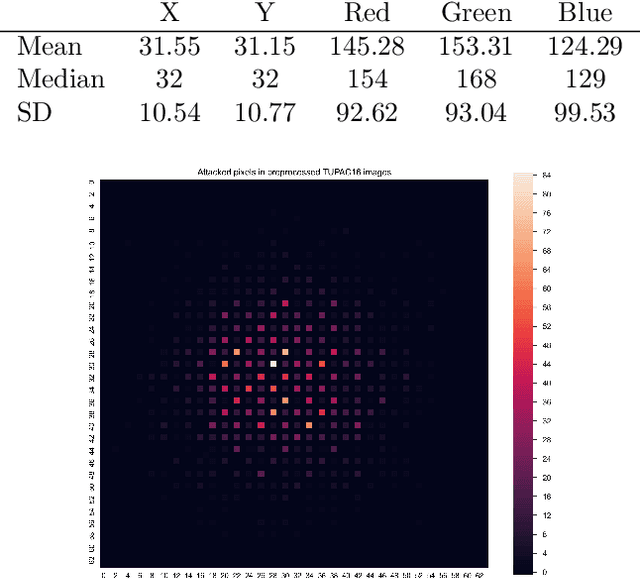

Chromatic and spatial analysis of one-pixel attacks against an image classifier

Jun 07, 2021

Abstract: The one-pixel attack is a curious way of deceiving a neural network classifier by changing only one pixel in the input image. The full potential and boundaries of this attack method are not yet fully understood. In this research, successful and unsuccessful attacks are studied in more detail to illustrate the working mechanisms of a one-pixel attack created using differential evolution. The data comes from our earlier studies, in which we applied the attack to medical imaging. We used a real breast cancer tissue dataset and a real classifier as the attack target. This research presents ways to analyze the chromatic and spatial distributions of one-pixel attacks. In addition, we present one-pixel attack confidence maps to illustrate the behavior of the target classifier. We show that the more effective attacks change the color of the pixel more, and that the successful attacks are situated at the center of the images. This kind of analysis is useful for understanding not only the behavior of the attack but also the qualities of the classifying neural network.
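
A one-pixel attack driven by differential evolution can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the setup used in the research: `toy_confidence` is a hypothetical stand-in classifier whose confidence grows with centre-weighted brightness, the 8x8 grey image is synthetic, and the DE variant (rand/1/bin with hypothetical parameters `pop_size`, `generations`, `f`, `cr`) is a generic textbook choice. Each candidate encodes one pixel as `(x, y, r, g, b)`, and the search minimises the classifier's confidence.

```python
import math
import random

SIZE = 8  # hypothetical image side length
BOUNDS = [(0, SIZE - 1), (0, SIZE - 1), (0, 255), (0, 255), (0, 255)]

def toy_confidence(image):
    """Stand-in classifier: confidence grows with centre-weighted brightness."""
    centre = (SIZE - 1) / 2
    score = 0.0
    for y in range(SIZE):
        for x in range(SIZE):
            weight = 1.0 / (1.0 + (x - centre) ** 2 + (y - centre) ** 2)
            score += weight * (sum(image[y][x]) / (3 * 255) - 0.5)
    return 1.0 / (1.0 + math.exp(-score))

def apply_pixel(image, candidate):
    """Return a copy of the image with one pixel replaced by candidate (x, y, r, g, b)."""
    x, y, r, g, b = (int(round(v)) for v in candidate)
    attacked = [row[:] for row in image]
    attacked[y][x] = (r, g, b)
    return attacked

def one_pixel_attack(image, pop_size=20, generations=30, f=0.5, cr=0.9, seed=0):
    """Search for the single-pixel change that minimises the classifier confidence."""
    rng = random.Random(seed)

    def fitness(candidate):
        return toy_confidence(apply_pixel(image, candidate))

    population = [[rng.uniform(lo, hi) for lo, hi in BOUNDS] for _ in range(pop_size)]
    scores = [fitness(cand) for cand in population]
    for _ in range(generations):
        for i in range(pop_size):
            others = [p for j, p in enumerate(population) if j != i]
            r1, r2, r3 = rng.sample(others, 3)
            forced = rng.randrange(len(BOUNDS))  # always mutate at least one gene
            trial = []
            for k, (lo, hi) in enumerate(BOUNDS):
                if k == forced or rng.random() < cr:
                    value = r1[k] + f * (r2[k] - r3[k])
                else:
                    value = population[i][k]
                trial.append(min(max(value, lo), hi))  # clip to valid range
            trial_score = fitness(trial)
            if trial_score <= scores[i]:  # greedy selection
                population[i], scores[i] = trial, trial_score
    best = min(range(pop_size), key=scores.__getitem__)
    return population[best], scores[best]

image = [[(220, 220, 220)] * SIZE for _ in range(SIZE)]  # synthetic bright image
original_confidence = toy_confidence(image)
best, attacked_confidence = one_pixel_attack(image)
attacked = apply_pixel(image, best)
changed_pixels = sum(attacked[y][x] != image[y][x]
                     for y in range(SIZE) for x in range(SIZE))
print(original_confidence, attacked_confidence, changed_pixels)
```

Because the toy classifier weights the centre most heavily, the search here tends toward dark pixels near the centre. That behaviour is built into the stand-in and should not be read as evidence for the findings described in the abstract.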
