Jakub Kuzilek

An effect analysis of the balancing techniques on the counterfactual explanations of student success prediction models

Aug 01, 2024

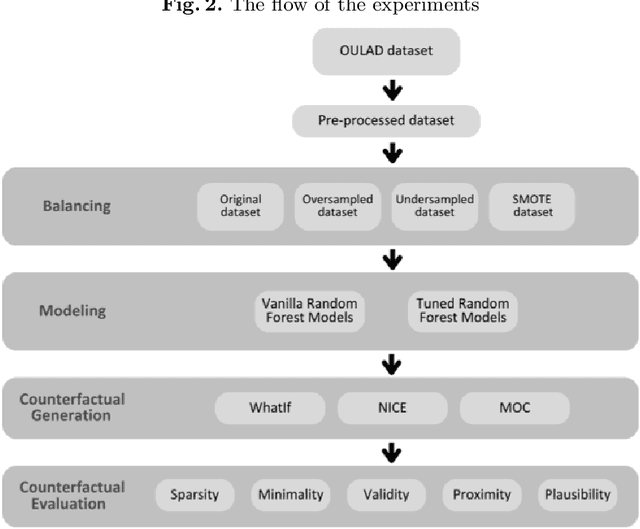

Abstract: In the past decade, the use of digital solutions in higher education has grown massively. The large amounts of data this growth has produced have enabled advanced data analysis methods that support learners and examine learning processes. One of the dominant research directions in learning analytics is predictive modeling of learners' success using various machine learning methods. To build learners' and teachers' trust in such methods and systems, it is necessary to explore methods and methodologies that enable relevant stakeholders to deeply understand the underlying machine learning models. In this context, counterfactual explanations from explainable machine learning tools are promising. Several counterfactual generation methods hold much promise, but the features must be actionable and causal for the explanations to be effective. It is therefore essential to determine which counterfactual generation method suits student success prediction models in terms of desiderata, stability, and robustness. Although a few studies on the use of counterfactual explanations in educational sciences have been published in recent years, they have yet to discuss which counterfactual generation method is most suitable for this problem. This paper analyzes the effectiveness of commonly used counterfactual generation methods, such as WhatIf Counterfactual Explanations, Multi-Objective Counterfactual Explanations, and Nearest Instance Counterfactual Explanations, after applying balancing techniques. The contribution presents a case study using the Open University Learning Analytics dataset to demonstrate the practical usefulness of counterfactual explanations. The results illustrate the method's effectiveness and describe concrete steps that could be taken to alter the model's prediction.
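To make the idea of a counterfactual explanation concrete, the sketch below searches for the nearest instance that a model predicts as the desired class and reports which feature values differ. It is a simplified nearest-unlike-neighbour search, not the Nearest Instance Counterfactual Explanations algorithm or any method evaluated in the paper. The feature names, the toy `predict` rule, and the data are all hypothetical.

```python
import math

def nearest_unlike_neighbour(instance, candidates, predict, desired):
    """Return the candidate closest to `instance` that the model labels `desired`."""
    best, best_dist = None, math.inf
    for cand in candidates:
        if predict(cand) != desired:
            continue  # only instances with the desired prediction qualify
        dist = math.dist(instance, cand)  # Euclidean distance
        if dist < best_dist:
            best, best_dist = cand, dist
    return best

# Hypothetical stand-in for a trained success-prediction model.
# Features (assumed): (average assessment score, VLE clicks per week).
def predict(x):
    return "pass" if x[0] >= 50 and x[1] >= 20 else "fail"

students = [(40, 10), (55, 25), (80, 60), (52, 21)]  # made-up reference data
query = (45, 15)                                      # student predicted to fail

counterfactual = nearest_unlike_neighbour(query, students, predict, "pass")

# Feature index -> (current value, counterfactual value) for features that differ.
changes = {i: (q, c) for i, (q, c) in enumerate(zip(query, counterfactual)) if q != c}
print(counterfactual, changes)
```

The `changes` mapping is what a stakeholder would read as "concrete steps": which feature values would need to move, and by how much, for the prediction to flip. A practical method would also have to check that those features are actionable.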

Modelling student online behaviour in a virtual learning environment

Nov 09, 2018

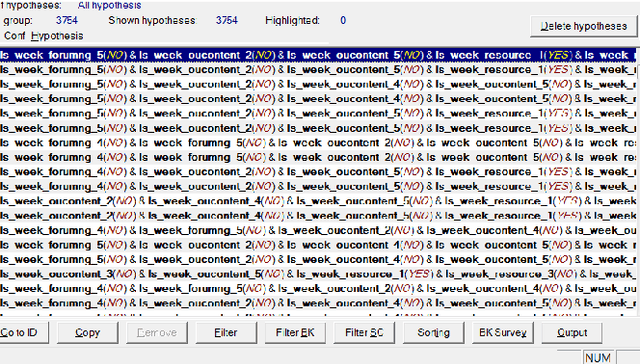

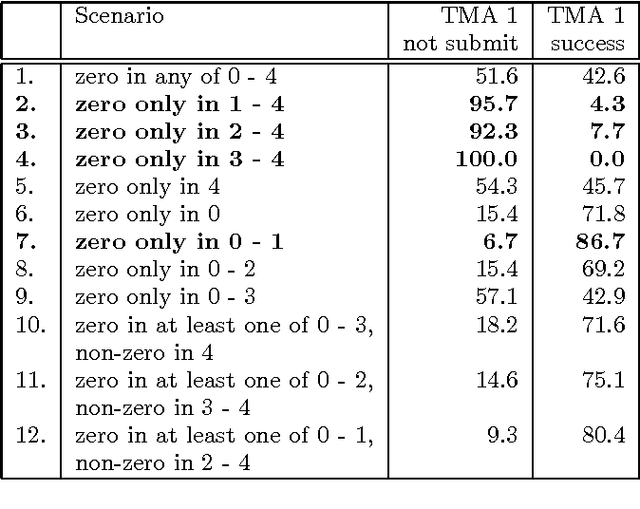

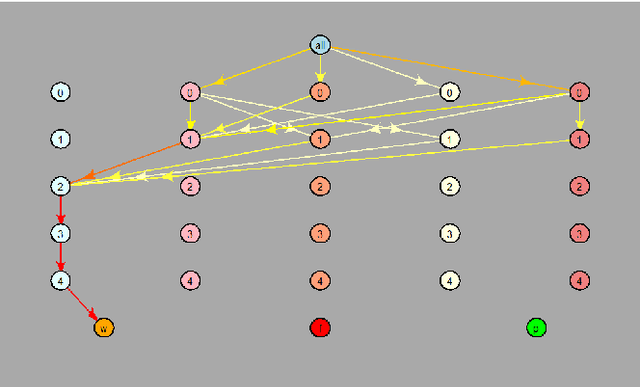

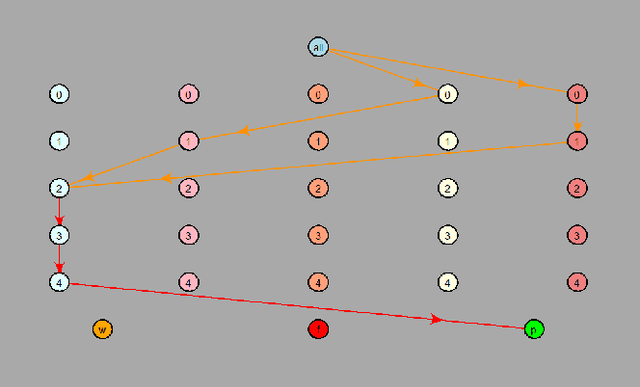

Abstract: In recent years, distance education has enjoyed a major boom. Much work at The Open University (OU) has focused on improving retention rates in its modules by providing timely support to students who are at risk of failing a module. In this paper we explore two methods for analysing student activity in an online virtual learning environment (VLE), the General Unary Hypotheses Automaton (GUHA) and Markov chain-based analysis, and we explain how this analysis can be relevant for module tutors and other student support staff. We show that both methods are valid approaches to modelling student activities. The advantages of the Markov chain-based approach are its graphical output and its ability to model time dependencies in student activities.
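As a rough illustration of how a Markov chain captures time dependencies in activity data, the sketch below estimates first-order transition probabilities from sequences of activity states. The state names and sequences are hypothetical and are not taken from the paper, whose actual state definitions may differ.

```python
from collections import Counter, defaultdict

def transition_matrix(sequences):
    """Estimate first-order Markov transition probabilities from state sequences."""
    counts = defaultdict(Counter)
    for seq in sequences:
        # Count each observed transition current -> next.
        for current, nxt in zip(seq, seq[1:]):
            counts[current][nxt] += 1
    probs = {}
    for state, nexts in counts.items():
        total = sum(nexts.values())
        # Normalise counts so each state's outgoing probabilities sum to 1.
        probs[state] = {nxt: n / total for nxt, n in nexts.items()}
    return probs

# Hypothetical weekly VLE activity states for three students.
sequences = [
    ["active", "active", "inactive", "inactive"],
    ["active", "inactive", "active", "active"],
    ["inactive", "inactive", "inactive"],
]

P = transition_matrix(sequences)
for state, row in P.items():
    print(state, row)
```

The nested dictionary `P` can be drawn directly as a directed graph with states as nodes and probabilities as edge weights, which is the kind of graphical output the abstract refers to.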
