Jairaj Sathyanarayana

Sample-Rank: Weak Multi-Objective Recommendations Using Rejection Sampling

Aug 24, 2020

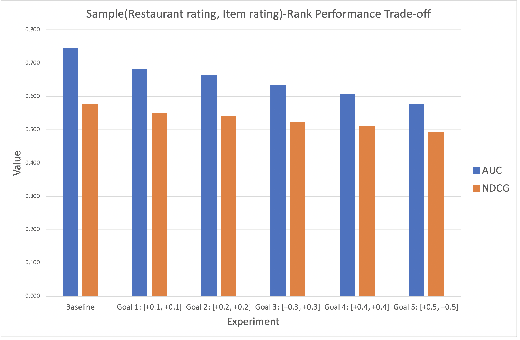

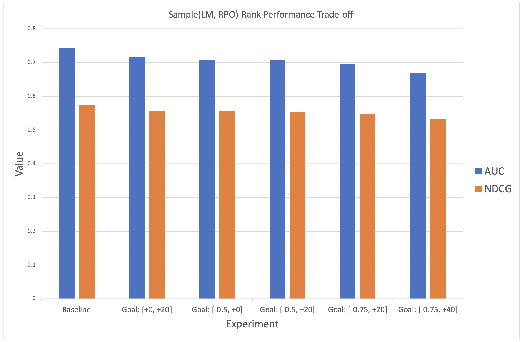

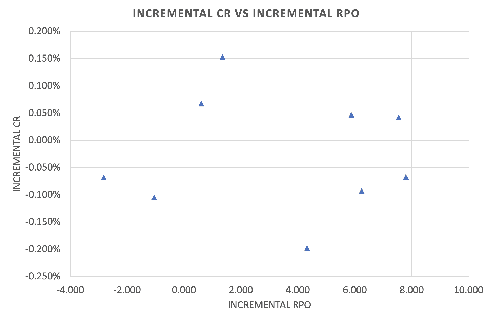

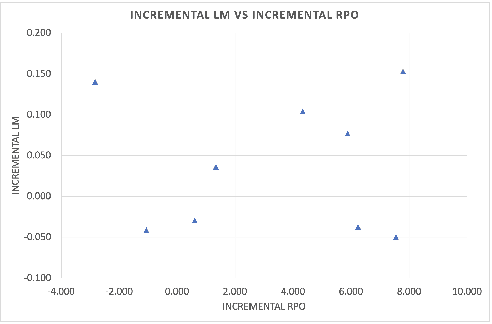

Abstract: Online food ordering marketplaces are multi-stakeholder systems where recommendations impact the experience and growth of each participant in the system. A recommender system in this setting has to encapsulate the objectives and constraints of different stakeholders in order to determine the utility of an item for recommendation. Constrained-optimization-based approaches to this problem typically involve complex formulations and have high computational complexity in production settings involving millions of entities. Simplifications and relaxation techniques (for example, scalarization) help but introduce sub-optimality and can be time-consuming due to the amount of tuning needed. In this paper, we introduce a method involving multi-goal sampling followed by ranking for user relevance (Sample-Rank) to nudge recommendations towards the multi-objective (MO) goals of the marketplace. The proposed method's novelty is that it reduces the MO recommendation problem to sampling from a desired multi-goal distribution and then using the samples to build a production-friendly learning-to-rank (LTR) model. In offline experiments, we show that we are able to bias recommendations towards MO criteria with acceptable trade-offs in metrics like AUC and NDCG. We also show results from a large-scale online A/B experiment where this approach gave a statistically significant lift of 2.64% in average revenue per order (RPO) (objective #1) with no drop in conversion rate (CR) (objective #2) while holding the average last-mile traversed flat (objective #3), vs. the baseline ranking method. This method also significantly reduces the time to model development and deployment in MO settings and allows for trivial extensions to more objectives and other types of LTR models.
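The sampling step described in the abstract can be illustrated with a minimal Python sketch. It is not the authors' implementation: the acceptance function, the caps `rev_cap` and `dist_cap`, the field names `revenue` and `distance`, and the synthetic data are all assumptions made for illustration. The idea shown is only that rejection sampling can skew a pool of training examples towards higher revenue and shorter last-mile distance before those examples are handed to an LTR model trained for user relevance.

```python
import random


def acceptance_prob(revenue, distance, rev_cap=500.0, dist_cap=10.0):
    """Hypothetical acceptance probability in [0, 1].

    Favors higher order revenue and shorter last-mile distance.
    The functional form and the caps are illustrative assumptions.
    """
    rev_score = min(revenue, rev_cap) / rev_cap
    dist_score = 1.0 - min(distance, dist_cap) / dist_cap
    return rev_score * dist_score


def rejection_sample(examples, rng):
    """Keep each example with probability acceptance_prob(...)."""
    kept = []
    for ex in examples:
        if rng.random() < acceptance_prob(ex["revenue"], ex["distance"]):
            kept.append(ex)
    return kept


def make_examples(n, rng):
    """Synthetic training examples (made-up data, not from the paper)."""
    return [
        {"revenue": rng.uniform(50.0, 500.0), "distance": rng.uniform(0.5, 10.0)}
        for _ in range(n)
    ]


def mean(examples, key):
    """Average of one numeric field over a list of examples."""
    return sum(ex[key] for ex in examples) / len(examples)


if __name__ == "__main__":
    rng = random.Random(0)
    data = make_examples(5000, rng)
    kept = rejection_sample(data, rng)
    # The kept subset would then serve as training data for an LTR model.
    print(len(kept), mean(data, "revenue"), mean(kept, "revenue"))
    print(mean(data, "distance"), mean(kept, "distance"))
```

In this sketch the multi-objective preference lives entirely in `acceptance_prob`, so adding another objective would mean multiplying in one more score term, while the downstream ranking model is left unchanged.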
