Jaeseung Lee

A Lightweight CNN-Transformer Model for Learning Traveling Salesman Problems

May 03, 2023

Abstract: Transformer-based models show state-of-the-art performance even for large-scale Traveling Salesman Problems (TSPs). However, they are based on fully connected attention models and suffer from high computational complexity and GPU memory usage. We propose a lightweight CNN-Transformer model based on a CNN embedding layer and partial self-attention. Using the CNN embedding layer, our model learns spatial features from the input data better than standard Transformer models do. It also removes considerable redundancy in fully connected attention models using the proposed partial self-attention. Experiments show that the proposed model outperforms other state-of-the-art Transformer-based models in terms of TSP solution quality, GPU memory usage, and inference time. Our model uses approximately 20% less GPU memory and runs inference 45% faster than other state-of-the-art Transformer-based models. Our code is publicly available at https://github.com/cm8908/CNN_Transformer3

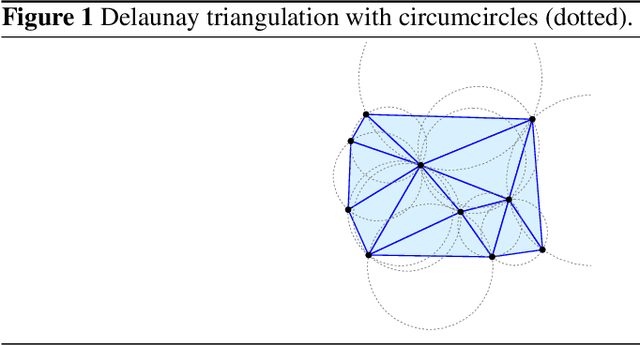

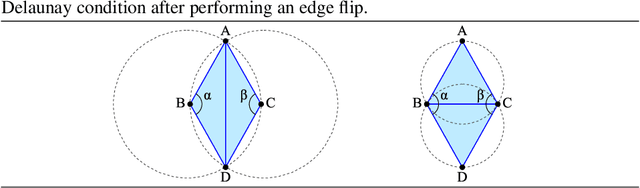

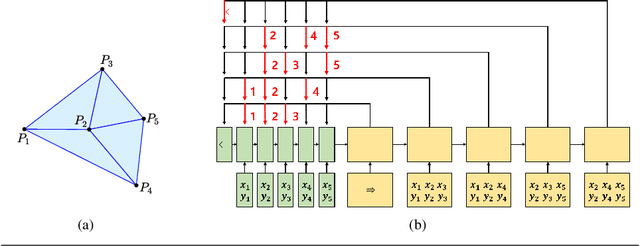

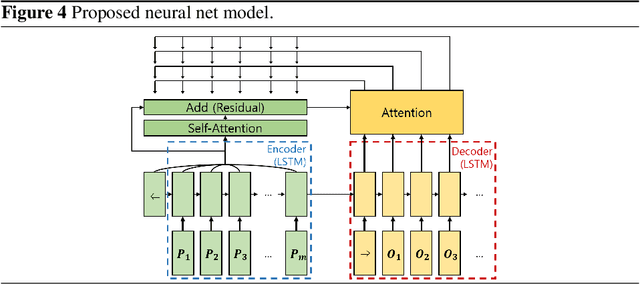

Learning Delaunay Triangulation using Self-attention and Domain Knowledge

Jul 05, 2021

Abstract: Delaunay triangulation is a well-known geometric combinatorial optimization problem with various applications. Many algorithms can generate a Delaunay triangulation from an input point set, but most are nontrivial and require an understanding of geometry or additional geometric operations, such as the edge flip. Deep learning has been used to solve various combinatorial optimization problems; however, generating Delaunay triangulations with deep learning remains difficult, and very little research has been conducted because of the problem's complexity. In this paper, we propose a novel deep-learning-based approach for learning Delaunay triangulation using a new attention mechanism based on self-attention and domain knowledge. The proposed model is designed to learn point-to-point relationships efficiently using self-attention in the encoder. In the decoder, a new attention score function using domain knowledge is proposed to impose a high penalty when the geometric requirement is not satisfied. The strength of the proposed attention score function lies in its potential to extend to other combinatorial optimization problems involving geometry. Once the proposed neural network model is well trained, it is simple and efficient because it automatically predicts the Delaunay triangulation for an input point set without requiring any additional geometric operations. We conduct experiments to demonstrate the effectiveness of the proposed model and conclude that it performs better than other deep-learning-based approaches.
