Jae-hun Shim

Core-set Sampling for Efficient Neural Architecture Search

Jul 08, 2021

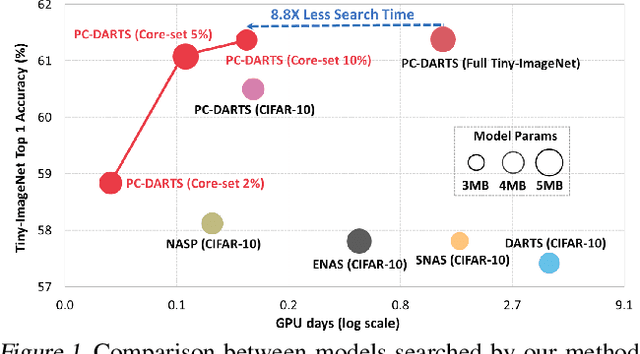

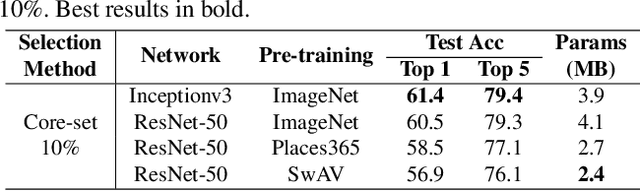

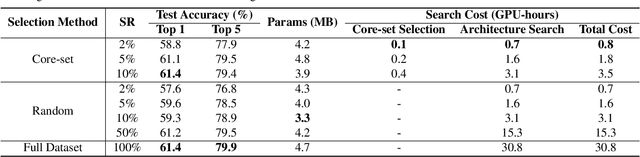

Abstract: Neural architecture search (NAS), an important branch of automated machine learning, has become an effective approach to automating the design of deep learning models. However, the major challenge in NAS is reducing the long search time imposed by its heavy computational burden. While most recent approaches focus on pruning redundant sets or developing new search methodologies, this paper instead approaches the problem from the perspective of data curation. Our key strategy is to search for the architecture using a summarized data distribution, i.e., a core-set. Many NAS algorithms separate the search and training stages, and the proposed core-set methodology is applied only in the search stage, so performance degradation can be minimized. In our experiments, we reduced the overall computational time from 30.8 hours to 3.5 hours, an 8.8x reduction, on a single RTX 3090 GPU without sacrificing accuracy.
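To make the core-set idea concrete, the following is a minimal sketch of one common selection strategy, greedy k-center (farthest-point) sampling. The abstract does not state which selection rule the paper uses, so the algorithm choice here is an assumption, and the function name `k_center_greedy`, the `seed` parameter, and the toy 2-D points standing in for per-sample feature embeddings are all hypothetical.

```python
import math
import random


def k_center_greedy(points, k, seed=0):
    """Return k indices into `points` chosen by greedy farthest-point selection.

    Assumption: `points` is a list of equal-length numeric tuples, e.g.
    feature embeddings of training samples (hypothetical, not from the paper).
    """
    n = len(points)
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and the number of points")

    # Start from a randomly chosen point.
    first = random.Random(seed).randrange(n)
    selected = [first]

    # min_dist[i] = distance from point i to its nearest selected center.
    min_dist = [math.dist(p, points[first]) for p in points]

    while len(selected) < k:
        # Pick the point that is farthest from all current centers.
        nxt = max(range(n), key=min_dist.__getitem__)
        selected.append(nxt)
        # Update nearest-center distances with the new center.
        for i, p in enumerate(points):
            d = math.dist(p, points[nxt])
            if d < min_dist[i]:
                min_dist[i] = d
    return selected


# Toy data: 200 random 2-D points standing in for sample embeddings.
rng = random.Random(42)
points = [(rng.random(), rng.random()) for _ in range(200)]
core_idx = k_center_greedy(points, k=20)
core_set = [points[i] for i in core_idx]
```

Under this sketch, only the samples indexed by `core_idx` would be fed to the search stage, while the final training stage would still use the full dataset, which matches the separation of stages described in the abstract.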
