J. Willard Curtis

Vision-Based Distributed Formation Control of Unmanned Aerial Vehicles

Sep 01, 2018

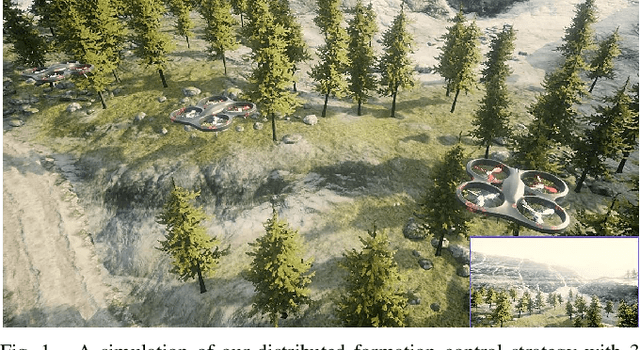

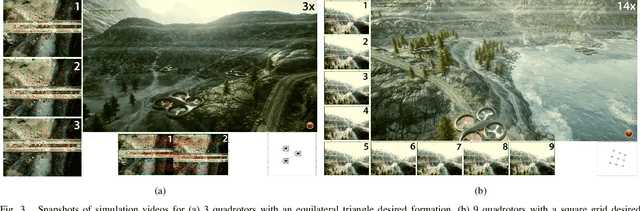

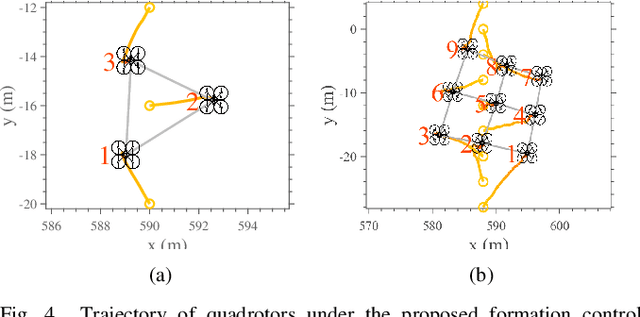

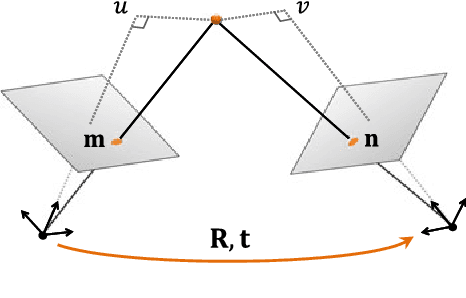

Abstract: We present a novel control strategy for a team of unmanned aerial vehicles (UAVs) to autonomously achieve a desired formation using only visual feedback provided by the UAVs' onboard cameras. This effectively eliminates the need for global position measurements. The proposed pipeline is fully distributed and includes a collision avoidance scheme. In our approach, each UAV extracts feature points from captured images and communicates their pixel coordinates and descriptors to its neighbors. These feature points are used in our novel pose estimation algorithm, QuEst, to localize the neighboring UAVs. Compared to existing methods, QuEst has better estimation accuracy and is robust to feature point degeneracies. We demonstrate the proposed pipeline in a high-fidelity simulation environment and show that UAVs can achieve a desired formation in a natural environment without any fiducial markers.

Quaternion Based Camera Pose Estimation From Matched Feature Points

Apr 11, 2017

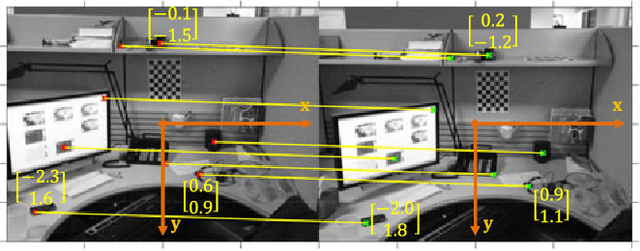

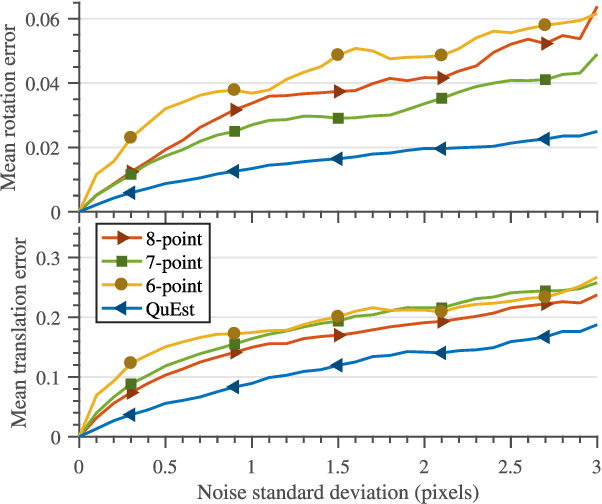

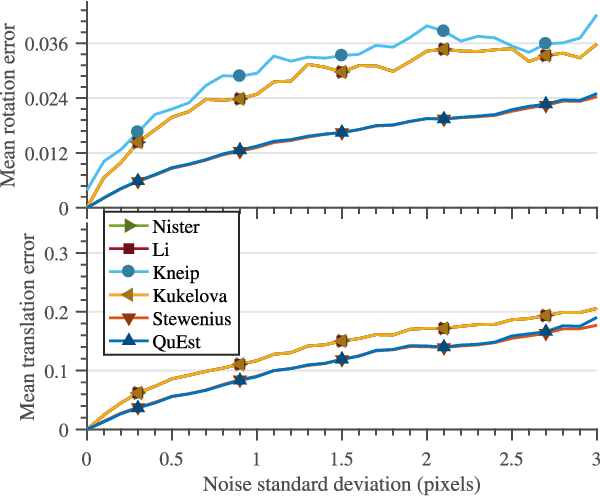

Abstract: We present a novel solution to the camera pose estimation problem, where the rotation and translation of a camera between two views are estimated from matched feature points in the images. The camera pose estimation problem is traditionally solved via algorithms that are based on the essential matrix or the Euclidean homography. With six or more feature points in general position in space, essential matrix based algorithms can recover a unique solution. However, such algorithms fail when the points lie on critical surfaces (e.g., coplanar points), and a homography should be used instead. By formulating the problem in quaternions and decoupling the rotation and translation estimation, our proposed algorithm works for all point configurations. Using both simulated and real-world images, we compare the estimation accuracy of our algorithm with some of the most commonly used algorithms. Our method is shown to be more robust to noise and outliers. For the benefit of the community, we have made the implementation of our algorithm freely available online.
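The coplanar degeneracy mentioned in the abstract can be illustrated with a small numerical sketch. This is not the QuEst algorithm and is not taken from the paper: it assumes the textbook linear (eight-point style) formulation of the epipolar constraint, and the helper names `epipolar_rows` and `matrix_rank`, the camera motion, and the synthetic scene are all hypothetical choices made for illustration. For noise-free points in general position, the linear system is expected to leave a one-dimensional solution space for the essential matrix, while for coplanar points the solution space grows and the essential matrix is no longer uniquely determined.

```python
import math
import random

def project(p):
    # Normalized image coordinates (x/z, y/z, 1) of a 3D point.
    return [p[0] / p[2], p[1] / p[2], 1.0]

def epipolar_rows(points, R, t):
    # One row per correspondence: coefficients of the 9 entries of E
    # (row-major) in the epipolar constraint x2^T E x1 = 0.
    rows = []
    for p in points:
        x1 = project(p)
        q = [sum(R[i][k] * p[k] for k in range(3)) + t[i] for i in range(3)]
        x2 = project(q)
        rows.append([x2[i] * x1[j] for i in range(3) for j in range(3)])
    return rows

def matrix_rank(rows, tol=1e-9):
    # Numerical rank via Gaussian elimination with partial pivoting.
    m = [row[:] for row in rows]
    n_rows, n_cols = len(m), len(m[0])
    r = 0
    for col in range(n_cols):
        if r == n_rows:
            break
        pivot = max(range(r, n_rows), key=lambda i: abs(m[i][col]))
        if abs(m[pivot][col]) < tol:
            continue  # column depends on the previous ones
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(r + 1, n_rows):
            f = m[i][col] / m[r][col]
            for c in range(col, n_cols):
                m[i][c] -= f * m[r][c]
        r += 1
    return r

# Assumed camera motion: rotation of 0.3 rad about the y-axis plus a translation.
a = 0.3
R = [[math.cos(a), 0.0, math.sin(a)],
     [0.0, 1.0, 0.0],
     [-math.sin(a), 0.0, math.cos(a)]]
t = [1.0, 0.2, 0.1]

rng = random.Random(0)
xy = [(rng.uniform(-2, 2), rng.uniform(-2, 2)) for _ in range(20)]

# Scene 1: points with independent random depths (general position).
general = [[x, y, rng.uniform(4, 8)] for x, y in xy]
# Scene 2: the same (x, y) samples placed on the plane z = 6 + 0.3x - 0.2y.
planar = [[x, y, 6 + 0.3 * x - 0.2 * y] for x, y in xy]

rank_general = matrix_rank(epipolar_rows(general, R, t))
rank_planar = matrix_rank(epipolar_rows(planar, R, t))
print("rank, general points: ", rank_general)
print("rank, coplanar points:", rank_planar)
```

A rank of 8 out of 9 unknowns leaves a single solution up to scale, whereas a lower rank for the planar scene means a whole family of matrices satisfies the constraints. This is the situation in which homography-based methods are traditionally used instead.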
