J. Anibal Arias-Aguilar

On the Possibility of Rewarding Structure Learning Agents: Mutual Information on Linguistic Random Sets

Oct 09, 2019

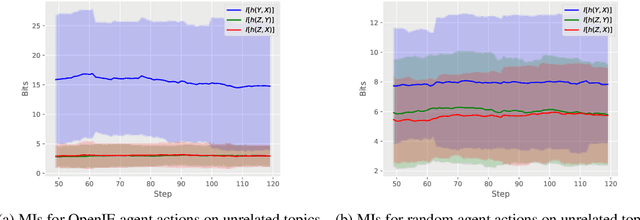

Abstract: We present a first attempt to elucidate an Information-Theoretic approach to designing the reward that a natural language environment provides to a structure learning agent. To this end, we revisit the Information Theory of unsupervised induction of phrase-structure grammars to characterize the behavior of simulated agents whose actions are described in terms of random sets of linguistic samples. Our results show empirical evidence that semantic structures (built using Open Information Extraction methods) can be distinguished from randomly constructed structures by observing the Mutual Information among their constituent linguistic random sets. This suggests that structure learning agents could be rewarded without using pretrained structural analyzers (oracle actors or experts).
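The abstract does not state how the Mutual Information is estimated, so the following is only a minimal, hypothetical sketch of the general idea. It assumes two constituent random sets can be reduced to paired discrete samples (here, invented relation-phrase and argument-head pairs of the kind Open IE triples yield). It computes the standard plug-in estimate $I(X;Y) = \sum_{x,y} p(x,y)\log_2 \frac{p(x,y)}{p(x)\,p(y)}$ and compares it with a "random structure" baseline in which the pairing is shuffled. The function names `mutual_information` and `shuffled_baseline` and the toy data are illustrative assumptions, not the authors' method or data.

```python
import math
import random
from collections import Counter


def mutual_information(pairs):
    """Plug-in (maximum-likelihood) estimate of I(X;Y), in bits, from (x, y) samples."""
    n = len(pairs)
    joint = Counter(pairs)               # counts of each (x, y) pair
    px = Counter(x for x, _ in pairs)    # marginal counts of x
    py = Counter(y for _, y in pairs)    # marginal counts of y
    mi = 0.0
    for (x, y), c in joint.items():
        p_xy = c / n
        mi += p_xy * math.log2(p_xy / ((px[x] / n) * (py[y] / n)))
    return mi


def shuffled_baseline(pairs, seed=0):
    """Hypothetical 'random structure': keep both marginals, break the pairing."""
    rng = random.Random(seed)
    ys = [y for _, y in pairs]
    rng.shuffle(ys)
    return [(x, y) for (x, _), y in zip(pairs, ys)]


# Hypothetical toy data: (relation phrase, argument head) pairs, as might be
# read off Open IE triples. Not taken from the paper.
structured = [("eats", "food"), ("eats", "food"),
              ("drives", "car"), ("drives", "car"),
              ("reads", "book"), ("reads", "book")]
shuffled = shuffled_baseline(structured)

print(f"structured MI: {mutual_information(structured):.3f} bits")  # 1.585 bits (= log2 3)
print(f"shuffled   MI: {mutual_information(shuffled):.3f} bits")
```

Shuffling preserves both marginal distributions, so any drop in MI reflects only the lost dependency between the paired sets. Note that the plug-in estimator is biased upward on small samples, so a real comparison would need far more data than this toy example.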
