J. Alex Thomasson

Selection of appropriate multispectral camera exposure settings and radiometric calibration methods for applications in phenotyping and precision agriculture

Feb 28, 2024

Abstract: Radiometric accuracy of data is crucial in quantitative precision agriculture to produce reliable and repeatable data for modeling and decision making. The effect of exposure time and gain settings on the radiometric accuracy of multispectral images has not been sufficiently explored. The goal of this study was to determine whether a fixed exposure (FE) time during image acquisition improved the radiometric accuracy of images compared to the default auto-exposure (AE) settings. This involved quantifying the errors from auto-exposure and determining the ideal exposure values within which the radiometric mean absolute percentage error (MAPE) was minimal (< 5%). The results showed that the FE orthomosaic was closer to ground truth (higher R2 and lower MAPE) than the AE orthomosaic. An ideal exposure range was determined for capturing canopy and soil objects without loss of information from under-exposure or saturation from over-exposure. A simulation of errors from AE showed that MAPE was < 5% for the blue, green, red, and NIR bands and < 7% for the red edge band for exposure settings within the determined ideal ranges, and that it increased exponentially beyond the ideal exposure upper limit. Further, predictions of total plant nitrogen uptake (g/plant) using vegetation indices (VIs) from two different growing seasons were closer to the ground truth (mostly R2 > 0.40 and MAPE = 12 to 14%, p < 0.05) when FE was used, compared to the predictions from AE images (mostly R2 < 0.13, MAPE = 15 to 18%, p >= 0.05).
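The two agreement measures named in this abstract, MAPE and R2, can be illustrated with a minimal Python sketch. The reflectance values below are made up for illustration and are not from the study, and the function names are hypothetical. R2 is computed here as the coefficient of determination, which is an assumption: the abstract does not say which definition the authors used.

```python
def mape(reference, measured):
    """Mean absolute percentage error (in percent) relative to the reference values."""
    if len(reference) != len(measured) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(abs((m - r) / r) for r, m in zip(reference, measured))
    return 100.0 * total / len(reference)


def r_squared(reference, predicted):
    """Coefficient of determination (assumed definition of R2)."""
    mean_ref = sum(reference) / len(reference)
    ss_res = sum((r - p) ** 2 for r, p in zip(reference, predicted))
    ss_tot = sum((r - mean_ref) ** 2 for r in reference)
    return 1.0 - ss_res / ss_tot


# Hypothetical ground-truth reflectance vs. image-derived reflectance.
ground_truth = [0.10, 0.20, 0.40]
from_image = [0.11, 0.19, 0.42]

error_pct = mape(ground_truth, from_image)
fit = r_squared(ground_truth, from_image)
print(f"MAPE = {error_pct:.2f}%, R2 = {fit:.4f}")
```

With these made-up numbers the MAPE is above the 5% threshold the abstract treats as minimal error, even though R2 is high, which is one reason to report both measures.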

Plastic Contaminant Detection in Aerial Imagery of Cotton Fields with Deep Learning

Dec 14, 2022

Abstract: Plastic shopping bags that get carried away from the side of roads and tangled on cotton plants can end up at cotton gins if not removed before the harvest. Such bags may not only cause problems in the ginning process but might also become embedded in cotton fibers, reducing their quality and marketable value. Therefore, the bags need to be detected, located, and removed before cotton is harvested. Manually detecting and locating these bags in cotton fields is labor-intensive, time-consuming, and costly. To address these challenges, we present the application of four variants of YOLOv5 (YOLOv5s, YOLOv5m, YOLOv5l, and YOLOv5x) for detecting plastic shopping bags using Unmanned Aircraft Systems (UAS)-acquired RGB (Red, Green, and Blue) images. We also show fixed-effect model tests of the effects of plastic bag color and YOLOv5 variant on average precision (AP), mean average precision (mAP@50), and accuracy. In addition, we demonstrate the effect of the height of plastic bags on detection accuracy. It was found that the color of bags had a significant effect (p < 0.001) on accuracy across all four variants, while it did not show any significant effect on the AP with YOLOv5m (p = 0.10) and YOLOv5x (p = 0.35) at the 95% confidence level. Similarly, YOLOv5 variant did not show any significant effect on the AP (p = 0.11) and accuracy (p = 0.73) of white bags, but it had significant effects on the AP (p = 0.03) and accuracy (p = 0.02) of brown bags, as well as on the mAP@50 (p = 0.01) and inference speed (p < 0.0001). Additionally, the height of plastic bags had a significant effect (p < 0.0001) on overall detection accuracy. The findings reported in this paper can be useful in speeding up the removal of plastic bags from cotton fields before harvest, thereby reducing the amount of contaminants that end up at cotton gins.
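The "@50" in mAP@50 refers to an intersection-over-union (IoU) threshold of 0.5: a predicted box counts as a correct detection only if its IoU with a ground-truth box reaches that threshold. The following Python sketch shows that matching rule for a single pair of boxes. The box coordinates and function names are hypothetical, and the sketch omits the rest of the mAP computation (confidence ranking and precision-recall integration).

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def is_match_at_50(predicted, ground_truth, threshold=0.5):
    """True if the predicted box overlaps the ground-truth box enough to count at IoU 0.5."""
    return iou(predicted, ground_truth) >= threshold


# Hypothetical pixel coordinates for one labeled bag and two candidate detections.
labeled_bag = (0, 0, 10, 10)
loose_detection = (5, 0, 15, 10)   # overlaps only half of the labeled box
tight_detection = (0, 0, 10, 8)    # covers most of the labeled box

print(is_match_at_50(loose_detection, labeled_bag))
print(is_match_at_50(tight_detection, labeled_bag))
```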

Assessing The Performance of YOLOv5 Algorithm for Detecting Volunteer Cotton Plants in Corn Fields at Three Different Growth Stages

Jul 31, 2022

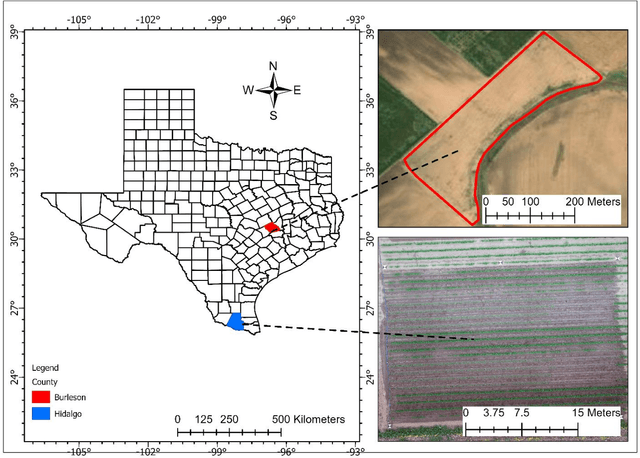

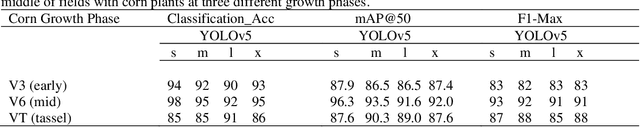

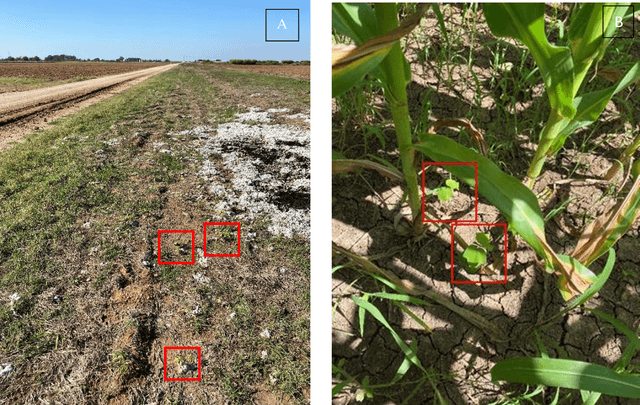

Abstract: The boll weevil (Anthonomus grandis L.) is a serious pest that primarily feeds on cotton plants. In places like the Lower Rio Grande Valley of Texas, sub-tropical climatic conditions allow cotton plants to grow year-round, so seeds left over from the previous season's harvest can continue to grow in the middle of rotation crops like corn (Zea mays L.) and sorghum (Sorghum bicolor L.). When these feral or volunteer cotton (VC) plants reach the pinhead squaring phase (5-6 leaf stage), they can act as hosts for the boll weevil. The Texas Boll Weevil Eradication Program (TBWEP) employs people to locate and eliminate VC plants growing by the side of roads or fields with rotation crops, but the ones growing in the middle of fields remain undetected. In this paper, we demonstrate the application of a computer vision (CV) algorithm based on You Only Look Once version 5 (YOLOv5) for detecting VC plants growing in the middle of corn fields at three different growth stages (V3, V6, and VT) using unmanned aircraft systems (UAS) remote sensing imagery. All four variants of YOLOv5 (s, m, l, and x) were used, and their performances were compared based on classification accuracy, mean average precision (mAP), and F1-score. It was found that YOLOv5s could detect VC plants with a maximum classification accuracy of 98% and mAP of 96.3% at the V6 stage of corn, while YOLOv5s and YOLOv5m resulted in the lowest classification accuracy of 85%, and YOLOv5m and YOLOv5l had the lowest mAP of 86.5%, at the VT stage on images of size 416 x 416 pixels. The developed CV algorithm has the potential to effectively detect and locate VC plants growing in the middle of corn fields as well as expedite the management aspects of TBWEP.
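The F1-score used to compare the YOLOv5 variants is the harmonic mean of precision and recall. A minimal Python sketch of that relationship follows. The detection counts are hypothetical and not taken from the paper, and the function name is illustrative.

```python
def precision_recall_f1(true_positives, false_positives, false_negatives):
    """Return (precision, recall, F1) from detection counts."""
    detected = true_positives + false_positives
    actual = true_positives + false_negatives
    precision = true_positives / detected if detected else 0.0
    recall = true_positives / actual if actual else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical counts: 9 VC plants found correctly, 1 false alarm, 3 plants missed.
p, r, f1 = precision_recall_f1(true_positives=9, false_positives=1, false_negatives=3)
print(f"precision = {p:.3f}, recall = {r:.3f}, F1 = {f1:.3f}")
```

Because the harmonic mean is pulled toward the smaller of the two values, a detector that misses many plants scores a low F1 even when nearly all of its detections are correct.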

Computer Vision for Volunteer Cotton Detection in a Corn Field with UAS Remote Sensing Imagery and Spot Spray Applications

Jul 15, 2022

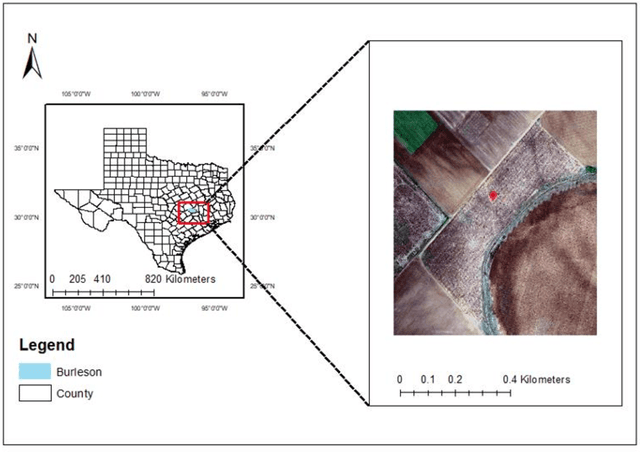

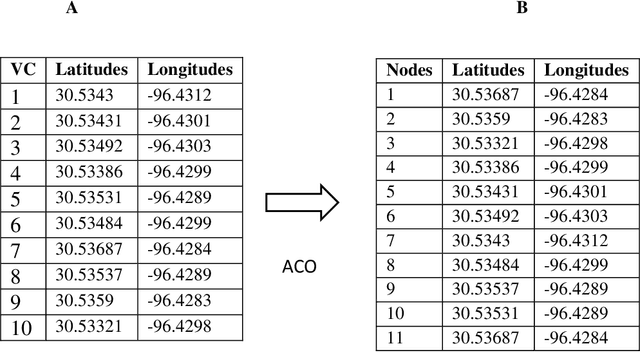

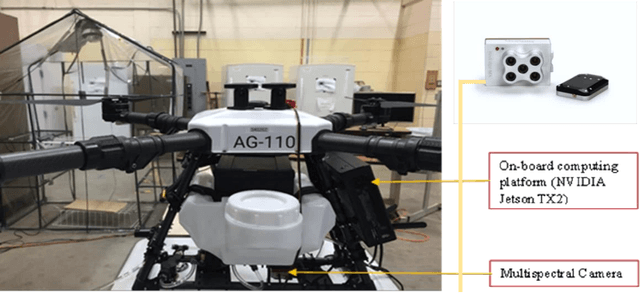

Abstract: To control boll weevil (Anthonomus grandis L.) re-infestation in cotton fields, the current practices of volunteer cotton (VC) (Gossypium hirsutum L.) plant detection in fields of rotation crops like corn (Zea mays L.) and sorghum (Sorghum bicolor L.) involve manual field scouting at the edges of fields. As a result, many VC plants growing in the middle of fields remain undetected and continue to grow alongside corn and sorghum. When they reach the pinhead squaring stage (5-6 leaves), they can serve as hosts for the boll weevil. Therefore, they need to be detected, located, and then precisely spot-sprayed with chemicals. In this paper, we present the application of YOLOv5m on radiometrically and gamma-corrected low-resolution (1.2 Megapixel) multispectral imagery for detecting and locating VC plants growing in the middle of a cornfield at the tasseling (VT) growth stage. Our results show that VC plants can be detected with a mean average precision (mAP) of 79% and classification accuracy of 78% on images of size 1207 x 923 pixels at an average inference speed of nearly 47 frames per second (FPS) on an NVIDIA Tesla P100 GPU-16GB and 0.4 FPS on an NVIDIA Jetson TX2 GPU. We also demonstrate the application of a customized unmanned aircraft system (UAS) for spot-spray applications based on the developed computer vision (CV) algorithm and how it can be used for near real-time detection and mitigation of VC plants growing in corn fields for efficient management of the boll weevil.

Detecting Volunteer Cotton Plants in a Corn Field with Deep Learning on UAV Remote-Sensing Imagery

Jul 14, 2022

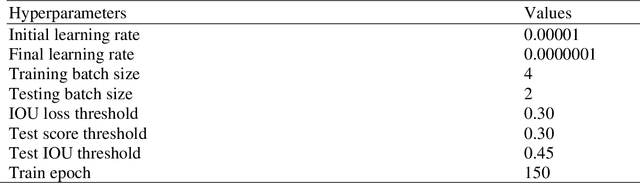

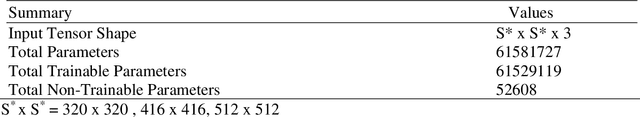

Abstract: The cotton boll weevil, Anthonomus grandis Boheman, is a serious pest to the U.S. cotton industry that has cost more than 16 billion USD in damages since it entered the United States from Mexico in the late 1800s. This pest has been nearly eradicated; however, the southern part of Texas still faces this issue and is prone to reinfestation each year due to its sub-tropical climate, where cotton plants can grow year-round. Volunteer cotton (VC) plants growing in the fields of inter-seasonal crops, like corn, can serve as hosts to these pests once they reach the pin-head square stage (5-6 leaf stage) and therefore need to be detected, located, and destroyed or sprayed. In this paper, we present a study to detect VC plants in a corn field using YOLOv3 on three-band aerial images collected by an unmanned aircraft system (UAS). The two-fold objectives of this paper were: (i) to determine whether YOLOv3 can be used for VC detection in a corn field using RGB (red, green, and blue) aerial images collected by UAS and (ii) to investigate the behavior of YOLOv3 on images at three different scales (320 x 320, S1; 416 x 416, S2; and 512 x 512, S3 pixels) based on average precision (AP), mean average precision (mAP), and F1-score at the 95% confidence level. No significant differences existed for mAP among the three scales, while a significant difference was found for AP between S1 and S3 (p = 0.04) and between S2 and S3 (p = 0.02). A significant difference was also found for F1-score between S2 and S3 (p = 0.02). The lack of significant differences in mAP across the three scales indicated that the trained YOLOv3 model can be used on a computer vision-based remotely piloted aerial application system (RPAAS) for VC detection and spray application in near real-time.
