Ioannis Xarchakos

TryOffAnyone: Tiled Cloth Generation from a Dressed Person

Dec 11, 2024

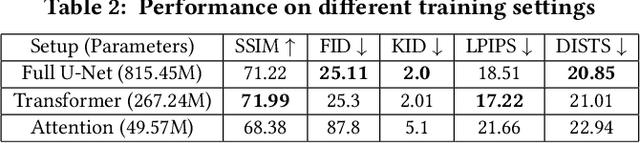

Abstract: The fashion industry is increasingly leveraging computer vision and deep learning technologies to enhance online shopping experiences and operational efficiencies. In this paper, we address the challenge of generating high-fidelity tiled garment images, which are essential for personalized recommendations, outfit composition, and virtual try-on systems, from photos of garments worn by models. Inspired by the success of Latent Diffusion Models (LDMs) in image-to-image translation, we propose a novel approach utilizing a fine-tuned Stable Diffusion model. Our method features a streamlined single-stage network design that integrates garment-specific masks to isolate and process target clothing items effectively. By simplifying the network architecture through selective training of transformer blocks and removal of unnecessary cross-attention layers, we significantly reduce computational complexity while achieving state-of-the-art performance on benchmark datasets such as VITON-HD. Experimental results demonstrate the effectiveness of our approach in producing high-quality tiled garment images for both full-body and half-body inputs. Code and model are available at: https://github.com/ixarchakos/try-off-anyone

Video Monitoring Queries

Feb 24, 2020

Abstract: Recent advances in video processing that utilize deep learning primitives have achieved breakthroughs in fundamental problems in video analysis, such as frame classification and object detection, enabling an array of new applications. In this paper, we study the problem of interactive declarative query processing on video streams. In particular, we introduce a set of approximate filters to speed up queries that involve objects of specific types (e.g., cars, trucks) on video frames, together with spatial relationships among them (e.g., car left of truck). The resulting filters quickly assess whether the query predicates are likely to hold for a frame: if so, the frame proceeds to further analysis; otherwise it is discarded, avoiding costly object detection operations. We propose two classes of filters, $IC$ and $OD$, that adapt principles from deep image classification and object detection, respectively. The filters utilize extensible deep neural architectures and are easy to deploy and use. In addition, we propose statistical query processing techniques for aggregate queries involving objects with spatial constraints on video streams, and we demonstrate experimentally the increased accuracy of the resulting aggregate estimates. Combined, these techniques constitute a robust set of video monitoring query processing techniques. We demonstrate that applying the proposed techniques in conjunction with declarative queries on video streams can dramatically increase the frame processing rate and speed up query processing by at least two orders of magnitude. We present the results of a thorough experimental study utilizing benchmark video data sets at scale, demonstrating the performance benefits and the practical relevance of our proposals.
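The filter-then-detect idea described in this abstract can be illustrated with a minimal Python sketch. It is not the paper's implementation: `run_query`, `car_left_of_truck`, and the frame and detection representations are hypothetical names chosen for illustration, and the cheap filter and detector are passed in as plain callables standing in for the $IC$/$OD$ filters and a full object detector.

```python
from typing import Any, Callable, Iterable, Iterator, List, Tuple

# Hypothetical detection format: (label, x-coordinate of the box center).
Detection = Tuple[str, float]


def car_left_of_truck(detections: List[Detection]) -> bool:
    """Spatial predicate: some car is located to the left of some truck."""
    cars = [x for label, x in detections if label == "car"]
    trucks = [x for label, x in detections if label == "truck"]
    return any(c < t for c in cars for t in trucks)


def run_query(
    frames: Iterable[Any],
    cheap_filter: Callable[[Any], bool],
    detector: Callable[[Any], List[Detection]],
    predicate: Callable[[List[Detection]], bool],
) -> Iterator[int]:
    """Yield indices of frames that satisfy the predicate.

    The expensive detector runs only on frames the cheap filter lets
    through; every other frame is skipped without detection.
    """
    for index, frame in enumerate(frames):
        if not cheap_filter(frame):
            continue  # filter says the predicate is unlikely to hold
        if predicate(detector(frame)):
            yield index
```

Because the filter in this sketch is assumed to be approximate, a frame it wrongly rejects is never seen by the detector, so the achievable speedup trades off against the filter's false-negative rate.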
