Imke van Heerden

Ways of Seeing, and Selling, AI Art

Mar 10, 2025

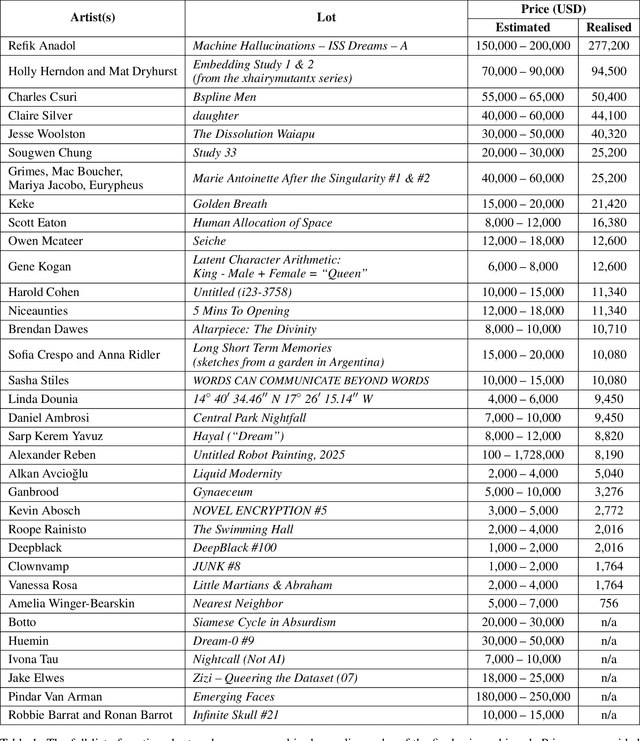

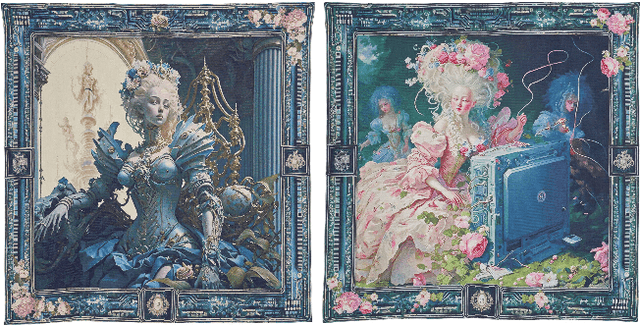

Abstract: In early 2025, Augmented Intelligence - Christie's first AI art auction - drew criticism for showcasing a controversial genre. Amid wider legal uncertainty, artists voiced concerns over data mining practices, notably with respect to copyright. The backlash could be viewed as a microcosm of AI's contested position in the creative economy. Touching on the auction's presentation, reception, and results, this paper explores how, amid social dissonance, machine learning finds its place in the artworld. Foregrounding responsible innovation, the paper provides a balanced perspective that champions creators' rights and brings nuance to this polarised debate. With a focus on exhibition design, it centres framing, which refers to the way a piece is presented to influence consumer perception. Context plays a central role in shaping our understanding of how good, valuable, and even ethical an artwork is. In this regard, Augmented Intelligence situates AI art within a surprisingly traditional framework, leveraging hallmarks of "high art" to establish the genre's cultural credibility. Generative AI has a clear economic dimension, converging questions of artistic merit with those of monetary worth. Scholarship on ways of seeing, or framing, could substantively inform the interpretation and evaluation of creative outputs, including assessments of their aesthetic and commercial value.

A Perspective on Literary Metaphor in the Context of Generative AI

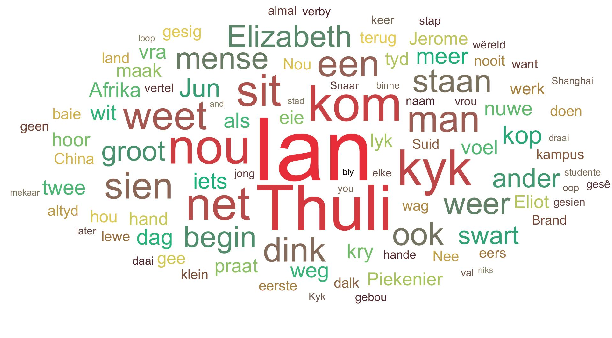

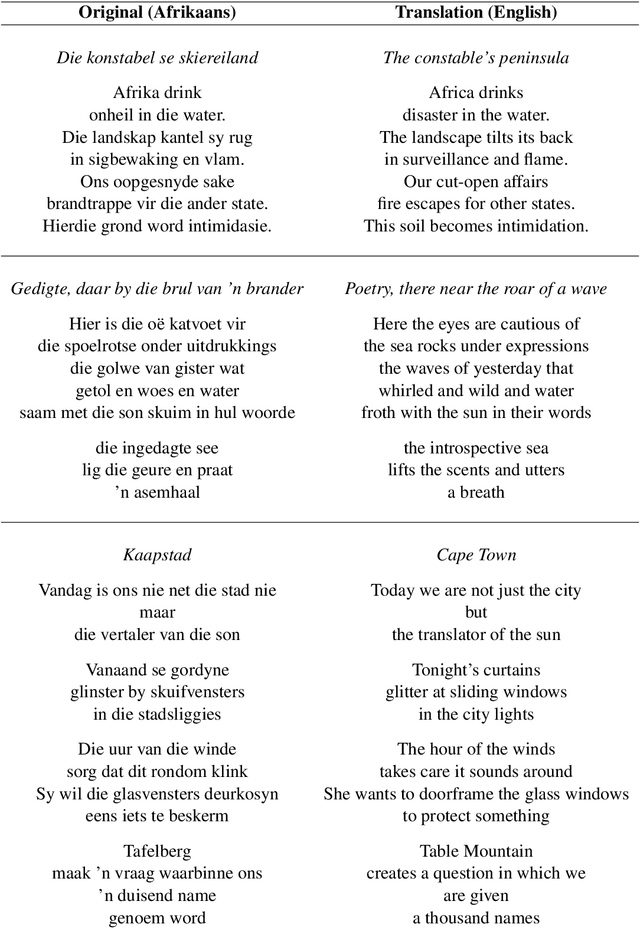

Sep 02, 2024

Abstract: At the intersection of creative text generation and literary theory, this study explores the role of literary metaphor and its capacity to generate a range of meanings. In this regard, literary metaphor is vital to the development of any particular language. To investigate whether the inclusion of original figurative language improves textual quality, we trained an LSTM-based language model in Afrikaans. The network produces phrases containing compellingly novel figures of speech. Specifically, the emphasis falls on how AI might be utilised as a defamiliarisation technique, which disrupts expected uses of language to augment poetic expression. Providing a literary perspective on text generation, the paper raises thought-provoking questions on aesthetic value, interpretation and evaluation.

AfriKI: Machine-in-the-Loop Afrikaans Poetry Generation

Mar 30, 2021

Abstract: This paper proposes a generative language model called AfriKI. Our approach is based on an LSTM architecture trained on a small corpus of contemporary fiction. With the aim of promoting human creativity, we use the model as an authoring tool to explore machine-in-the-loop Afrikaans poetry generation. To our knowledge, this is the first study to attempt creative text generation in Afrikaans.

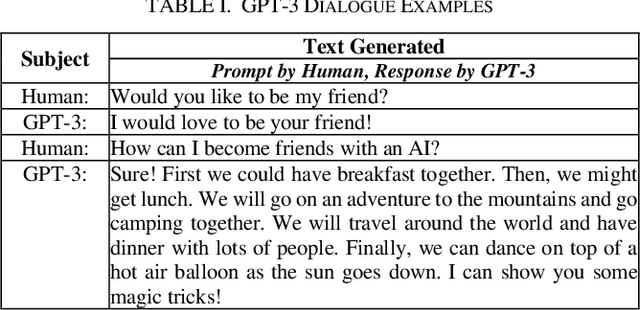

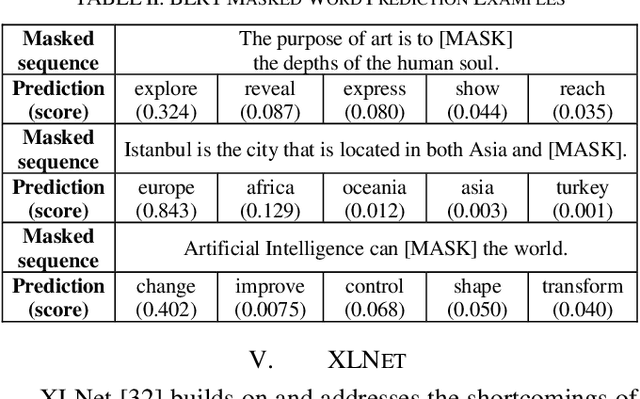

Exploring Transformers in Natural Language Generation: GPT, BERT, and XLNet

Feb 16, 2021

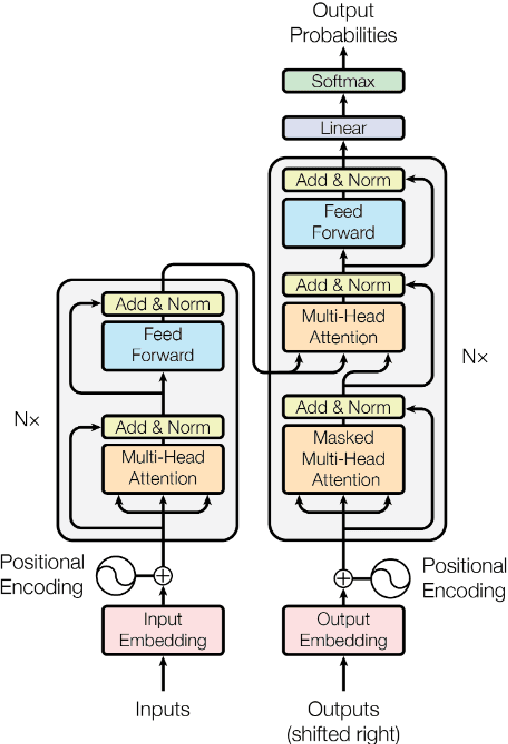

Abstract: Recent years have seen a proliferation of attention mechanisms and the rise of Transformers in Natural Language Generation (NLG). Previously, state-of-the-art NLG architectures such as RNN and LSTM ran into vanishing gradient problems; as sentences grew longer, distance between positions remained linear, and sequential computation hindered parallelization since sentences were processed word by word. Transformers usher in a new era. In this paper, we explore three major Transformer-based models, namely GPT, BERT, and XLNet, that carry significant implications for the field. NLG is a burgeoning area that is now bolstered with rapid developments in attention mechanisms. From poetry generation to summarization, text generation derives benefit as Transformer-based language models achieve groundbreaking results.
