Hyun-Chul Choi

Non-Parametric Style Transfer

Jun 26, 2022

Abstract: Recent feed-forward neural methods for arbitrary image style transfer mainly use the encoded feature map up to its second-order statistics, i.e., they linearly transform the encoded feature map of a content image to have the same mean and variance (or covariance) as a target style feature map. In this work, we extend second-order statistical feature matching to general distribution matching, based on the understanding that the style of an image is represented by the distribution of responses from receptive fields. For this generalization, we first propose a new feature transform layer that exactly matches the feature map distribution of the content image to that of the target style image. Second, we analyze recent style losses that are consistent with our new feature transform layer in order to train a decoder network that generates a style-transferred image from the transformed feature map. Our experimental results show that the stylized images obtained with our method are more similar to the target style images under all existing style measures, without losing content clarity.
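The difference between second-order matching and exact distribution matching described in this abstract can be illustrated on a single feature channel. The sketch below is a minimal illustration and not the paper's layer: the function names `match_mean_std` and `match_distribution` are hypothetical, the channel is assumed to be a flat list of floats, and the sort-based rank matching is one common way to match a one-dimensional distribution exactly, used here as an assumption (it also assumes both channels have the same number of values).

```python
from statistics import fmean, pstdev


def match_mean_std(content, style, eps=1e-8):
    """Second-order matching: give `content` the mean and std of `style`."""
    c_mean, c_std = fmean(content), pstdev(content)
    s_mean, s_std = fmean(style), pstdev(style)
    # Normalize the content channel, then rescale and shift to the style statistics.
    return [(x - c_mean) / (c_std + eps) * s_std + s_mean for x in content]


def match_distribution(content, style):
    """Exact matching (assumed sort-based): the k-th smallest content value
    is replaced by the k-th smallest style value, keeping content positions."""
    if len(content) != len(style):
        raise ValueError("this sketch assumes equally sized channels")
    order = sorted(range(len(content)), key=content.__getitem__)
    style_sorted = sorted(style)
    out = [0.0] * len(content)
    for rank, idx in enumerate(order):
        out[idx] = style_sorted[rank]
    return out
```

After `match_distribution`, the output holds exactly the style values, arranged in the rank order of the content values, so every statistic of the channel (not only mean and variance) agrees with the style channel. In a real network this would be applied per channel on tensors rather than on Python lists.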

Unbiased Image Style Transfer

Jul 05, 2018

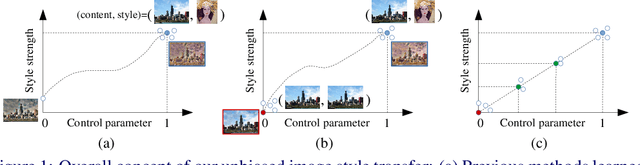

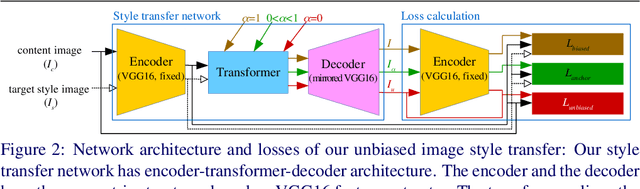

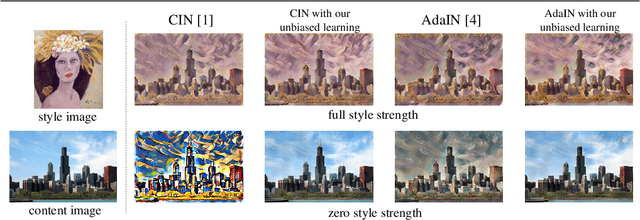

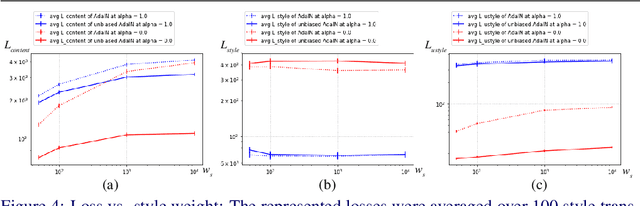

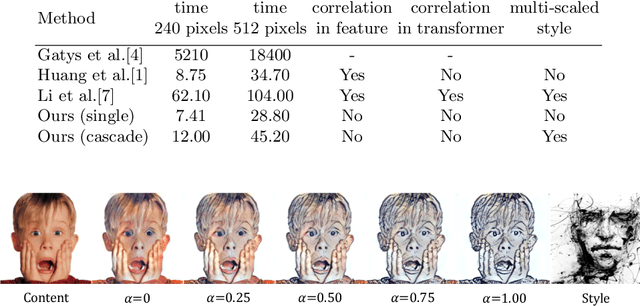

Abstract: Recent fast image style transfer methods use feed-forward neural networks to generate an output image of a desired style strength from an input pair of a content image and a target style image. In existing methods, an image of intermediate style between the content and the target style is obtained by decoding a linearly interpolated feature in the encoded feature space. However, the effectiveness of this kind of style strength interpolation has not been analyzed so far. In this paper, we provide an in-depth analysis of style interpolation and propose a method that controls style strength more effectively. We interpret the training of a style transfer network as regression learning between the control parameter and the output style strength. Under this interpretation, existing methods are biased because training is performed with one-sided data of full style strength (alpha = 1.0). This biased learning therefore does not guarantee that the desired intermediate style is generated for a style control parameter between 0.0 and 1.0. To solve this problem, we propose an unbiased learning technique that uses unbiased training data and a corresponding unbiased loss for alpha = 0.0, so that the feed-forward network generates a zero-style image, i.e., the content image, when alpha = 0.0. Our experimental results verify that our unbiased learning method achieves reconstruction of the content image at zero style strength, a better regression specification between the style control parameter and the output style, and more stable style transfer that is insensitive to the weight of the style loss, without additional complexity in the image generation process.
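The linear interpolation in feature space and the extra alpha = 0.0 training target mentioned in this abstract can be sketched as follows. This is an illustrative sketch only: `interpolate_features` and `zero_style_loss` are hypothetical names, features and images are assumed to be flat lists of floats, and the mean squared error used for the zero-style term is an assumption, since the abstract does not state the exact form of the unbiased loss.

```python
def interpolate_features(content_feat, stylized_feat, alpha):
    """Blend two feature vectors; alpha is the style strength in [0, 1]."""
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if len(content_feat) != len(stylized_feat):
        raise ValueError("feature vectors must have the same length")
    # alpha = 0 returns the content feature, alpha = 1 the fully stylized one.
    return [(1.0 - alpha) * c + alpha * s
            for c, s in zip(content_feat, stylized_feat)]


def zero_style_loss(decoded, content_image):
    """Assumed alpha = 0 loss: mean squared error between the decoder output
    and the content image, so that zero style strength reconstructs the content."""
    return sum((d - c) ** 2 for d, c in zip(decoded, content_image)) / len(content_image)
```

Training only at alpha = 1.0 constrains one end of this interpolation. Adding a term such as `zero_style_loss` for alpha = 0.0 constrains the other end, which is the idea behind the regression view in the abstract.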

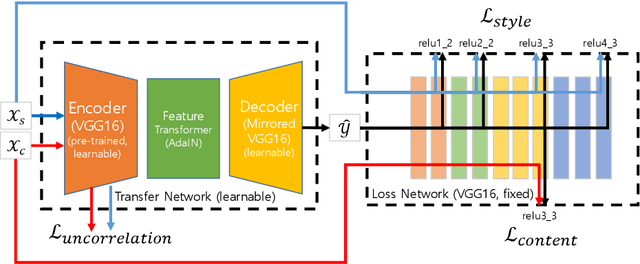

Uncorrelated Feature Encoding for Faster Image Style Transfer

Jul 04, 2018

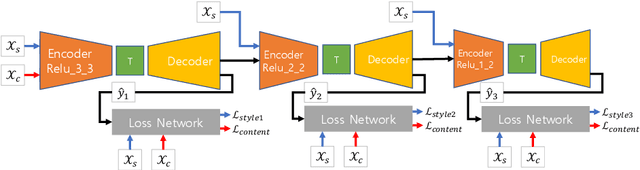

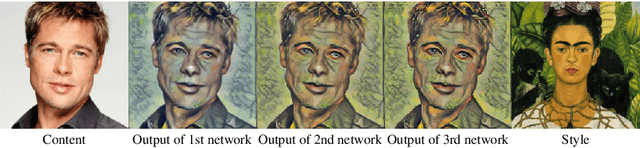

Abstract: Recent fast style transfer methods use a pre-trained convolutional neural network as both a feature encoder and a perceptual loss network. Although the pre-trained network generates receptive field responses that are effective for representing the style and content of an image, it is optimized for image classification rather than for image style transfer. Furthermore, because of its inter-channel correlation, it requires a time-consuming feature alignment process that takes this correlation into account. In this paper, we propose an end-to-end learning method that optimizes an encoder/decoder network for style transfer and removes the need to consider inter-channel correlation during feature alignment. We use an uncorrelation loss, i.e., the total correlation coefficient between the responses of different encoder channels, together with style and content losses to train the style transfer network. As a result, the encoder network learns to generate inter-channel uncorrelated features and is optimized for image style transfer, maintaining the quality of the image style with only a lightweight, correlation-unaware feature alignment process. Moreover, our method drastically reduces the redundant channels of the encoded feature, which results in a more compact network structure and faster forward processing. Our method can also be applied to a cascade network scheme for multi-scale style transfer, and it allows user control of style strength through a content-style trade-off parameter.
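The uncorrelation loss described in this abstract, a total correlation coefficient between different encoder channels, can be sketched as below. This is a rough illustration under assumptions: the names `pearson` and `uncorrelation_loss` are hypothetical, each channel is assumed to be a flat list of responses, and summing the absolute Pearson coefficient over all distinct channel pairs is one plausible reading of "total correlation coefficient", not necessarily the paper's exact definition.

```python
from math import sqrt
from statistics import fmean


def pearson(x, y):
    """Pearson correlation of two equally long response lists (0.0 if constant)."""
    mx, my = fmean(x), fmean(y)
    dx = [a - mx for a in x]
    dy = [b - my for b in y]
    denom = sqrt(sum(a * a for a in dx) * sum(b * b for b in dy))
    if denom == 0.0:
        return 0.0  # a constant channel carries no correlation signal
    return sum(a * b for a, b in zip(dx, dy)) / denom


def uncorrelation_loss(channels):
    """Assumed form: sum of |correlation| over all pairs of distinct channels."""
    total = 0.0
    for i in range(len(channels)):
        for j in range(i + 1, len(channels)):
            total += abs(pearson(channels[i], channels[j]))
    return total
```

The loss is zero when all channel pairs are uncorrelated and grows as channels become redundant. When it is small, aligning each channel independently (for example, by per-channel mean and variance) loses little compared with a covariance-aware transform, which is the motivation given in the abstract.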
