Hyeonsu Jeong

Understanding Self-Distillation and Partial Label Learning in Multi-Class Classification with Label Noise

Feb 16, 2024

Abstract: Self-distillation (SD) is the process of training a student model using the outputs of a teacher model, with both models sharing the same architecture. Our study theoretically examines SD in multi-class classification with cross-entropy loss, exploring both multi-round SD and SD with refined teacher outputs, inspired by partial label learning (PLL). By deriving a closed-form solution for the student model's outputs, we discover that SD essentially functions as label averaging among instances with high feature correlations. Initially beneficial, this averaging helps the model focus on feature clusters correlated with a given instance for predicting the label. However, it leads to diminishing performance with increasing distillation rounds. Additionally, we demonstrate SD's effectiveness in label noise scenarios and identify the label corruption condition and minimum number of distillation rounds needed to achieve 100% classification accuracy. Our study also reveals that one-step distillation with refined teacher outputs surpasses the efficacy of multi-step SD using the teacher's direct output in high noise rate regimes.

Recovering Top-Two Answers and Confusion Probability in Multi-Choice Crowdsourcing

Dec 29, 2022

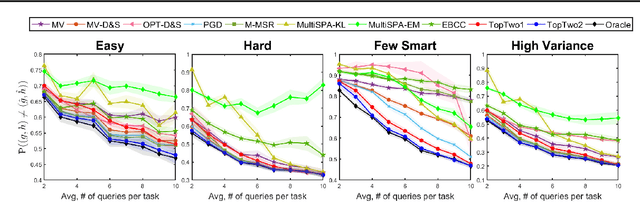

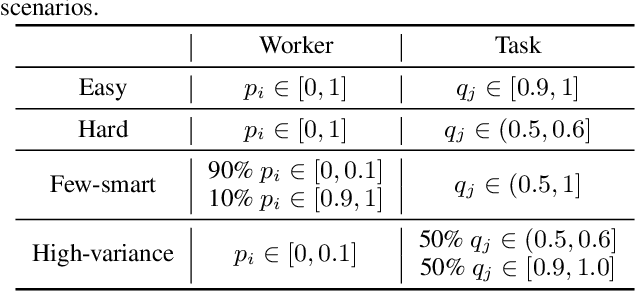

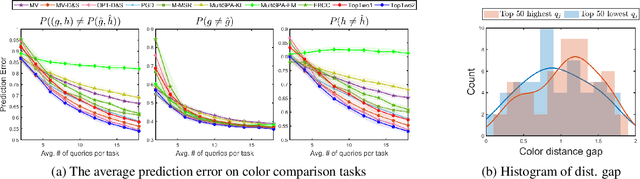

Abstract: Crowdsourcing has emerged as an effective platform to label a large volume of data in a cost- and time-efficient manner. Most previous works have focused on designing an efficient algorithm to recover only the ground-truth labels of the data. In this paper, we consider multi-choice crowdsourced labeling with the goal of recovering not only the ground truth but also the most confusing answer and the confusion probability. The most confusing answer provides useful information about the task by revealing the most plausible answer other than the ground truth and how plausible it is. To theoretically analyze such scenarios, we propose a model where there are top-two plausible answers for each task, distinguished from the rest of choices. Task difficulty is quantified by the confusion probability between the top two, and worker reliability is quantified by the probability of giving an answer among the top two. Under this model, we propose a two-stage inference algorithm to infer the top-two answers as well as the confusion probability. We show that our algorithm achieves the minimax optimal convergence rate. We conduct both synthetic and real-data experiments and demonstrate that our algorithm outperforms other recent algorithms. We also show the applicability of our algorithms in inferring the difficulty of tasks and training neural networks with the soft labels composed of the top-two most plausible classes.
