Hugh T. Blair

FPGA-Based In-Vivo Calcium Image Decoding for Closed-Loop Feedback Applications

Dec 09, 2022

Abstract: Miniaturized calcium imaging is an emerging neural recording technique that can monitor neural activity at large scale in a specific brain region of a rat or mouse. It has been widely used to study brain function in experimental neuroscience. Most calcium-image analysis pipelines operate offline, which incurs long processing latency and makes them hard to use for closed-loop feedback stimulation targeting specific neural circuits. In this paper, we propose an FPGA-based design that enables real-time calcium image processing and position decoding for closed-loop feedback applications. Our design performs real-time calcium image motion correction, enhancement, and fast trace extraction based on predefined cell contours and tiles. Using the extracted traces, we evaluated a variety of machine learning methods for decoding position. Our design and implementation achieve position decoding with less than 1 ms latency at 300 MHz on an FPGA for a variety of mainstream 1-photon miniscope sensors. We benchmarked position decoding accuracy on open-source datasets collected from six different rats, and we show that taking advantage of ordinal encoding in the decoding task consistently improves decoding accuracy across subjects without any overhead in hardware implementation or runtime.
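The abstract does not spell out how ordinal encoding is applied to position decoding. As a minimal sketch, assuming position is discretized into ordered bins and assuming the common cumulative-threshold formulation (one binary target per threshold, "is the bin index greater than j?"), the encoding and its inverse might look like the following. The function names `ordinal_encode` and `ordinal_decode` and the 0.5 cutoff are illustrative choices, not taken from the paper.

```python
import numpy as np

def ordinal_encode(bins, num_bins):
    """Encode integer bin indices as cumulative binary targets.

    Assumed scheme: bin k among num_bins ordered bins becomes a vector of
    num_bins - 1 values, where entry j is 1 if k > j and 0 otherwise.
    """
    bins = np.asarray(bins)
    thresholds = np.arange(num_bins - 1)
    return (bins[:, None] > thresholds[None, :]).astype(np.float32)

def ordinal_decode(probs, cutoff=0.5):
    """Recover bin indices by counting thresholds the prediction exceeds."""
    probs = np.asarray(probs)
    return (probs > cutoff).sum(axis=1)

# Example: three positions on a track split into 5 ordered bins.
targets = ordinal_encode([0, 2, 4], num_bins=5)
recovered = ordinal_decode(targets)
```

Under this assumed scheme, nearby bins share most of their target entries, so a decoder trained on these targets is penalized less for near misses than for distant ones. The output layer has about the same width as a one-hot classifier, which is consistent with the abstract's claim of no added hardware or runtime overhead.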

An uncertainty principle for neural coding: Conjugate representations of position and velocity are mapped onto firing rates and co-firing rates of neural spike trains

Dec 23, 2019

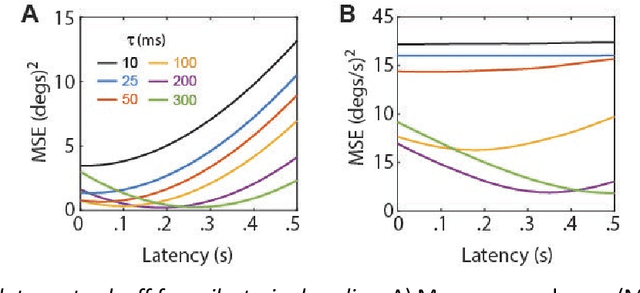

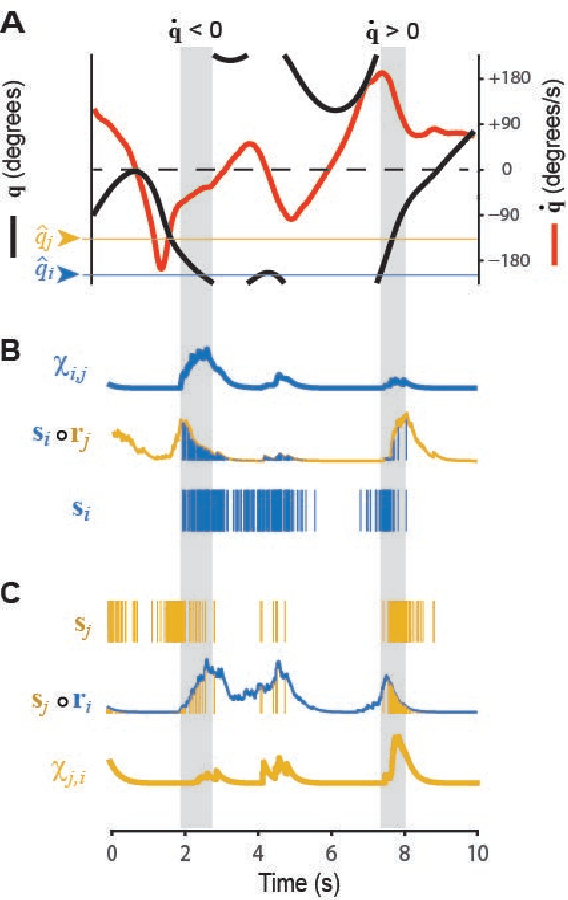

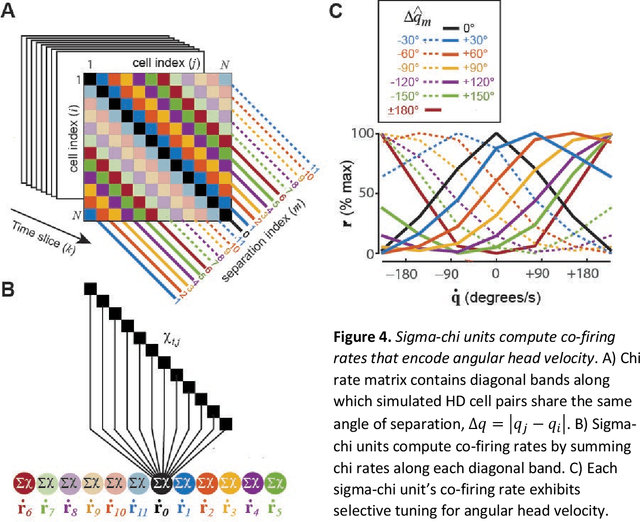

Abstract: The hippocampal system contains neural populations that encode an animal's position and velocity as it navigates through space. Here, we show that such populations can embed two codes within their spike trains: a firing rate code (R) conveyed by within-cell spike intervals, and a co-firing rate code (R') conveyed by between-cell spike intervals. These two codes behave as conjugates of one another, obeying an analog of the uncertainty principle from physics: information conveyed in R comes at the expense of information in R', and vice versa. An exception to this trade-off occurs when spike trains encode a pair of conjugate variables, such as position and velocity, which do not compete for capacity across R and R'. To illustrate this, we describe two biologically inspired methods for decoding R and R', referred to as sigma and sigma-chi decoding, respectively. Simulations of head direction (HD) and grid cells show that if firing rates are tuned for position (but not velocity), then position is recovered by sigma decoding, whereas velocity is recovered by sigma-chi decoding. Conversely, simulations of oscillatory interference among theta-modulated "speed cells" show that if co-firing rates are tuned for position (but not velocity), then position is recovered by sigma-chi decoding, whereas velocity is recovered by sigma decoding. Between these two extremes, information about both variables can be distributed across both channels, and partially recovered by both decoders. These results suggest that neurons with different spatial and temporal tuning properties, such as speed versus grid cells, might not encode different information, but rather distribute similar information about position and velocity in different ways across R and R'.
