Hossam Sharara

FLoBC: A Decentralized Blockchain-Based Federated Learning Framework

Dec 22, 2021

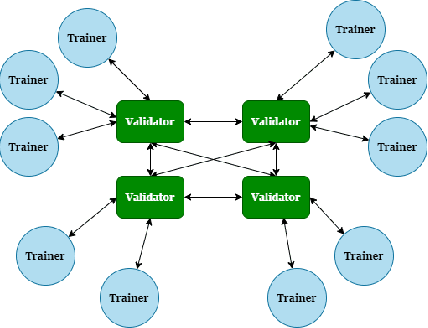

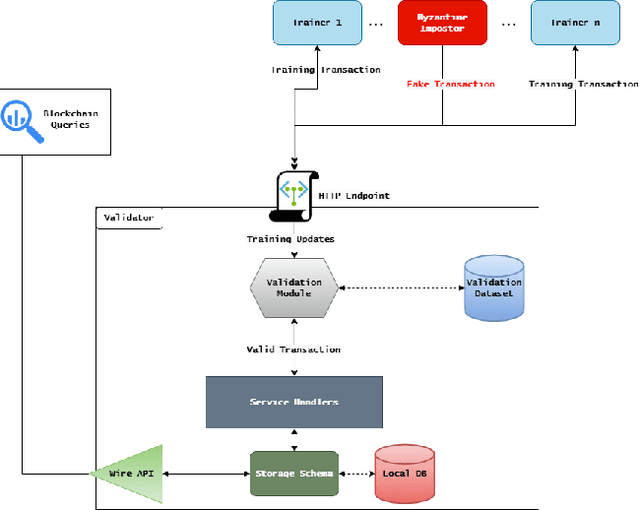

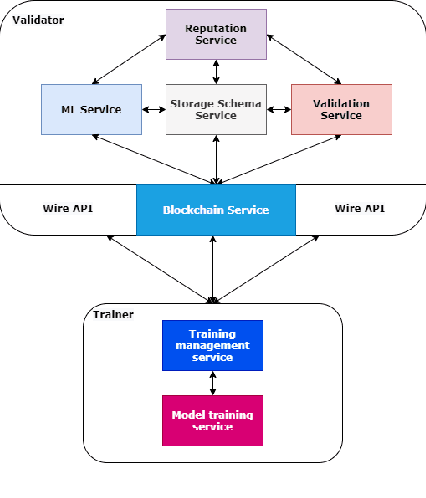

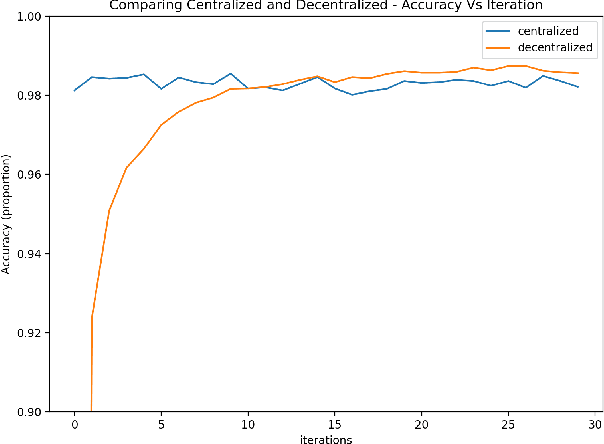

Abstract: The rapid expansion of data worldwide calls for more distributed solutions to apply machine learning on a much wider scale. The resulting distributed learning systems can have various degrees of centralization. In this work, we demonstrate FLoBC, our solution for building a generic decentralized federated learning system using blockchain technology, accommodating any machine learning model that is compatible with gradient descent optimization. We present our system design, which comprises two decentralized actors, the trainer and the validator, alongside our methodology for ensuring reliable and efficient operation of the system. Finally, we use FLoBC as an experimental sandbox to compare and contrast the effects of trainer-to-validator ratio, reward-penalty policy, and model synchronization schemes on overall system performance, ultimately showing by example that a decentralized federated learning system is a feasible alternative to more centralized architectures.
