Hikaru Ogura

Convex Fairness Constrained Model Using Causal Effect Estimators

Feb 16, 2020

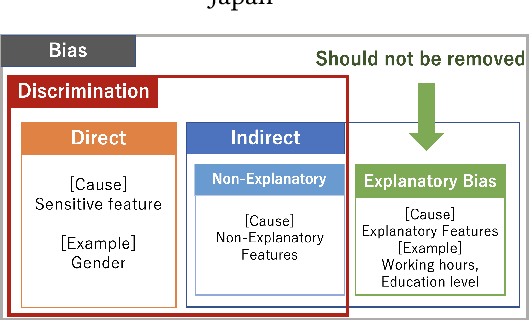

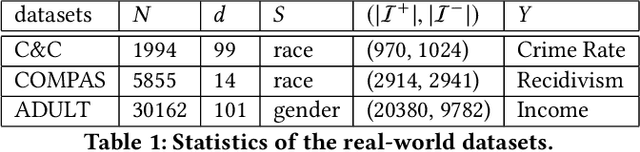

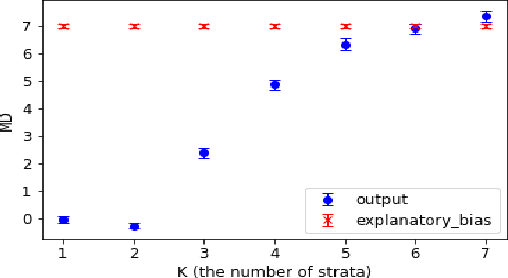

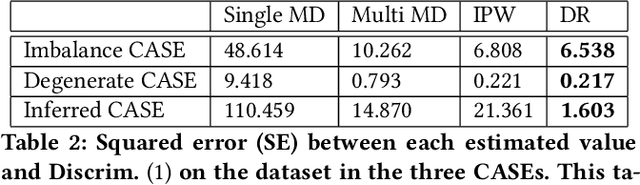

Abstract: Recent years have seen much research on fairness in machine learning. Mean difference (MD), or demographic parity, is one of the most popular measures of fairness. However, MD quantifies not only discrimination but also explanatory bias, which is the difference in outcomes justified by explanatory features. In this paper, we devise novel models, called FairCEEs, which remove discrimination while keeping explanatory bias. The models are based on estimators of causal effect that use propensity score analysis. We prove that FairCEEs with the squared loss theoretically outperform a naive MD constraint model. We provide an efficient algorithm for solving FairCEEs in regression and binary classification tasks. In experiments on synthetic and real-world data for both tasks, FairCEEs outperformed, in specific cases, an existing model that considers explanatory bias.
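To make the distinction between MD and a propensity-score-based causal effect estimate concrete, here is a minimal sketch. It is not the FairCEE model from the paper: it uses a standard inverse probability weighting (IPW) estimator as a stand-in, and the function names, the toy data, and the assumption that the propensity scores are known exactly are all illustrative choices made here.

```python
import numpy as np

def mean_difference(y, s):
    """Difference in mean outcome between the groups s == 1 and s == 0."""
    y, s = np.asarray(y, dtype=float), np.asarray(s)
    return y[s == 1].mean() - y[s == 0].mean()

def ipw_effect(y, s, e):
    """Inverse probability weighting estimate of the effect of s on y.

    e[i] is the propensity score P(s = 1 | x_i). It is assumed known here;
    in practice it would be estimated, e.g. with logistic regression.
    """
    y, s, e = (np.asarray(a, dtype=float) for a in (y, s, e))
    return np.mean(s * y / e) - np.mean((1 - s) * y / (1 - e))

# Hypothetical toy data: the outcome depends only on the explanatory
# feature x, but the sensitive attribute s is correlated with x.
x = np.array([1, 1, 1, 1, 0, 0, 0, 0])
s = np.array([1, 1, 1, 0, 1, 0, 0, 0])
y = x.astype(float)                 # y is fully explained by x
e = np.where(x == 1, 0.75, 0.25)    # P(s = 1 | x), matching the data above

md = mean_difference(y, s)   # 0.75 - 0.25 = 0.5
ipw = ipw_effect(y, s, e)    # close to 0

print("mean difference:", md)
print("IPW estimate:", ipw)
```

In this toy setting MD reports a gap of 0.5 even though the outcome is determined entirely by the explanatory feature, while the propensity-weighted estimate is close to zero. That gap is the explanatory bias the abstract describes; a constraint built on MD would penalize it, whereas one built on a causal effect estimator would not.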
