Het Naik

Towards Designing Computer Vision-based Explainable-AI Solution: A Use Case of Livestock Mart Industry

Feb 08, 2021

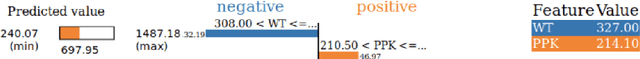

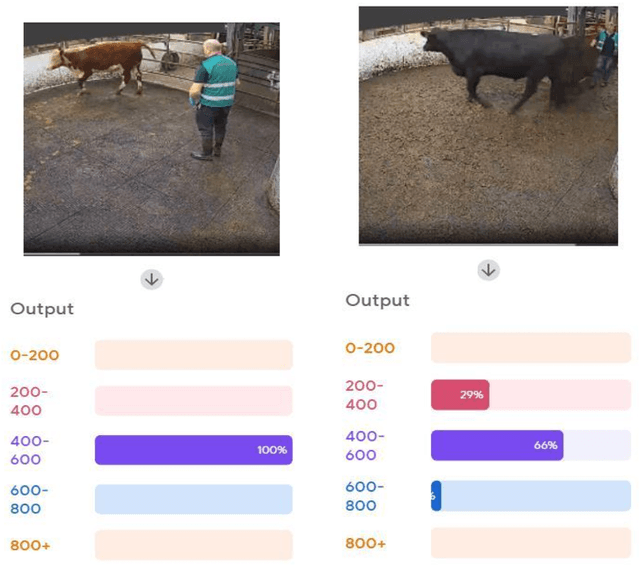

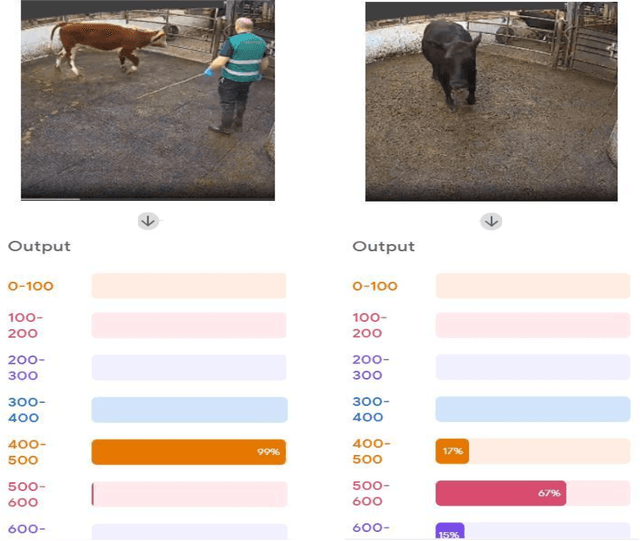

Abstract: The objective of an online Mart is to match buyers and sellers, to weigh animals, and to oversee their sale. A reliable pricing method can be developed with ML models that learn from historical sales data. However, when an AI model recommends a price, the price alone reveals little about the qualities and abilities of an animal (i.e., the model acts like a black box). An interested buyer would like to know more about the salient features of an animal before making a choice based on their requirements. A model capable of explaining the factors that affect the price point is therefore essential to the market. It can also inspire confidence in buyers and sellers about the price offered. To achieve these objectives, we have been working with the team at MartEye, a startup based in Portershed in Galway City, Ireland. In this paper, we report our work-in-progress research towards building a smart video analytics platform that leverages Explainable AI techniques.

Explainable AI meets Healthcare: A Study on Heart Disease Dataset

Nov 06, 2020

Abstract: With the increasing availability of structured and unstructured data and the swift progress of analytical techniques, Artificial Intelligence (AI) is bringing a revolution to the healthcare industry. As AI takes on an increasingly indispensable role in healthcare, there are growing concerns over the lack of transparency and explainability, in addition to potential bias in the model's predictions. This is where Explainable Artificial Intelligence (XAI) comes into the picture. XAI increases the trust that medical practitioners and AI researchers place in an AI system and thus, eventually, leads to more widespread deployment of AI in healthcare. In this paper, we present different interpretability techniques. The aim is to enlighten practitioners on the understandability and interpretability of explainable AI systems using a variety of available techniques, which can be very advantageous in the healthcare domain. A medical diagnosis model is responsible for human life, and we need to be confident enough to treat a patient as instructed by a black-box model. Our paper contains examples based on the heart disease dataset and elucidates how explainability techniques should be preferred to create trustworthiness when using AI systems in healthcare.
