Hazem Abbas

Improving Depth Estimation using Location Information

Dec 27, 2021

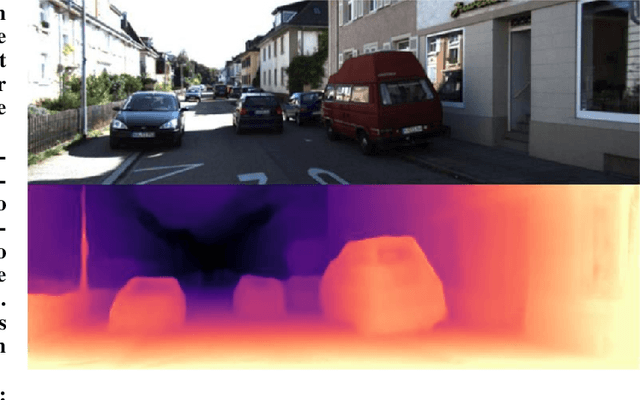

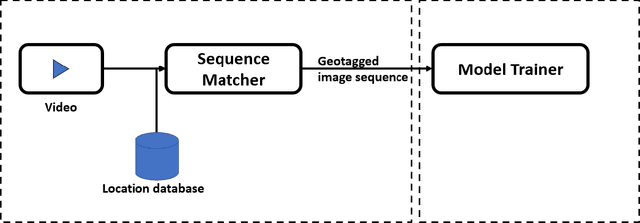

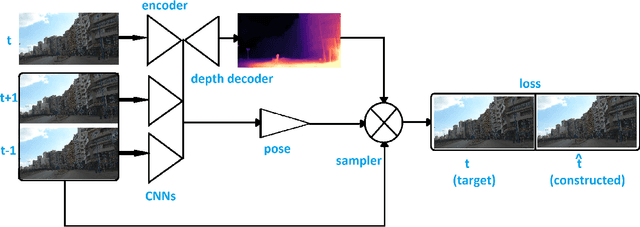

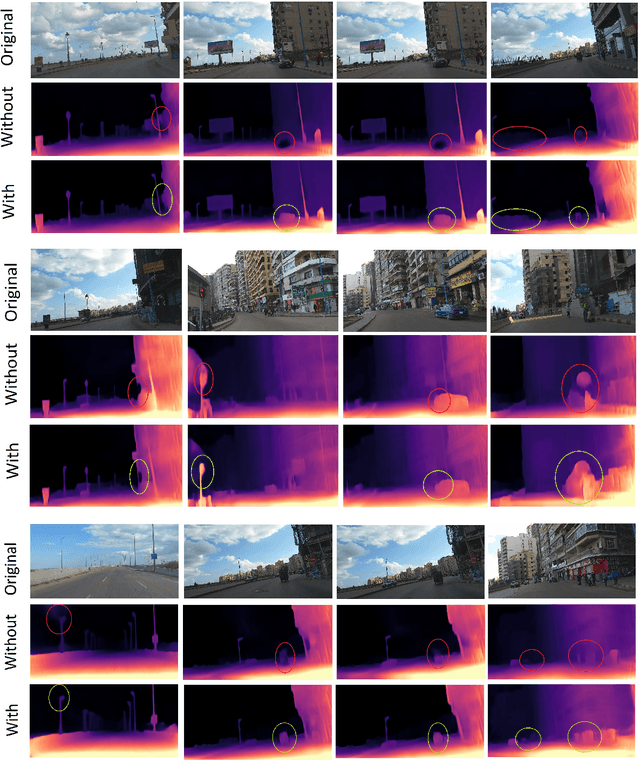

Abstract: The ability to accurately estimate depth information is crucial for many autonomous applications, which must recognize the surrounding environment and predict the depth of important objects. A technique that has recently come into wide use is monocular depth estimation, in which the depth map is inferred from a single image. This paper improves self-supervised deep learning techniques for accurate, generalized monocular depth estimation. The main idea is to train the deep model on a sequence of frames, each geotagged with its location information. This enables the model to enhance depth estimation using area semantics. We demonstrate the effectiveness of our model in improving depth estimation results. The model is trained in a realistic environment, and the results show improvements in the depth map after adding the location data to the model training phase.

A Transfer Learning End-to-End Arabic Text-To-Speech Deep Architecture

Jul 22, 2020

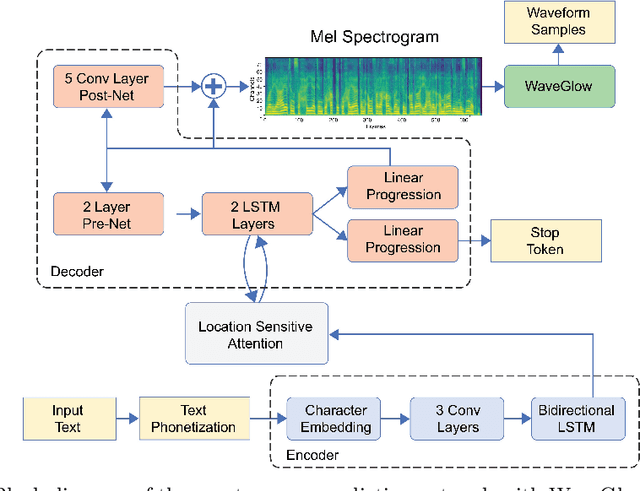

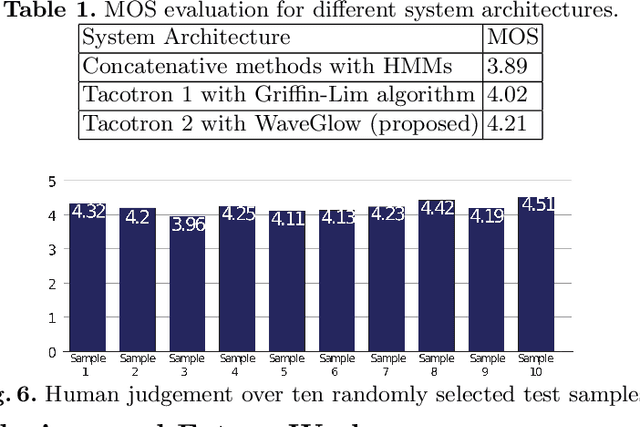

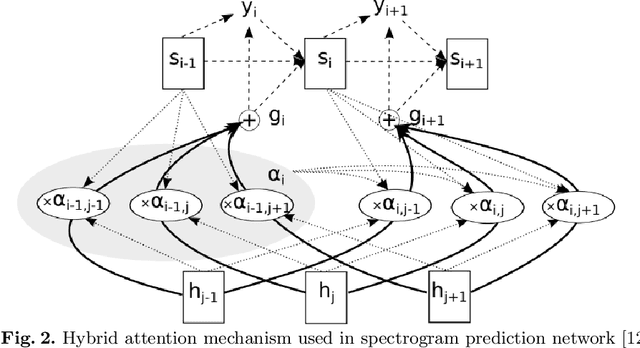

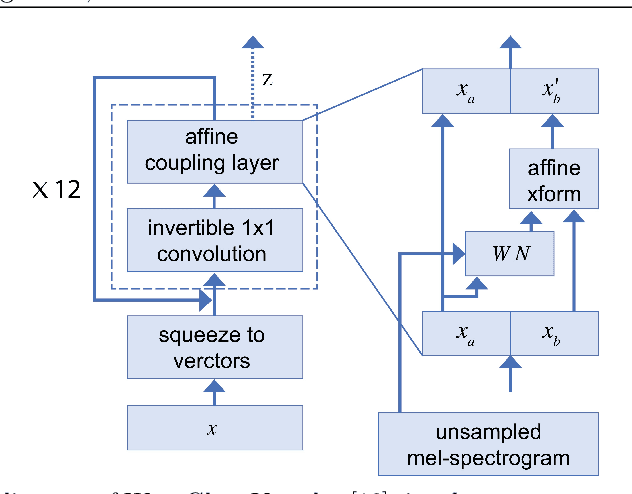

Abstract: Speech synthesis is the artificial production of human speech. A typical text-to-speech (TTS) system converts text in a given language into a waveform. Many English TTS systems exist that produce mature, natural, human-like speech. In contrast, other languages, including Arabic, have not been considered until recently. Existing Arabic speech synthesis solutions are slow and of low quality, and the naturalness of their synthesized speech is inferior to that of English synthesizers. They also lack key speech factors such as intonation, stress, and rhythm. Various works have been proposed to solve these issues, including concatenative methods such as unit selection, and parametric methods. However, they require a lot of laborious work and domain expertise. Another reason for the poor performance of Arabic speech synthesizers is the lack of speech corpora, unlike English, which has many publicly available corpora and audiobooks. This work describes how to generate high-quality, natural, human-like Arabic speech using an end-to-end deep neural network architecture. It uses only $\langle$text, audio$\rangle$ pairs with a relatively small amount of recorded audio, totaling 2.41 hours. It illustrates how to use English character embeddings even though the input consists of diacritized Arabic characters, and how to preprocess the audio samples to achieve the best results.
