Harshdeep Singh

A Survey of Classical And Quantum Sequence Models

Dec 15, 2023

Abstract: Our primary objective is to conduct a brief survey of various classical and quantum neural net sequence models, including self-attention and recurrent neural networks, with a focus on recent quantum approaches proposed to work with near-term quantum devices, while exploring some basic enhancements for these quantum models. We re-implement a key representative set of these existing methods, adapting an image classification approach using quantum self-attention to create a quantum hybrid transformer that works for text and image classification, and applying quantum self-attention and quantum recurrent neural networks to natural language processing tasks. We also explore different encoding techniques and introduce positional encoding into quantum self-attention neural networks, leading to improved accuracy and faster convergence in text and image classification experiments. This paper also performs a comparative analysis of classical self-attention models and their quantum counterparts, helping shed light on the differences in these models and their performance.
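The abstract does not say which positional encoding scheme is introduced into the quantum self-attention networks. As a point of reference only, the sketch below shows the standard sinusoidal encoding used in classical transformers; the function names `sinusoidal_positional_encoding` and `add_positions` are hypothetical, and nothing here is claimed to match the paper's implementation.

```python
import math


def sinusoidal_positional_encoding(seq_len, dim):
    """Return a seq_len x dim table of classical sinusoidal position codes.

    Assumption: this is the classical transformer scheme, used here only
    for illustration; the paper's own encoding may differ.
    """
    table = []
    for pos in range(seq_len):
        row = []
        for i in range(dim):
            # Dimensions (2k, 2k+1) share one frequency: 1 / 10000^(2k/dim).
            angle = pos / (10000 ** ((2 * (i // 2)) / dim))
            # Even dimensions use sine, odd dimensions use cosine.
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


def add_positions(embeddings):
    """Add the position code element-wise to each token embedding."""
    seq_len, dim = len(embeddings), len(embeddings[0])
    pe = sinusoidal_positional_encoding(seq_len, dim)
    return [
        [e + p for e, p in zip(emb_row, pe_row)]
        for emb_row, pe_row in zip(embeddings, pe)
    ]
```

In a classical model, the position-augmented embeddings would then be passed to the self-attention layer, so that otherwise identical tokens at different positions become distinguishable.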

ChemoVerse: Manifold traversal of latent spaces for novel molecule discovery

Sep 29, 2020

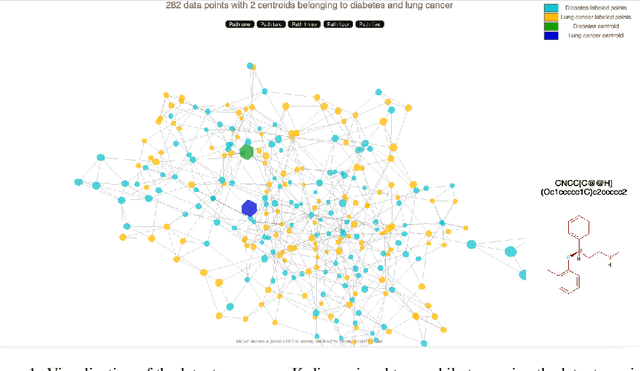

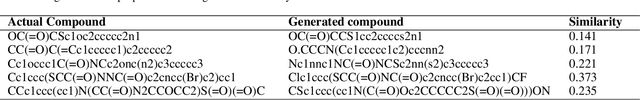

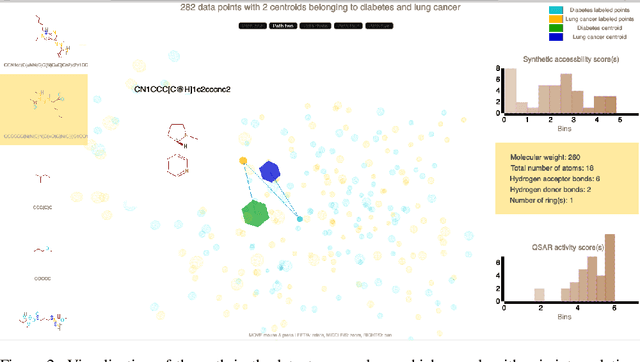

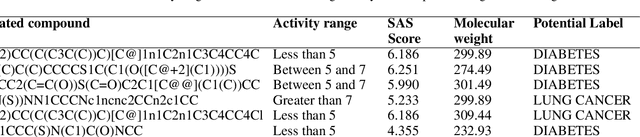

Abstract: In order to design a more potent and effective chemical entity, it is essential to identify molecular structures with the desired chemical properties. Recent advances in generative models using neural networks and machine learning are being widely used by many emerging startups and researchers in this domain to design virtual libraries of drug-like compounds. Although these models can help a scientist to produce novel molecular structures rapidly, the challenge still exists in the intelligent exploration of the latent spaces of generative models, thereby reducing the randomness in the generative procedure. In this work we present a manifold traversal with heuristic search to explore the latent chemical space. Different heuristics and scores such as the Tanimoto coefficient, synthetic accessibility, binding activity, and QED drug-likeness can be incorporated to increase the validity and proximity for desired molecular properties of the generated molecules. For evaluating the manifold traversal exploration, we produce the latent chemical space using various generative models such as grammar variational autoencoders (with and without attention) as they deal with the randomized generation and validity of compounds. With this novel traversal method, we are able to find more unseen compounds and more specific regions to mine in the latent space. Finally, these components are brought together in a simple platform allowing users to perform search, visualization and selection of novel generated compounds.
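Of the scores named in the abstract, the Tanimoto coefficient is simple enough to sketch directly. The example below assumes each molecule is represented by the set of "on" bit indices of some fingerprint; the fingerprint data, the function names `tanimoto` and `rank_by_similarity`, and the choice to return 0.0 for two empty fingerprints are illustrative assumptions, not details taken from the paper.

```python
def tanimoto(bits_a, bits_b):
    """Tanimoto coefficient |A ∩ B| / |A ∪ B| over fingerprint on-bits."""
    a, b = set(bits_a), set(bits_b)
    union = len(a | b)
    if union == 0:
        # Assumption: two empty fingerprints are scored 0.0 (conventions vary).
        return 0.0
    return len(a & b) / union


def rank_by_similarity(query_bits, candidates):
    """Return candidate names sorted from most to least similar to the query.

    `candidates` maps a (hypothetical) molecule name to its on-bit indices.
    """
    return sorted(
        candidates,
        key=lambda name: tanimoto(query_bits, candidates[name]),
        reverse=True,
    )
```

A heuristic search over a latent space could use a score like this to prefer decoded candidates that stay close to a reference molecule, possibly combined with the other scores the abstract mentions.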
