Haojun Hou

Transition Relation Aware Self-Attention for Session-based Recommendation

Mar 12, 2022

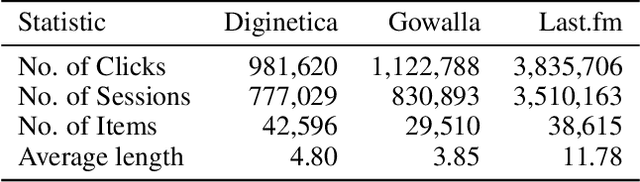

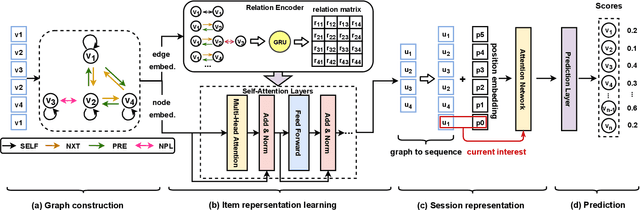

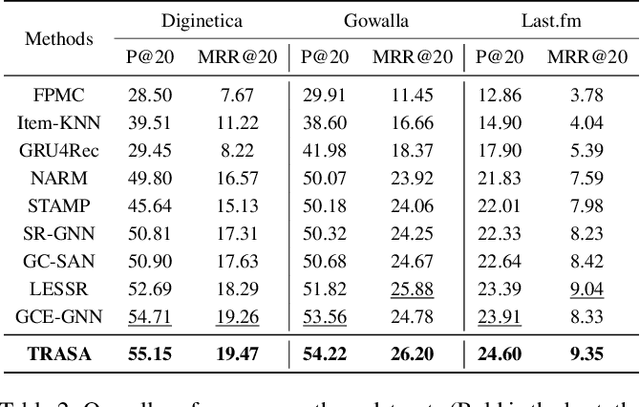

Abstract: Session-based recommendation is a challenging problem in real-world scenarios such as e-commerce, short-video platforms, and music platforms; it aims to predict the next click action from an anonymous session. Recently, graph neural networks (GNNs) have emerged as the state-of-the-art methods for session-based recommendation. However, we find two limitations in these methods. First, item transition relations are not fully exploited because they are not explicitly modeled. Second, long-range dependencies between items cannot be captured effectively due to the limitations of GNNs. To solve these problems, we propose a novel approach for session-based recommendation, called Transition Relation Aware Self-Attention (TRASA). Specifically, TRASA first converts the session into a graph and then encodes the shortest path between items with a gated recurrent unit (GRU) as their transition relation. Then, to capture long-range dependencies, TRASA uses self-attention to build a direct connection between any two items without going through intermediate ones. The transition relations are also incorporated explicitly when computing the attention scores. Extensive experiments on three real-world datasets demonstrate that TRASA consistently outperforms existing state-of-the-art methods.
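The pipeline described in the abstract (session to graph, shortest path encoded by a GRU, relation-aware attention scores) can be illustrated with a minimal NumPy sketch. This is not the authors' implementation. The abstract does not say how the relation enters the score, so the sketch assumes it is added on the key side, `q_i · (k_j + r_ij) / sqrt(d)`. It also assumes that pairs with no connecting path, and each item paired with itself, get a zero relation vector. All function names, parameter shapes, and the random initialization are hypothetical.

```python
from collections import deque
import numpy as np

def session_graph(session):
    """Directed graph with an edge u -> v for each consecutive click pair."""
    adj = {item: [] for item in session}
    for u, v in zip(session, session[1:]):
        if u != v and v not in adj[u]:
            adj[u].append(v)
    return adj

def shortest_path(adj, src, dst):
    """BFS shortest path from src to dst as a list of items, or None."""
    parent = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        for nxt in adj[node]:
            if nxt not in parent:
                parent[nxt] = node
                queue.append(nxt)
    return None

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def gru_encode(vectors, W, U, b):
    """Run a single-layer GRU over the vectors; return the last hidden state."""
    h = np.zeros(W.shape[1])
    for x in vectors:
        z = sigmoid(W[0] @ x + U[0] @ h + b[0])        # update gate
        r = sigmoid(W[1] @ x + U[1] @ h + b[1])        # reset gate
        n = np.tanh(W[2] @ x + U[2] @ (r * h) + b[2])  # candidate state
        h = (1.0 - z) * n + z * h
    return h

def init_params(d, rng):
    """Hypothetical random parameters; a real model would learn these."""
    mat = lambda *shape: rng.normal(scale=0.1, size=shape)
    return {"Wq": mat(d, d), "Wk": mat(d, d), "Wv": mat(d, d),
            "W": mat(3, d, d), "U": mat(3, d, d), "b": np.zeros((3, d))}

def relation_aware_attention(session, emb, p):
    """Self-attention over unique session items with path-based relations."""
    items = list(dict.fromkeys(session))  # unique items, first-click order
    adj = session_graph(session)
    X = np.stack([emb[i] for i in items])
    n, d = X.shape
    R = np.zeros((n, n, d))  # assumption: zero relation when no path exists
    for a, u in enumerate(items):
        for c, v in enumerate(items):
            path = shortest_path(adj, u, v)
            if path is not None and len(path) > 1:
                R[a, c] = gru_encode([emb[i] for i in path],
                                     p["W"], p["U"], p["b"])
    Q, K, V = X @ p["Wq"], X @ p["Wk"], X @ p["Wv"]
    # Assumed scoring: q_i . (k_j + r_ij) / sqrt(d)
    scores = (Q @ K.T + np.einsum("id,ijd->ij", Q, R)) / np.sqrt(d)
    scores -= scores.max(axis=1, keepdims=True)  # numerically stable softmax
    A = np.exp(scores)
    A /= A.sum(axis=1, keepdims=True)
    return items, A, A @ V

rng = np.random.default_rng(0)
session = [1, 2, 3, 2, 4]
emb = {i: rng.normal(size=4) for i in set(session)}
items, attn, out = relation_aware_attention(session, emb, init_params(4, rng))
```

Because every item attends to every other item directly, the number of attention steps between two items does not grow with their distance in the session; the shortest-path encoding is what carries the graph structure into the score.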
