Hannuo Zhang

Seg-CycleGAN: SAR-to-optical image translation guided by a downstream task

Aug 11, 2024

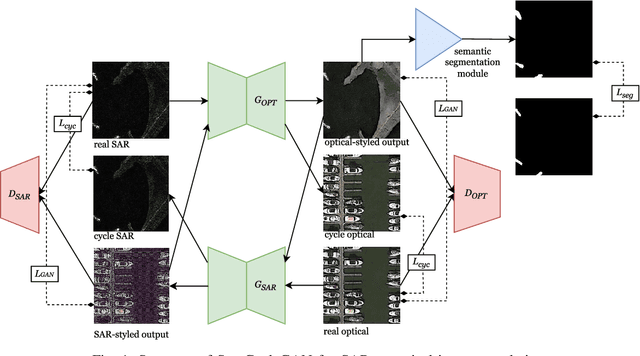

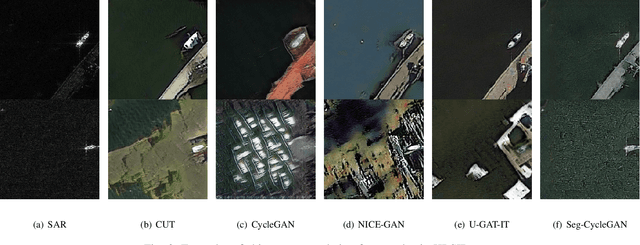

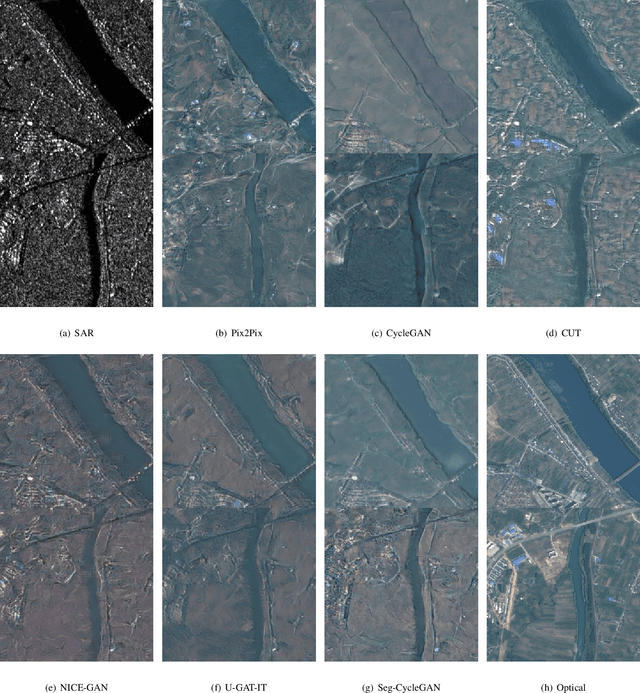

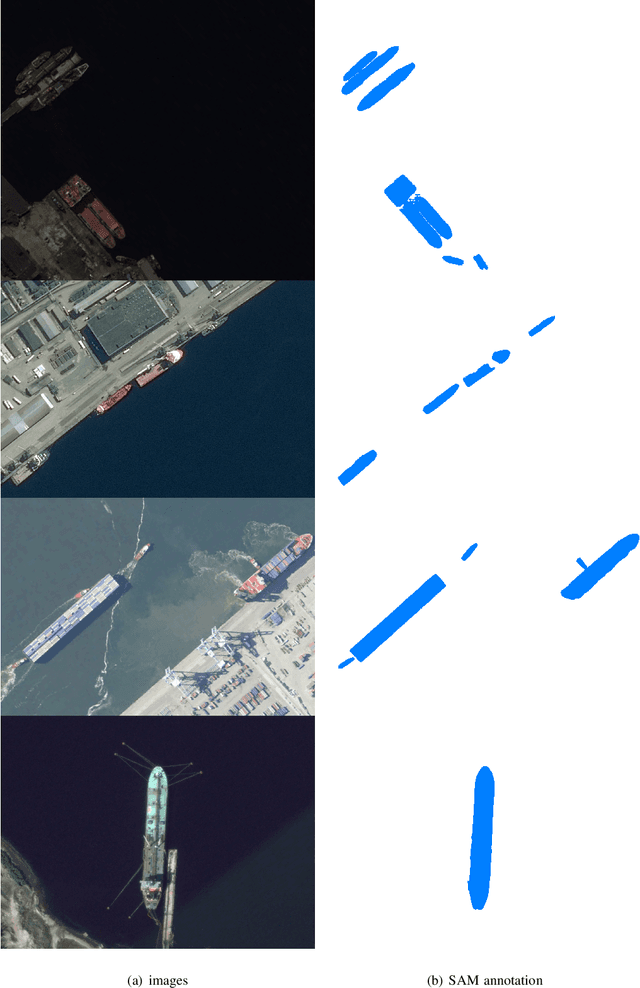

Abstract: Optical remote sensing and Synthetic Aperture Radar (SAR) remote sensing are crucial for Earth observation, offering complementary capabilities. While optical sensors provide high-quality images, they are limited by weather and lighting conditions. In contrast, SAR sensors can operate effectively under adverse conditions. This letter proposes a GAN-based SAR-to-optical image translation method named Seg-CycleGAN, designed to enhance the accuracy of ship target translation by leveraging semantic information from a pre-trained semantic segmentation model. Our method uses the downstream task of ship target semantic segmentation to guide the training of the image translation network, improving the quality of the output optical-styled images. The work also reveals the potential of foundation-model-annotated datasets in SAR-to-optical translation tasks and suggests broader research and applications for downstream-task-guided frameworks. The code will be available at https://github.com/NPULHH/
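The abstract does not spell out the training objective, so the following is only a rough sketch of how a downstream segmentation loss might be added to a CycleGAN-style generator objective. The function names, the weights `lambda_cyc` and `lambda_seg`, and the use of binary cross-entropy against a ship mask are all assumptions made for illustration, not the authors' implementation.

```python
import numpy as np


def l1_cycle_loss(x, x_rec):
    """Mean absolute error between an image and its cycle reconstruction."""
    return float(np.mean(np.abs(x - x_rec)))


def ship_segmentation_loss(probs, mask, eps=1e-7):
    """Pixel-wise binary cross-entropy between predicted ship probabilities
    (from a frozen, pre-trained segmentation model applied to the translated
    image) and a binary ship mask. Hypothetical choice of loss."""
    p = np.clip(probs, eps, 1.0 - eps)
    return float(-np.mean(mask * np.log(p) + (1.0 - mask) * np.log(1.0 - p)))


def generator_objective(adv, cyc, seg, lambda_cyc=10.0, lambda_seg=1.0):
    """Weighted sum of adversarial, cycle-consistency, and segmentation terms.
    The weights are illustrative placeholders, not values from the paper."""
    return adv + lambda_cyc * cyc + lambda_seg * seg


# Toy data standing in for a SAR patch, its cycle reconstruction,
# a ship mask, and the segmentation model's output on the translated image.
rng = np.random.default_rng(0)
sar = rng.random((1, 64, 64))
sar_rec = sar + 0.01 * rng.standard_normal(sar.shape)
mask = (rng.random((64, 64)) > 0.9).astype(np.float64)
seg_probs = np.clip(mask * 0.8 + 0.1, 0.0, 1.0)

cyc = l1_cycle_loss(sar, sar_rec)
seg = ship_segmentation_loss(seg_probs, mask)
total = generator_objective(adv=0.7, cyc=cyc, seg=seg)
print(f"cycle={cyc:.4f} seg={seg:.4f} total={total:.4f}")
```

In a sketch like this, the segmentation term penalizes translated images in which the frozen segmentation model can no longer find the ships, which is one plausible way a downstream task could steer the translation network.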
