Hannah Bos

Micro-power spoken keyword spotting on Xylo Audio 2

Jun 21, 2024

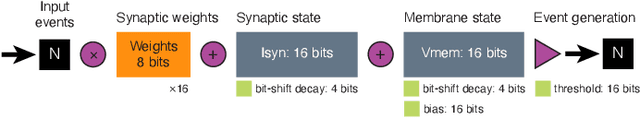

Abstract: For many years, designs for "Neuromorphic" or brain-like processors have been motivated by achieving extreme energy efficiency, compared with von-Neumann and tensor processor devices. As part of their design language, Neuromorphic processors take advantage of weight, parameter, state and activity sparsity. In the extreme case, neural networks based on these principles mimic the sparse activity of biological nervous systems, in "Spiking Neural Networks" (SNNs). Few benchmarks are available for Neuromorphic processors that have been implemented for a range of Neuromorphic and non-Neuromorphic platforms, and which can therefore demonstrate the energy benefits of Neuromorphic processor designs. Here we describe the implementation of a spoken audio keyword-spotting (KWS) benchmark "Aloha" on the Xylo Audio 2 (SYNS61210) Neuromorphic processor device. We obtained high deployed quantized task accuracy (95%), exceeding the benchmark task accuracy. We measured real continuous power of the deployed application on Xylo. We obtained best-in-class dynamic inference power ($291\mu$W) and best-in-class inference efficiency ($6.6\mu$J / Inf). Xylo sets a new minimum power for the Aloha KWS benchmark, and highlights the extreme energy efficiency achievable with Neuromorphic processor designs. Our results show that Neuromorphic designs are well-suited for real-time near- and in-sensor processing on edge devices.

Sub-mW Neuromorphic SNN audio processing applications with Rockpool and Xylo

Aug 30, 2022

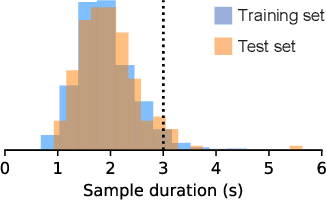

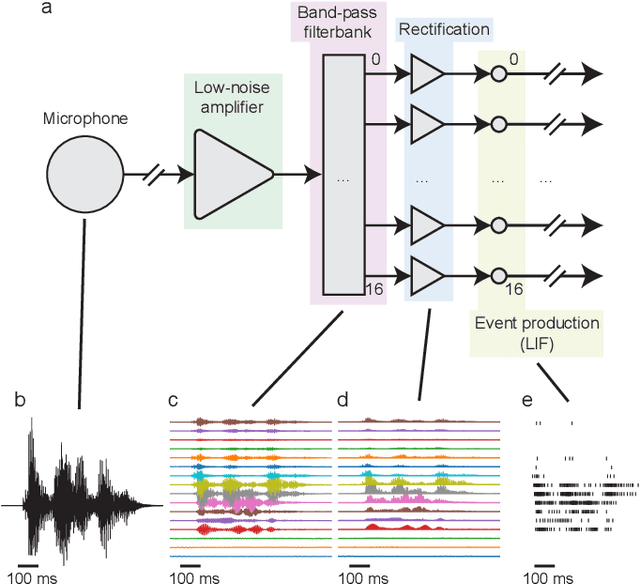

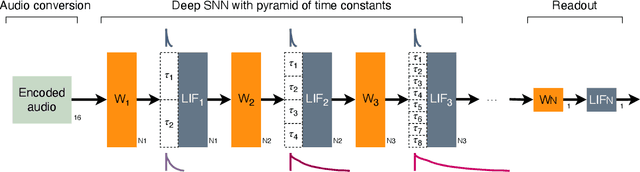

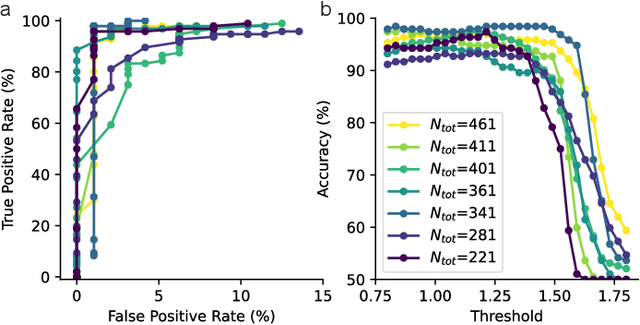

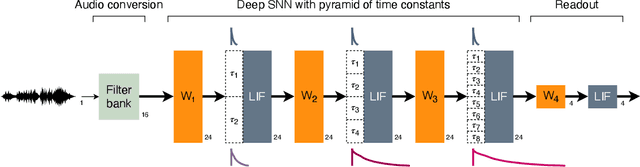

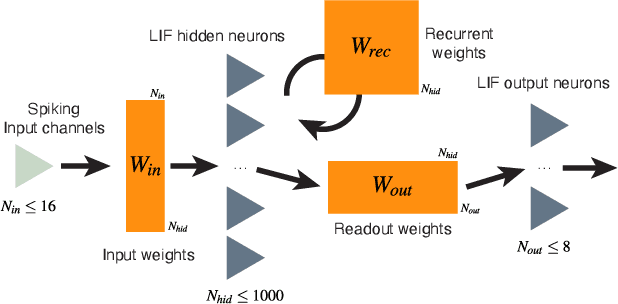

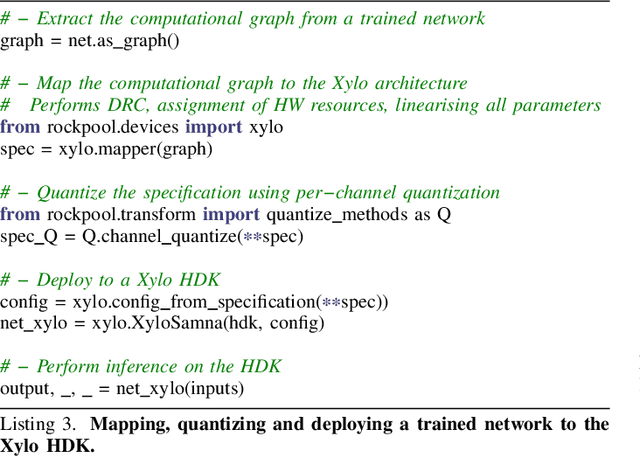

Abstract: Spiking Neural Networks (SNNs) provide an efficient computational mechanism for temporal signal processing, especially when coupled with low-power SNN inference ASICs. SNNs have historically been difficult to configure, lacking a general method for finding solutions for arbitrary tasks. In recent years, gradient-descent optimization methods have been applied to SNNs with increasing ease. SNNs and SNN inference processors therefore offer a good platform for commercial low-power signal processing in energy-constrained environments without cloud dependencies. However, to date these methods have not been accessible to ML engineers in industry, requiring graduate-level training to successfully configure a single SNN application. Here we demonstrate a convenient high-level pipeline to design, train and deploy arbitrary temporal signal processing applications to sub-mW SNN inference hardware. We apply a new straightforward SNN architecture designed for temporal signal processing, using a pyramid of synaptic time constants to extract signal features at a range of temporal scales. We demonstrate this architecture on an ambient audio classification task, deployed to the Xylo SNN inference processor in streaming mode. Our application achieves high accuracy (98%) and low latency (100 ms) at low power (<$4\mu$W inference power). Our approach makes training and deploying SNN applications available to ML engineers with general NN backgrounds, without requiring specific prior experience with spiking NNs. We intend for our approach to make Neuromorphic hardware and SNNs an attractive choice for commercial low-power and edge signal processing applications.
