Halil Faruk Karagoz

Conformalized High-Density Quantile Regression via Dynamic Prototypes-based Probability Density Estimation

Nov 02, 2024

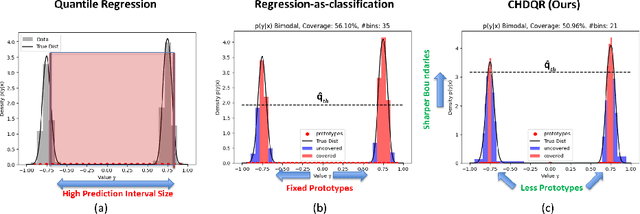

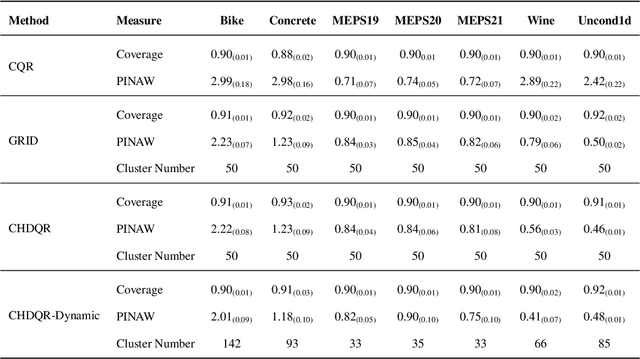

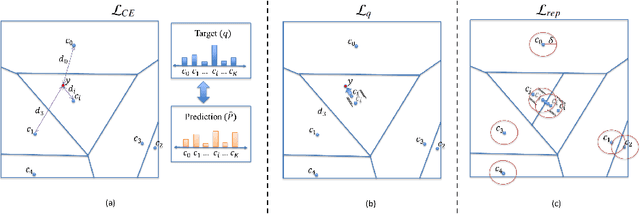

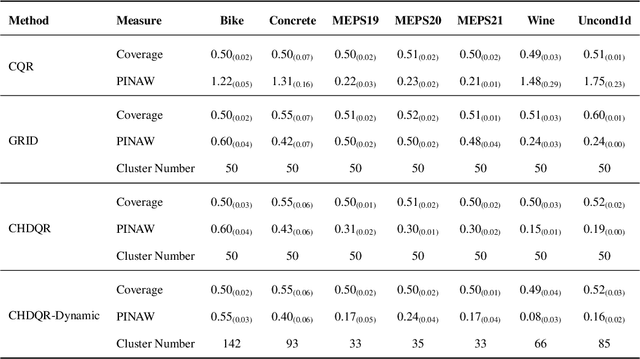

Abstract: Recent methods in quantile regression have adopted a classification perspective to handle challenges posed by heteroscedastic, multimodal, or skewed data by quantizing outputs into fixed bins. Although these regression-as-classification frameworks can capture high-density prediction regions and bypass convex quantile constraints, they are restricted by quantization errors and by the curse of dimensionality that comes from using a constant number of bins per dimension. To address these limitations, we introduce a conformalized high-density quantile regression approach with a dynamically adaptive set of prototypes. Our method optimizes the set of prototypes by adaptively adding, deleting, and relocating quantization bins throughout the training process. Moreover, our conformal scheme provides valid coverage guarantees, focusing on regions with the highest probability density. Experiments across diverse datasets and dimensionalities confirm that our method consistently achieves high-quality prediction regions with enhanced coverage and robustness, while using fewer prototypes and less memory, ensuring scalability to higher dimensions. The code is available at https://github.com/batuceng/max_quantile.

ProtoDiffusion: Classifier-Free Diffusion Guidance with Prototype Learning

Jul 04, 2023

Abstract: Diffusion models are generative models that have shown significant advantages over other generative models in terms of higher generation quality and more stable training. However, training diffusion models is considerably more computationally demanding. In this work, we incorporate prototype learning into diffusion models to achieve high generation quality faster than the original diffusion model. Instead of randomly initialized class embeddings, we use separately learned class prototypes as the conditioning information to guide the diffusion process. We observe that our method, called ProtoDiffusion, achieves better performance in the early stages of training compared to the baseline method, indicating that using the learned prototypes shortens the training time. We demonstrate the performance of ProtoDiffusion using various datasets and experimental settings, achieving the best performance in less time across all settings.

Textile Pattern Generation Using Diffusion Models

Apr 02, 2023

Abstract: Text-guided image generation is a complex task in computer vision, with various applications, including creating visually appealing artwork and realistic product images. One popular solution for this task is the diffusion model, a generative model that produces images through an iterative process. Although diffusion models have demonstrated promising results for various image generation tasks, they do not always produce satisfactory results when applied to more specific domains, such as text-guided generation of textile patterns. To address this issue, this study presents a diffusion model fine-tuned specifically for text-guided textile pattern generation. The study involves collecting various textile pattern images and captioning them with the help of another AI model. The diffusion model is fine-tuned on this newly created dataset, and its results are compared with the baseline models visually and numerically. The results demonstrate that the proposed fine-tuned diffusion model outperforms the baseline models in terms of pattern quality and efficiency in text-guided textile pattern generation. This study presents a promising solution to the problem of text-guided textile pattern generation and has the potential to simplify the design process within the textile industry.
