Haley Owsianko

Image Denoising with Control over Deep Network Hallucination

Jan 02, 2022

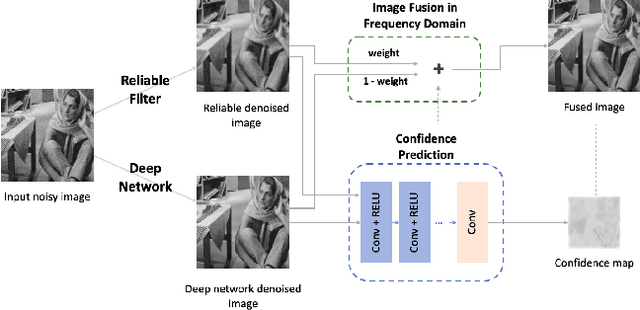

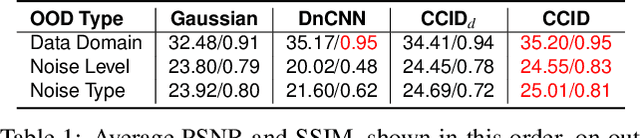

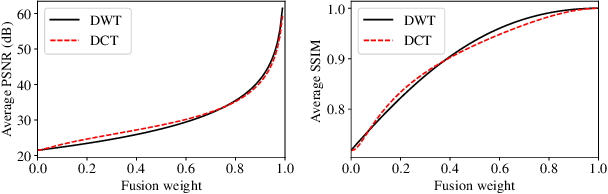

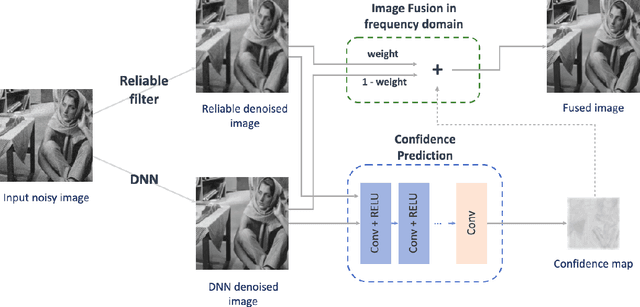

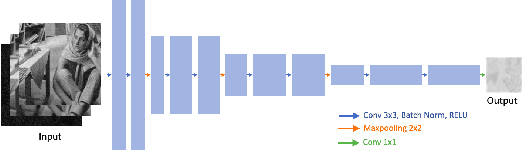

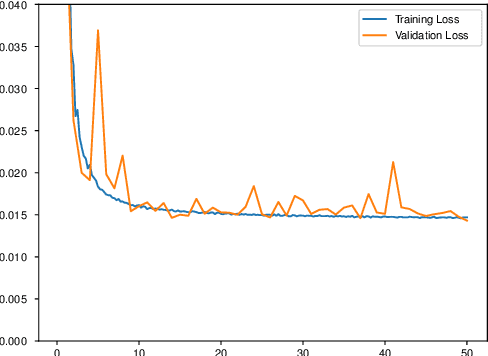

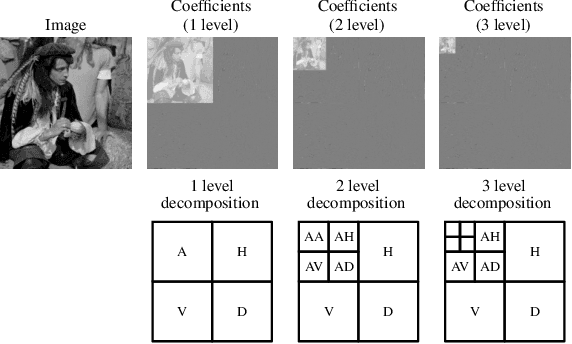

Abstract: Deep image denoisers achieve state-of-the-art results, but at a hidden cost. As recent literature has shown, these deep networks can overfit their training distributions, which causes inaccurate hallucinations to be added to the output and leads to poor generalization on varying data. For better control and interpretability over a deep denoiser, we propose a novel framework that we call controllable confidence-based image denoising (CCID). In this framework, we exploit the outputs of a deep denoising network alongside an image convolved with a reliable filter. Such a filter can be a simple convolution kernel, which does not risk adding hallucinated information. We propose to fuse the two components with a frequency-domain approach that takes into account the reliability of the deep network outputs. With our framework, the user can control the fusion of the two components in the frequency domain. We also provide a user-friendly map that spatially estimates the confidence in the output, indicating where it potentially contains network hallucination. Results show that CCID not only provides more interpretability and control, but can even outperform both the deep denoiser and the reliable filter quantitatively, especially when the test data diverge from the training data.

Controllable Confidence-Based Image Denoising

Jun 17, 2021

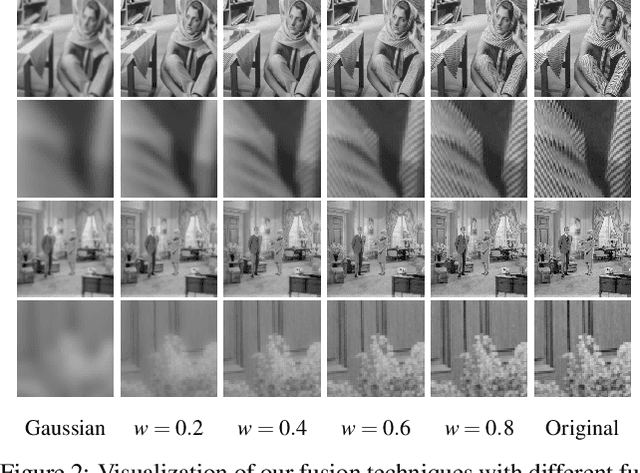

Abstract: Image denoising is a classic restoration problem. Yet current deep learning methods suffer from problems of generalization and interpretability. To mitigate these problems, in this project we present a framework capable of controllable, confidence-based noise removal. The framework is based on the fusion of two different denoised images, both derived from the same noisy input. One of the two is denoised using generic algorithms (e.g., Gaussian filtering), which make few assumptions about the input images and therefore generalize in all scenarios. The other is denoised using deep learning, which performs well on seen datasets. We introduce a set of techniques to fuse the two components smoothly in the frequency domain. Beyond that, we estimate the confidence of a deep learning denoiser to allow users to interpret the output, and provide a fusion strategy that safeguards them against out-of-distribution inputs. Through experiments, we demonstrate the effectiveness of the proposed framework in different use cases.
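The idea of fusing a generically denoised image with a deep-network output in the frequency domain can be illustrated with a minimal NumPy sketch. This is not the authors' fusion rule, which the abstracts do not specify. The function names, the `trust` parameter, the Gaussian-shaped frequency mask, and the choice to down-weight the deep output at high frequencies are all assumptions made purely for illustration.

```python
import numpy as np


def radial_lowpass_mask(shape, cutoff):
    """Gaussian-shaped weight in [0, 1]: 1 at zero frequency, decaying outward.

    Hypothetical helper; `cutoff` is in cycles per pixel.
    """
    fy = np.fft.fftfreq(shape[0])[:, None]
    fx = np.fft.fftfreq(shape[1])[None, :]
    radius_sq = fx ** 2 + fy ** 2
    return np.exp(-radius_sq / (2.0 * cutoff ** 2))


def fuse_in_frequency(reliable, deep, trust, cutoff=0.1):
    """Blend two denoised 2-D images with a per-frequency weight.

    reliable: output of a generic filter (for example, a Gaussian blur).
    deep:     output of a learned denoiser (any array of the same shape).
    trust:    user control in [0, 1]; 0 returns `reliable`, 1 returns `deep`.

    Assumption of this sketch: for intermediate trust, the deep output
    receives weight `trust` at low frequencies and `trust ** 2` at high
    frequencies, so fine detail is taken from it more cautiously.
    """
    if reliable.shape != deep.shape:
        raise ValueError("both images must have the same shape")
    trust = float(np.clip(trust, 0.0, 1.0))

    mask = radial_lowpass_mask(reliable.shape, cutoff)
    # Per-frequency weight of the deep output, always within [0, 1].
    w_deep = trust * (mask + trust * (1.0 - mask))

    spectrum = w_deep * np.fft.fft2(deep) + (1.0 - w_deep) * np.fft.fft2(reliable)
    # The weight is real and symmetric, so the inverse transform is real
    # up to floating-point rounding.
    return np.fft.ifft2(spectrum).real
```

Calling `fuse_in_frequency(reliable, deep, trust=0.5)` would return an image whose mean is the average of the two inputs' means, while high-frequency content leans toward the generic filter. A confidence-driven variant could replace the scalar `trust` with a value estimated from the data, but how that confidence is computed is not described in the abstracts and is not attempted here.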
