Hala Hawashin

Compositional Concept Generalization with Variational Quantum Circuits

Sep 11, 2025

Abstract:Compositional generalization is a key facet of human cognition, but is lacking in current AI tools such as vision-language models. Previous work examined whether a compositional tensor-based sentence semantics can overcome the challenge, but led to negative results. We conjecture that the increased training efficiency of quantum models will improve performance in these tasks. We interpret the representations of compositional tensor-based models in Hilbert spaces and train Variational Quantum Circuits to learn these representations on an image captioning task requiring compositional generalization. We used two image encoding techniques: a multi-hot encoding (MHE) on binary image vectors and an angle/amplitude encoding on image vectors taken from the vision-language model CLIP. We achieved good proof-of-concept results using noisy MHE encodings. Performance on CLIP image vectors was more mixed, but still outperformed classical compositional models.
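As a rough illustration of the two encodings named in the abstract, the sketch below shows the textbook forms of amplitude and angle encoding on a plain feature vector. It is not taken from the paper: the function names `amplitude_encode` and `angle_encode` are hypothetical, the RY-rotation convention for angle encoding is an assumption, and the paper's actual circuits and its handling of CLIP vectors may differ.

```python
import math

def amplitude_encode(vec):
    """Pad to the next power-of-two length and L2-normalize,
    so the entries can serve as amplitudes of an n-qubit state."""
    if not any(vec):
        raise ValueError("cannot amplitude-encode the zero vector")
    dim = 1
    while dim < len(vec):
        dim *= 2
    padded = list(vec) + [0.0] * (dim - len(vec))
    norm = math.sqrt(sum(x * x for x in padded))
    return [x / norm for x in padded]

def angle_encode(vec):
    """Map each feature x to the single-qubit state RY(x)|0>,
    whose amplitudes are (cos(x/2), sin(x/2)). Assumed convention."""
    return [(math.cos(x / 2.0), math.sin(x / 2.0)) for x in vec]

# Three features need a 4-dimensional (2-qubit) amplitude-encoded state.
state = amplitude_encode([3.0, 4.0, 0.0])

# Two features use two qubits, one rotation each.
qubits = angle_encode([0.0, math.pi])
```

Amplitude encoding packs a d-dimensional vector into roughly log2(d) qubits, while angle encoding spends one qubit per feature but only needs single-qubit rotations to prepare.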

Multimodal Structure-Aware Quantum Data Processing

Nov 06, 2024

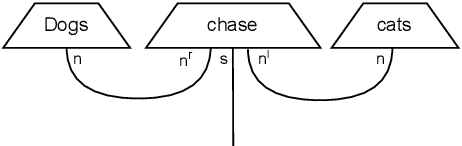

Abstract:While large language models (LLMs) have advanced the field of natural language processing (NLP), their "black box" nature obscures their decision-making processes. To address this, researchers developed structured approaches using higher-order tensors. These are able to model linguistic relations, but stall when training on classical computers due to their excessive size. Tensors are natural inhabitants of quantum systems, and training on quantum computers provides a solution by translating text to variational quantum circuits. In this paper, we develop MultiQ-NLP: a framework for structure-aware data processing with multimodal text+image data. Here, "structure" refers to syntactic and grammatical relationships in language, as well as the hierarchical organization of visual elements in images. We enrich the translation with new types and type homomorphisms and develop novel architectures to represent structure. When tested on a mainstream image classification task (SVO Probes), our best model performed on par with state-of-the-art classical models; moreover, the best model was fully structured.

Multimodal Quantum Natural Language Processing: A Novel Framework for using Quantum Methods to Analyse Real Data

Oct 29, 2024

Abstract:Despite significant advances in quantum computing across various domains, research on applying quantum approaches to language compositionality - such as modeling linguistic structures and interactions - remains limited. This gap extends to the integration of quantum language data with real-world data from sources like images, video, and audio. This thesis explores how quantum computational methods can enhance the compositional modeling of language through multimodal data integration. Specifically, it advances Multimodal Quantum Natural Language Processing (MQNLP) by applying the Lambeq toolkit to conduct a comparative analysis of four compositional models and evaluate their influence on image-text classification tasks. Results indicate that syntax-based models, particularly DisCoCat and TreeReader, excel at capturing grammatical structures, while bag-of-words and sequential models struggle due to limited syntactic awareness. These findings underscore the potential of quantum methods to enhance language modeling and drive breakthroughs as quantum technology evolves.
