Hadi Vafaii

Metabolic cost of information processing in Poisson variational autoencoders

Feb 13, 2026

Abstract:Computation in biological systems is fundamentally energy-constrained, yet standard theories of computation treat energy as freely available. Here, we argue that variational free energy minimization under a Poisson assumption offers a principled path toward an energy-aware theory of computation. Our key observation is that the Kullback-Leibler (KL) divergence term in the Poisson free energy objective becomes proportional to the prior firing rates of model neurons, yielding an emergent metabolic cost term that penalizes high baseline activity. This structure couples an abstract information-theoretic quantity -- the *coding rate* -- to a concrete biophysical variable -- the *firing rate* -- which enables a trade-off between coding fidelity and energy expenditure. Such a coupling arises naturally in the Poisson variational autoencoder (P-VAE) -- a brain-inspired generative model that encodes inputs as discrete spike counts and recovers a spiking form of *sparse coding* as a special case -- but is absent from standard Gaussian VAEs. To demonstrate that this metabolic cost structure is unique to the Poisson formulation, we compare the P-VAE against Grelu-VAE, a Gaussian VAE with ReLU rectification applied to latent samples, which controls for the non-negativity constraint. Across a systematic sweep of the KL term weighting coefficient $β$ and latent dimensionality, we find that increasing $β$ monotonically increases sparsity and reduces average spiking activity in the P-VAE. In contrast, Grelu-VAE representations remain unchanged, confirming that the effect is specific to Poisson statistics rather than a byproduct of non-negative representations. These results establish Poisson variational inference as a promising foundation for a resource-constrained theory of computation.
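The proportionality between the Poisson KL term and the prior firing rate can be checked numerically. The sketch below is not taken from the paper's code. It assumes the posterior rate is written as the prior rate times a multiplicative gain (the names `prior_rate`, `gain`, and `poisson_kl` are illustrative), and uses the standard closed form KL(Pois(λ) || Pois(r)) = λ log(λ/r) - λ + r.

```python
import numpy as np

def poisson_kl(prior_rate, gain):
    """KL( Pois(prior_rate * gain) || Pois(prior_rate) ), elementwise.

    Assumption for illustration: the posterior rate is prior_rate * gain.
    Substituting lambda = r * g into lambda*log(lambda/r) - lambda + r
    gives r * (1 - g + g*log(g)), i.e. the prior rate times a function of g.
    """
    prior_rate = np.asarray(prior_rate, dtype=float)
    gain = np.asarray(gain, dtype=float)
    # f(g) is non-negative and vanishes only at g = 1 (posterior == prior).
    f = 1.0 - gain + gain * np.log(gain)
    return prior_rate * f

# Same gain, ten-fold higher baseline rate: the KL cost is ten times larger.
kl_low = poisson_kl(prior_rate=1.0, gain=2.0)
kl_high = poisson_kl(prior_rate=10.0, gain=2.0)
```

Under this parametrization, encoding the same relative change in rate costs more when baseline activity is high, which is one way to read the "metabolic cost" interpretation in the abstract.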

A Hitchhiker's Guide to Poisson Gradient Estimation

Feb 03, 2026

Abstract:Poisson-distributed latent variable models are widely used in computational neuroscience, but differentiating through discrete stochastic samples remains challenging. Two approaches address this: Exponential Arrival Time (EAT) simulation and Gumbel-SoftMax (GSM) relaxation. We provide the first systematic comparison of these methods, along with practical guidance for practitioners. Our main technical contribution is a modification to the EAT method that theoretically guarantees an unbiased first moment (exactly matching the firing rate), and reduces second-moment bias. We evaluate these methods on their distributional fidelity, gradient quality, and performance on two tasks: (1) variational autoencoders with Poisson latents, and (2) partially observable generalized linear models, where latent neural connectivity must be inferred from observed spike trains. Across all metrics, our modified EAT method exhibits better overall performance (often comparable to exact gradients), and substantially higher robustness to hyperparameter choices. Together, our results clarify the trade-offs between these methods and offer concrete recommendations for practitioners working with Poisson latent variable models.
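For orientation, the basic idea behind arrival-time simulation can be sketched in a few lines: a Poisson count is the number of exponential inter-arrival times whose running sum falls inside a unit window. The code below is a generic illustration and not the paper's modified EAT method. The function name, the `n_exp` truncation, and the sigmoid relaxation controlled by `temperature` are assumptions made for this example.

```python
import numpy as np

def poisson_via_arrival_times(rate, n_exp, rng, temperature=0.0):
    """Count arrivals of a rate-`rate` Poisson process in the window [0, 1).

    n_exp caps the number of simulated arrivals (an assumed truncation), so
    it should sit well above the largest rate. With temperature > 0 the hard
    indicator is replaced by a sigmoid, giving a smooth, non-integer count.
    """
    rate = np.asarray(rate, dtype=float)
    # Inter-arrival gaps are Exp(rate): draw Exp(1) and rescale by 1/rate.
    gaps = rng.exponential(scale=1.0, size=(n_exp,) + rate.shape) / rate
    arrival = np.cumsum(gaps, axis=0)
    if temperature == 0.0:
        inside = (arrival < 1.0).astype(float)
    else:
        # Numerically stable sigmoid((1 - arrival) / temperature).
        inside = 0.5 * (1.0 + np.tanh(0.5 * (1.0 - arrival) / temperature))
    return inside.sum(axis=0)

rng = np.random.default_rng(0)
samples = poisson_via_arrival_times(np.full(20000, 3.0), n_exp=40, rng=rng)
```

With the hard indicator, the sample mean and variance should both sit close to the rate, as expected for a Poisson variable. The relaxed version trades that exactness for differentiability with respect to the rate in an autodiff framework.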

Inferring response times of perceptual decisions with Poisson variational autoencoders

Nov 14, 2025

Abstract:Many properties of perceptual decision making are well-modeled by deep neural networks. However, such architectures typically treat decisions as instantaneous readouts, overlooking the temporal dynamics of the decision process. We present an image-computable model of perceptual decision making in which choices and response times arise from efficient sensory encoding and Bayesian decoding of neural spiking activity. We use a Poisson variational autoencoder to learn unsupervised representations of visual stimuli in a population of rate-coded neurons, modeled as independent homogeneous Poisson processes. A task-optimized decoder then continually infers an approximate posterior over actions conditioned on incoming spiking activity. Combining these components with an entropy-based stopping rule yields a principled and image-computable model of perceptual decisions capable of generating trial-by-trial patterns of choices and response times. Applied to MNIST digit classification, the model reproduces key empirical signatures of perceptual decision making, including stochastic variability, right-skewed response time distributions, logarithmic scaling of response times with the number of alternatives (Hick's law), and speed-accuracy trade-offs.
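A toy version of the decision loop described above can be sketched as follows. It is an illustration under strong assumptions and not the paper's model: the decoder here is an exact Bayesian classifier with known, hand-picked class-conditional firing rates (the hypothetical `rates` table) rather than a learned, task-optimized decoder, and `dt` and `entropy_threshold` are arbitrary values.

```python
import numpy as np

def decide(rates, true_class, dt, entropy_threshold, rng, max_steps=10_000):
    """Accumulate Poisson spikes until posterior entropy drops below threshold.

    rates: (n_classes, n_neurons) strictly positive firing rates in Hz
           (a hypothetical tuning table, assumed known to the decoder).
    Returns (chosen class, response time in seconds).
    """
    n_classes = rates.shape[0]
    log_post = np.full(n_classes, -np.log(n_classes))  # uniform prior
    for step in range(1, max_steps + 1):
        # Spikes emitted in this time bin by the population for the true class.
        spikes = rng.poisson(rates[true_class] * dt)
        # Poisson log-likelihood under each class; log(k!) is class-independent.
        log_post += spikes @ np.log(rates * dt).T - (rates * dt).sum(axis=1)
        log_post -= np.logaddexp.reduce(log_post)  # renormalize
        post = np.exp(log_post)
        entropy = -(post * log_post).sum()  # in nats
        if entropy < entropy_threshold:
            break
    return int(np.argmax(post)), step * dt

rng = np.random.default_rng(0)
rates = np.array([[20.0, 5.0, 5.0],
                  [5.0, 20.0, 5.0],
                  [5.0, 5.0, 20.0]])
choice, rt = decide(rates, true_class=1, dt=0.01, entropy_threshold=0.05, rng=rng)
```

In this sketch, lowering `entropy_threshold` demands more evidence before committing, which lengthens response times and raises accuracy, a simple form of speed-accuracy trade-off.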

Unveiling Secrets of Brain Function With Generative Modeling: Motion Perception in Primates & Cortical Network Organization in Mice

Dec 25, 2024

Abstract:This Dissertation comprises two main projects, addressing questions in neuroscience through applications of generative modeling. Project #1 (Chapter 4) explores how neurons encode features of the external world. I combine Helmholtz's "Perception as Unconscious Inference" -- paralleled by modern generative models like variational autoencoders (VAE) -- with the hierarchical structure of the visual cortex. This combination leads to the development of a hierarchical VAE model, which I test for its ability to mimic neurons from the primate visual cortex in response to motion stimuli. Results show that the hierarchical VAE perceives motion similar to the primate brain. Additionally, the model identifies causal factors of retinal motion inputs, such as object- and self-motion, in a completely unsupervised manner. Collectively, these results suggest that hierarchical inference underlies the brain's understanding of the world, and hierarchical VAEs can effectively model this understanding. Project #2 (Chapter 5) investigates the spatiotemporal structure of spontaneous brain activity and its reflection of brain states like rest. Using simultaneous fMRI and wide-field Ca2+ imaging data, this project demonstrates that the mouse cortex can be decomposed into overlapping communities, with around half of the cortical regions belonging to multiple communities. Comparisons reveal similarities and differences between networks inferred from fMRI and Ca2+ signals. The introduction (Chapter 1) is divided similarly to this abstract: sections 1.1 to 1.8 provide background information about Project #1, and sections 1.9 to 1.13 are related to Project #2. Chapter 2 includes historical background, Chapter 3 provides the necessary mathematical background, and finally, Chapter 6 contains concluding remarks and future directions.

A prescriptive theory for brain-like inference

Oct 25, 2024

Abstract:The Evidence Lower Bound (ELBO) is a widely used objective for training deep generative models, such as Variational Autoencoders (VAEs). In the neuroscience literature, an identical objective is known as the variational free energy, hinting at a potential unified framework for brain function and machine learning. Despite its utility in interpreting generative models, including diffusion models, ELBO maximization is often seen as too broad to offer prescriptive guidance for specific architectures in neuroscience or machine learning. In this work, we show that maximizing ELBO under Poisson assumptions for general sequence data leads to a spiking neural network that performs Bayesian posterior inference through its membrane potential dynamics. The resulting model, the iterative Poisson VAE (iP-VAE), has a closer connection to biological neurons than previous brain-inspired predictive coding models based on Gaussian assumptions. Compared to amortized and iterative VAEs, iP-VAE learns sparser representations and exhibits superior generalization to out-of-distribution samples. These findings suggest that optimizing ELBO, combined with Poisson assumptions, provides a solid foundation for developing prescriptive theories in NeuroAI.

Poisson Variational Autoencoder

May 23, 2024

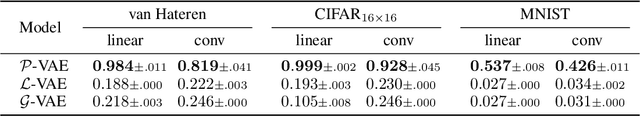

Abstract:Variational autoencoders (VAE) employ Bayesian inference to interpret sensory inputs, mirroring processes that occur in primate vision across both ventral (Higgins et al., 2021) and dorsal (Vafaii et al., 2023) pathways. Despite their success, traditional VAEs rely on continuous latent variables, which deviates sharply from the discrete nature of biological neurons. Here, we developed the Poisson VAE (P-VAE), a novel architecture that combines principles of predictive coding with a VAE that encodes inputs into discrete spike counts. Combining Poisson-distributed latent variables with predictive coding introduces a metabolic cost term in the model loss function, suggesting a relationship with sparse coding which we verify empirically. Additionally, we analyze the geometry of learned representations, contrasting the P-VAE to alternative VAE models. We find that the P-VAE encodes its inputs in relatively higher dimensions, facilitating linear separability of categories in a downstream classification task with a much better (5x) sample efficiency. Our work provides an interpretable computational framework to study brain-like sensory processing and paves the way for a deeper understanding of perception as an inferential process.
