Gyuri Han

Smart Home Appliances: Chat with Your Fridge

Dec 19, 2019

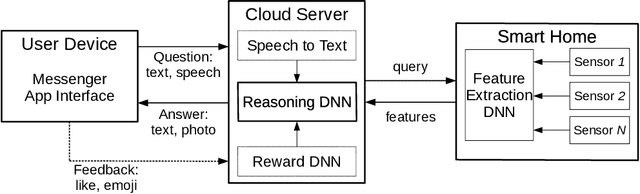

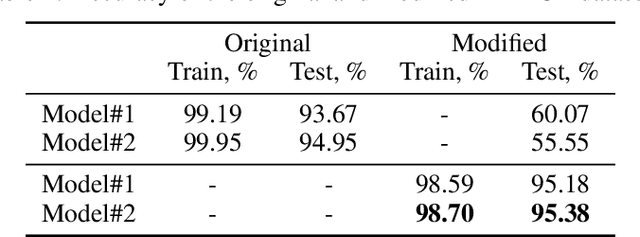

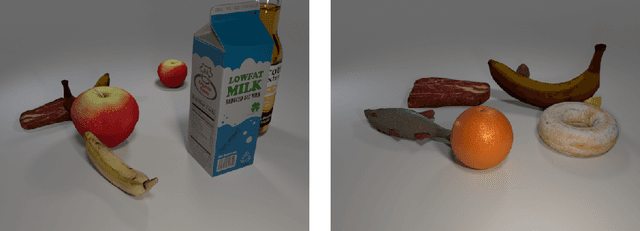

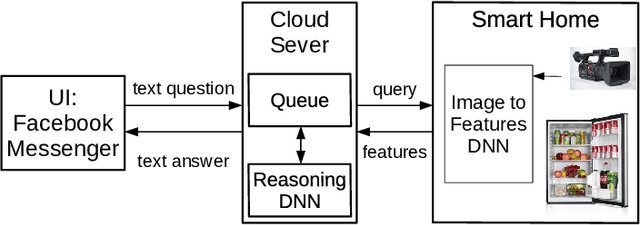

Abstract: Current home appliances can execute only a limited number of voice commands, such as turning devices on or off or adjusting music volume and lighting. Recent progress in machine reasoning offers an opportunity to develop new types of conversational user interfaces for home appliances. In this paper, we apply a state-of-the-art visual reasoning model and demonstrate that it is feasible to ask a smart fridge about its contents and various properties of the food, with a close-to-natural conversational experience. Our visual reasoning model answers user questions about the existence, count, category, and freshness of each product by analyzing photos taken by the image sensor inside the smart fridge. Users may chat with their fridge through an off-the-shelf phone messenger while away from home, for example when shopping in the supermarket. We generate a visually realistic synthetic dataset to train a machine learning reasoning model that achieves 95% answer accuracy on test data. We present the results of initial user tests and discuss how we modify the distribution of generated questions for model training based on human-in-the-loop guidance. We open-source the code for the whole system, including dataset generation, the reasoning model, and demonstration scripts.
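To make the idea of generating questions with an adjustable distribution concrete, the following is a minimal sketch and not the authors' released code. The scene format, the question templates, the `QUESTION_WEIGHTS` values, and all function names are assumptions introduced for illustration; only the four question types (existence, count, category, freshness) come from the abstract.

```python
import random

# Hypothetical synthetic scene: each item has a category and a freshness flag.
SCENE = [
    {"category": "apple", "fresh": True},
    {"category": "apple", "fresh": False},
    {"category": "milk", "fresh": True},
]

# Hypothetical sampling weights per question type. Raising a weight makes that
# question type more frequent in the generated training set, which is one way
# feedback from user tests could be folded back into data generation.
QUESTION_WEIGHTS = {"existence": 1.0, "count": 1.0, "category": 1.0, "freshness": 2.0}

# Hypothetical question templates, one per question type.
TEMPLATES = {
    "existence": "Is there any {c} in the fridge?",
    "count": "How many {c} items are in the fridge?",
    "category": "What is in the fridge?",
    "freshness": "Is the {c} fresh?",
}


def answer(scene, qtype, category):
    """Compute the ground-truth answer directly from the scene description."""
    items = [it for it in scene if it["category"] == category]
    if qtype == "existence":
        return "yes" if items else "no"
    if qtype == "count":
        return str(len(items))
    if qtype == "category":
        # Lists every category present; the `category` argument is unused here.
        return ", ".join(sorted({it["category"] for it in scene}))
    if qtype == "freshness":
        if not items:
            return "none"
        return "yes" if all(it["fresh"] for it in items) else "no"
    raise ValueError(f"unknown question type: {qtype}")


def sample_qa(scene, weights, vocabulary, rng):
    """Draw one (question, answer) pair with question types sampled by weight."""
    qtype = rng.choices(list(weights), weights=list(weights.values()), k=1)[0]
    category = rng.choice(vocabulary)
    return TEMPLATES[qtype].format(c=category), answer(scene, qtype, category)


if __name__ == "__main__":
    rng = random.Random(0)
    for _ in range(5):
        print(sample_qa(SCENE, QUESTION_WEIGHTS, ["apple", "milk", "cheese"], rng))
```

In this sketch the answers are derived from the scene description rather than from rendered images; in a real pipeline the same description would also drive image rendering, so that each image is paired with consistent questions and answers.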
