Guik Jung

Masked Deep Q-Recommender for Effective Question Scheduling

Dec 19, 2021

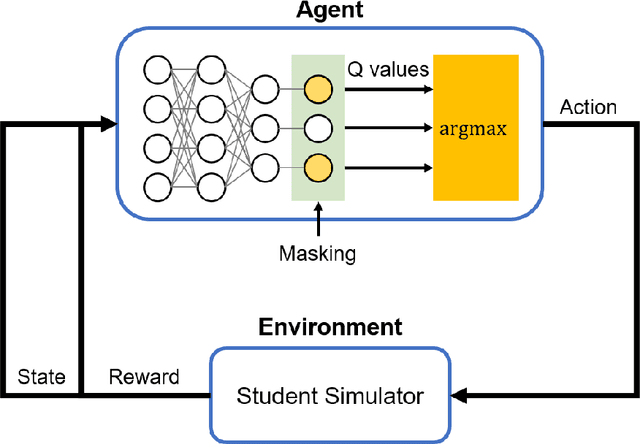

Abstract: Providing appropriate questions according to a student's knowledge level is imperative in personalized learning. However, it requires a lot of manual effort for teachers to understand students' knowledge status and provide optimal questions accordingly. To address this problem, we introduce a question scheduling model that can effectively boost student knowledge level using Reinforcement Learning (RL). Our proposed method first evaluates students' concept-level knowledge using a knowledge tracing (KT) model. Given the predicted student knowledge, an RL-based recommender predicts the benefit of each question. With a curriculum range restriction and a duplicate penalty, the recommender selects questions sequentially until it reaches the predefined number of questions. In an experimental setting using a student simulator, which gives 20 questions per day for two weeks, questions recommended by the proposed method increased the average student knowledge level by 21.3%, compared with a 10% increase for an expert-designed schedule baseline.
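The selection step described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `select_questions`, the fixed per-question benefit scores, and the subtractive form of the duplicate penalty are all assumptions made for illustration. In the paper the scores would come from the RL-based recommender conditioned on the KT model's output.

```python
from typing import List, Sequence


def select_questions(
    q_values: Sequence[float],
    in_curriculum: Sequence[bool],
    n_questions: int,
    duplicate_penalty: float = 1.0,
) -> List[int]:
    """Pick question indices one at a time from masked benefit scores.

    q_values          -- hypothetical predicted benefit of each question
    in_curriculum     -- True where a question lies in the allowed range
    duplicate_penalty -- assumed amount subtracted each time a question
                         is chosen, which discourages repeats
    """
    if not any(in_curriculum):
        raise ValueError("the curriculum mask excludes every question")

    # Curriculum range restriction: out-of-range questions can never win.
    scores = [
        q if allowed else float("-inf")
        for q, allowed in zip(q_values, in_curriculum)
    ]

    chosen: List[int] = []
    for _ in range(n_questions):
        best = max(range(len(scores)), key=scores.__getitem__)
        chosen.append(best)
        # Duplicate penalty: lower the score of the question just picked.
        scores[best] -= duplicate_penalty
    return chosen


q = [0.9, 0.5, 0.8, 0.7]
mask = [True, True, False, True]
print(select_questions(q, mask, n_questions=3))
print(select_questions(q, mask, n_questions=3, duplicate_penalty=0.3))
```

With a large penalty the sketch never repeats a question while unused in-range questions remain; with a small penalty a high-benefit question can be chosen again. Question 2 is never chosen because the mask excludes it.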

Diagnostic Assessment Generation via Combinatorial Search

Dec 06, 2021

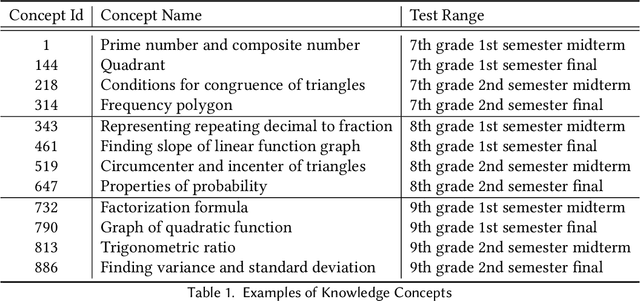

Abstract: Initial assessment tests are crucial in capturing learner knowledge states in a consistent manner. Aside from crafting the questions themselves, putting together relevant problems to form a question sheet is also a time-consuming process. In this work, we present a generic formulation of question assembly and a genetic-algorithm-based method that can generate assessment tests from raw problem-solving history. First, we estimate the learner-question knowledge matrix (snapshot). Each matrix element stands for the probability that a learner correctly answers a specific question. We formulate the task as a combinatorial search over this snapshot. To ensure representative and discriminative diagnostic tests, questions are selected to have (1) a low root mean squared error against the whole question pool and (2) a high standard deviation among learner performances. Experimental results show that the proposed method outperforms greedy and random baselines by a large margin on one private dataset and four public datasets. We also performed a qualitative analysis of the generated assessment test for 9th graders, which shows good problem coverage across the whole 9th-grade curriculum and a reasonable distribution of difficulty levels.
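A rough sketch of the two selection criteria and a small genetic search over the snapshot follows. The abstract does not say how the criteria are combined or which genetic operators are used, so the objective `weight * spread - rmse`, the helper names (`fitness`, `mutate`, `crossover`, `assemble_sheet`), and all hyperparameters are assumptions chosen for illustration only.

```python
import random
from statistics import mean, pstdev
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]  # rows: learners, columns: questions


def fitness(snapshot: Matrix, subset: Sequence[int], weight: float = 1.0) -> float:
    """Higher is better: a discriminative sheet that still tracks the pool."""
    pool_scores = [mean(row) for row in snapshot]
    sheet_scores = [mean(row[q] for q in subset) for row in snapshot]
    # (1) Representative: low RMSE between sheet and whole-pool scores.
    rmse = mean((s - p) ** 2 for s, p in zip(sheet_scores, pool_scores)) ** 0.5
    # (2) Discriminative: high standard deviation across learners.
    spread = pstdev(sheet_scores)
    return weight * spread - rmse  # assumed way of combining the two


def mutate(subset: Sequence[int], n_questions: int, rng: random.Random) -> List[int]:
    """Swap one selected question for a currently unused one."""
    child = list(subset)
    unused = [q for q in range(n_questions) if q not in child]
    if unused:
        child[rng.randrange(len(child))] = rng.choice(unused)
    return child


def crossover(a: Sequence[int], b: Sequence[int], size: int, rng: random.Random) -> List[int]:
    """Draw a child sheet from the union of two parents, without repeats."""
    merged = list(dict.fromkeys(list(a) + list(b)))
    return rng.sample(merged, size)


def assemble_sheet(snapshot: Matrix, size: int, generations: int = 50,
                   population: int = 20, seed: int = 0) -> List[int]:
    rng = random.Random(seed)
    n_questions = len(snapshot[0])
    pop = [rng.sample(range(n_questions), size) for _ in range(population)]
    for _ in range(generations):
        pop.sort(key=lambda s: fitness(snapshot, s), reverse=True)
        elite = pop[: population // 2]  # keep the better half unchanged
        children = [
            mutate(crossover(rng.choice(elite), rng.choice(elite), size, rng),
                   n_questions, rng)
            for _ in range(population - len(elite))
        ]
        pop = elite + children
    return sorted(max(pop, key=lambda s: fitness(snapshot, s)))


# Toy snapshot: 3 learners x 4 questions of predicted correctness.
snapshot = [
    [1.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
    [1.0, 1.0, 1.0, 1.0],
]
print(assemble_sheet(snapshot, size=2))
```

In the toy snapshot, the sheet `[0, 1]` reproduces every learner's whole-pool score exactly, so its RMSE term is zero, whereas `[0, 2]` overestimates the first learner and scores lower under this assumed objective.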
