Grey Kuling

BI-RADS BERT & Using Section Tokenization to Understand Radiology Reports

Oct 14, 2021

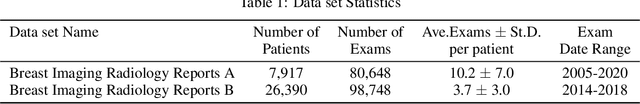

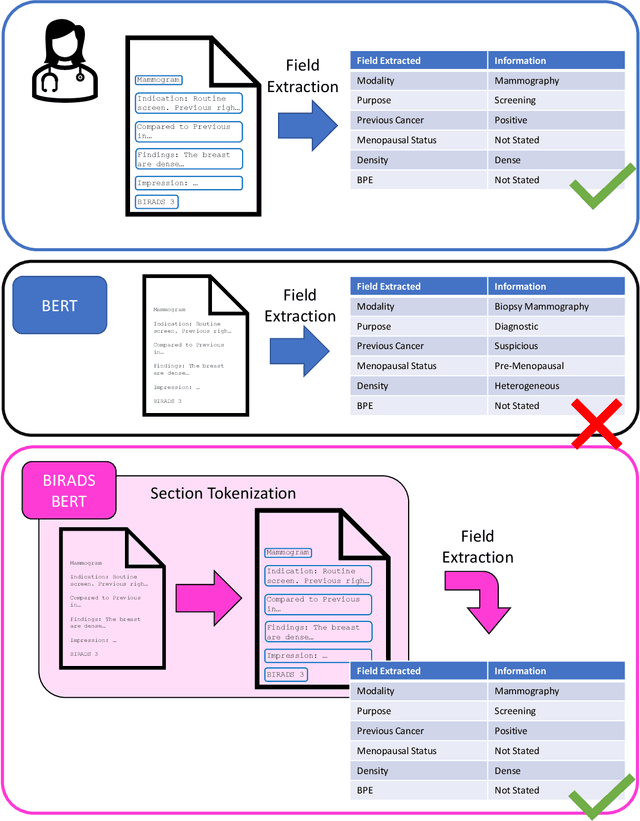

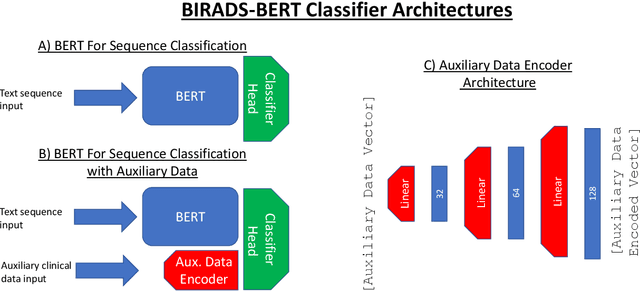

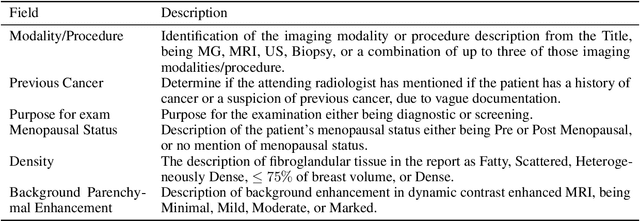

Abstract: Radiology reports are the main form of communication between radiologists and other clinicians, and contain important information for patient care. However, to use this information for research, the raw text must be converted into structured data suitable for analysis. Domain-specific contextual word embeddings have been shown to achieve impressive accuracy at such natural language processing tasks in medicine. In this work, we pre-trained a contextual embedding BERT model on breast radiology reports and developed a classifier that combines the embedding with auxiliary global textual features to perform a section tokenization task. This model achieved 98% accuracy at segregating free-text reports into the sections of information outlined in the Breast Imaging Reporting and Data System (BI-RADS) lexicon, a significant improvement over the Classic BERT model without auxiliary information. We then evaluated whether section tokenization improved the downstream extraction of the following fields: modality/procedure, previous cancer, menopausal status, purpose of exam, breast density, and background parenchymal enhancement. Using the BERT model pre-trained on breast radiology reports combined with section tokenization resulted in an overall field extraction accuracy of 95.9%. This is an improvement of 17 percentage points over the overall accuracy of 78.9% obtained by models without section tokenization and with Classic BERT embeddings. Our work shows the strength of using BERT in radiology report analysis and the advantages of section tokenization in identifying key features of patient factors recorded in breast radiology reports.
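The idea of pairing a per-sentence embedding with auxiliary global features can be sketched as follows. This is only an illustration under stated assumptions: the abstract does not say which global features were used, so relative position and relative length are hypothetical choices, and `toy_embedding` is a placeholder for a real BERT embedding, not the authors' model.

```python
from typing import List, Sequence


def toy_embedding(sentence: str, dim: int = 4) -> List[float]:
    """Hypothetical stand-in for a contextual sentence embedding (NOT BERT)."""
    vec = [0.0] * dim
    for i, ch in enumerate(sentence.lower()):
        vec[i % dim] += ord(ch) / 1000.0
    return vec


def global_features(index: int, sentences: Sequence[str]) -> List[float]:
    """Assumed auxiliary features: where the sentence sits in the report
    (0.0 = first, 1.0 = last) and its length relative to the longest one."""
    n = len(sentences)
    position = index / (n - 1) if n > 1 else 0.0
    longest = max(len(s) for s in sentences)
    rel_len = len(sentences[index]) / longest if longest else 0.0
    return [position, rel_len]


def build_inputs(sentences: Sequence[str]) -> List[List[float]]:
    """Concatenate each sentence embedding with its global features.
    A section classifier would take these vectors as input."""
    return [
        toy_embedding(s) + global_features(i, sentences)
        for i, s in enumerate(sentences)
    ]


# Made-up example report, one sentence per line.
report = [
    "Bilateral breast MRI with contrast.",
    "Screening, high risk patient.",
    "Heterogeneously dense fibroglandular tissue.",
    "BI-RADS 1: negative.",
]
features = build_inputs(report)
```

Each row of `features` holds the embedding followed by the two global values, so a downstream classifier can use both what a sentence says and where it appears in the report.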

Intensity augmentation for domain transfer of whole breast segmentation in MRI

Sep 05, 2019

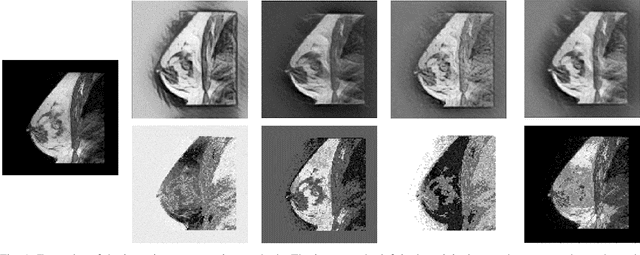

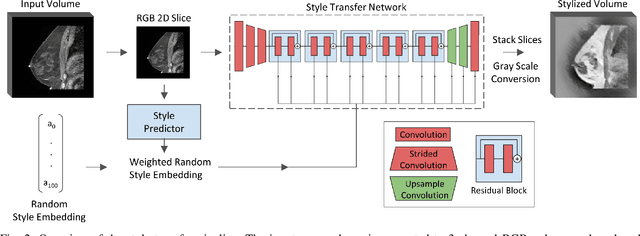

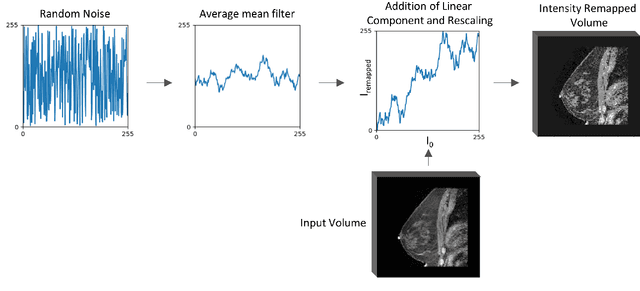

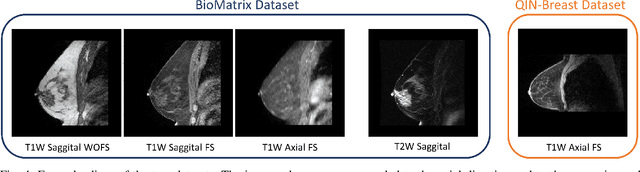

Abstract: The segmentation of the breast from the chest wall is an important first step in the analysis of breast magnetic resonance images. 3D U-nets have been shown to obtain high segmentation accuracy and appear to generalize well when trained on one scanner type and tested on another scanner, provided that a very similar T1-weighted MR protocol is used. There has, however, been little work addressing the problem of domain adaptation when image intensities or patient orientation differ markedly between the training set and an unseen test set. To overcome the domain shift, we propose to apply extensive intensity augmentation in addition to geometric augmentation during training. We explored both style transfer and a novel intensity remapping approach as intensity augmentation strategies. For our experiments, we trained a 3D U-net on T1-weighted scans and tested on T2-weighted scans. By applying intensity augmentation, we increased segmentation performance from a Dice similarity coefficient (DSC) of 0.71 to 0.90. This performance is very close to the baseline performance of training and testing on T2-weighted scans (0.92). Furthermore, we applied our network to an independent test set made up of publicly available scans acquired using a T1-weighted TWIST sequence and a different coil configuration. On this dataset, we obtained a performance of 0.89, close to the inter-observer variability of the ground truth segmentations (0.92). Our results show that using intensity augmentation in addition to geometric augmentation is a suitable method to overcome the intensity domain shift, and we expect it to be useful for a wide range of segmentation tasks.
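For readers unfamiliar with the metric and the augmentation idea, a minimal sketch follows. The Dice function uses the standard definition, DSC = 2|A ∩ B| / (|A| + |B|). The remapping function is a generic random piecewise-linear intensity curve chosen for illustration; the abstract does not describe the authors' remapping method, so `random_intensity_remap` and its `n_knots` parameter are assumptions, not their implementation.

```python
import random
from typing import List, Optional, Sequence


def dice(pred: Sequence[int], truth: Sequence[int]) -> float:
    """Dice similarity coefficient for two flattened binary masks."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of voxels")
    overlap = sum(1 for p, t in zip(pred, truth) if p and t)
    total = sum(1 for p in pred if p) + sum(1 for t in truth if t)
    # Two empty masks agree perfectly by convention.
    return 1.0 if total == 0 else 2.0 * overlap / total


def random_intensity_remap(
    voxels: Sequence[float],
    n_knots: int = 4,
    rng: Optional[random.Random] = None,
) -> List[float]:
    """Pass intensities in [0, 1] through a random piecewise-linear curve.
    Illustrative stand-in only, not the remapping used in the paper."""
    if n_knots < 2:
        raise ValueError("need at least two knots")
    rng = rng or random.Random()
    xs = [i / (n_knots - 1) for i in range(n_knots)]  # evenly spaced inputs
    ys = [rng.random() for _ in range(n_knots)]       # random output levels
    out = []
    for v in voxels:
        v = min(max(v, 0.0), 1.0)                     # clamp to [0, 1]
        k = min(int(v * (n_knots - 1)), n_knots - 2)  # segment index
        t = (v - xs[k]) / (xs[k + 1] - xs[k])         # position in segment
        out.append(ys[k] + t * (ys[k + 1] - ys[k]))
    return out


score = dice([1, 1, 0, 0], [1, 0, 0, 0])  # overlap 1, sizes 2 and 1
augmented = random_intensity_remap([0.0, 0.25, 0.5, 1.0], rng=random.Random(0))
```

Because the knot values are drawn independently, the curve need not be monotonic and can invert the relative brightness of tissues. In a training loop, a fresh curve would be drawn for each sample while the segmentation mask is left unchanged.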
