Graeme Glass

CleftGAN: Adapting A Style-Based Generative Adversarial Network To Create Images Depicting Cleft Lip Deformity

Oct 12, 2023

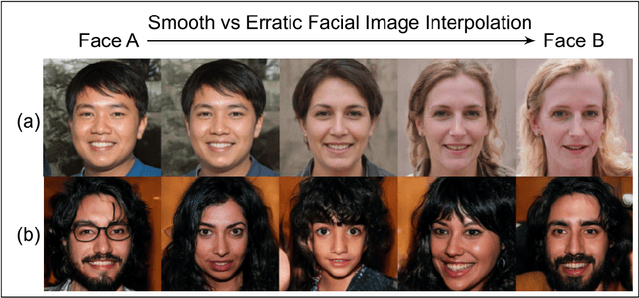

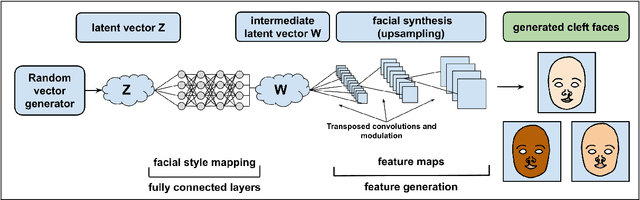

Abstract: A major obstacle when attempting to train a machine learning system to evaluate facial clefts is the scarcity of large datasets of high-quality, ethics board-approved patient images. In response, we have built a deep learning-based cleft lip generator designed to produce an almost unlimited number of artificial images exhibiting high-fidelity facsimiles of cleft lip with wide variation. We undertook a transfer learning protocol testing different versions of StyleGAN-ADA (a generative adversarial network image generator incorporating adaptive data augmentation (ADA)) as the base model. Training images depicting a variety of cleft deformities were pre-processed by correcting rotation and scale, adjusting color and blurring the background. The ADA modification of the primary algorithm permitted construction of our new generative model while requiring input of a relatively small number of training images. Adversarial training was carried out using 514 unique frontal photographs of cleft-affected faces to adapt a pre-trained model based on 70,000 normal faces. The Fréchet Inception Distance (FID) was used to measure the similarity of the newly generated facial images to the cleft training dataset, while Perceptual Path Length (PPL) and the novel Divergence Index of Severity Histograms (DISH) measures were also used to assess the performance of the image generator that we dub CleftGAN. We found that StyleGAN3 with translation invariance (StyleGAN3-t) performed optimally as a base model. Generated images achieved a low FID, reflecting a close similarity to our training input dataset of genuine cleft images. Low PPL and DISH measures reflected, respectively, a smooth and semantically valid interpolation of images through the transfer learning process and a similar distribution of severity in the training and generated images.
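To make the FID comparison concrete, the following is a minimal sketch of the Fréchet distance between two sets of feature vectors, each modelled as a Gaussian with its own mean and covariance. It is not the authors' evaluation code. In a real FID computation the features would come from a pre-trained Inception network applied to the training and generated images; here the function name `frechet_distance` and the random arrays standing in for those features are assumptions made purely for illustration.

```python
import numpy as np


def frechet_distance(feats_a, feats_b):
    """Frechet distance between Gaussians fitted to two feature sets.

    feats_a, feats_b: 2-D arrays of shape (n_samples, n_features) with
    n_features >= 2. These are hypothetical stand-ins for Inception
    features of real and generated images.
    """
    mu_a, mu_b = feats_a.mean(axis=0), feats_b.mean(axis=0)
    cov_a = np.cov(feats_a, rowvar=False)
    cov_b = np.cov(feats_b, rowvar=False)

    # Tr(sqrt(cov_a @ cov_b)) equals the sum of the square roots of the
    # eigenvalues of the product, which are real and non-negative for
    # positive semi-definite inputs. Clip to guard against round-off.
    eigvals = np.linalg.eigvals(cov_a @ cov_b)
    trace_sqrt = np.sqrt(np.clip(eigvals.real, 0.0, None)).sum()

    diff = mu_a - mu_b
    return float(diff @ diff + np.trace(cov_a) + np.trace(cov_b) - 2.0 * trace_sqrt)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    real = rng.normal(size=(200, 4))       # placeholder "training" features
    fake = real + 0.1 * rng.normal(size=(200, 4))  # placeholder "generated" features
    print(frechet_distance(real, fake))
```

A lower value means the two feature distributions are closer, which is the sense in which a low FID indicates that generated images resemble the training set.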
