Gordon Plotkin

Unraveling the iterative CHAD

May 21, 2025

Abstract: Combinatory Homomorphic Automatic Differentiation (CHAD) was originally formulated as a semantics-driven source transformation for reverse-mode AD in total programming languages. We extend this framework to partial languages with features such as potentially non-terminating operations, real-valued conditionals, and iteration constructs like while-loops, while preserving CHAD's structure-preserving semantics principle. A key contribution is the introduction of iteration-extensive indexed categories, which allow iteration in the base category to lift to parameterized initial algebras in the indexed category. This enables iteration to be interpreted in the Grothendieck construction of the target language in a principled way. The resulting fibred iterative structure cleanly models iteration in the categorical semantics. Consequently, the extended CHAD transformation remains the unique structure-preserving functor (an iterative Freyd category morphism) from the freely generated iterative Freyd category of the source language to the Grothendieck construction of the target's syntactic semantics, mapping each primitive operation to its derivative. We prove the correctness of this transformation using the universal property of the source language's syntax, showing that the transformed programs compute correct reverse-mode derivatives. Our development also contributes to understanding iteration constructs within dependently typed languages and categories of containers. As our primary motivation and application, we generalize CHAD to languages with data types, partial features, and iteration, providing the first rigorous categorical semantics for reverse-mode CHAD in such settings and formally guaranteeing the correctness of the source-to-source CHAD technique.
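As a rough illustration of what reverse-mode differentiation of an iteration construct involves, the following Python sketch pairs each loop step with a pullback (a cotangent transformer) and composes the pullbacks in reverse once the loop stops. It is not the paper's categorical construction: the names `chad_iterate` and `square_until_large`, the `"continue"`/`"done"` tags, and the threshold of 100 are all hypothetical choices made for this example.

```python
def chad_iterate(step):
    """Lift a differentiable loop body to a differentiable loop.

    Assumed interface (illustrative only): step(x) returns
    (tag, value, pullback), where tag is "continue" or "done" and
    pullback maps a cotangent of value to a cotangent of x.
    """
    def run(x):
        pullbacks = []
        while True:
            tag, x, pb = step(x)
            pullbacks.append(pb)  # record one pullback per iteration
            if tag == "done":
                break

        def pullback(ct):
            # Reverse pass: apply the recorded pullbacks last to first.
            for pb in reversed(pullbacks):
                ct = pb(ct)
            return ct

        return x, pullback

    return run


def square_until_large(x):
    """Hypothetical loop body: keep squaring until x exceeds 100."""
    if x > 100.0:
        return "done", x, (lambda ct: ct)
    return "continue", x * x, (lambda ct: 2.0 * x * ct)


loop = chad_iterate(square_until_large)
value, pullback = loop(3.0)
gradient = pullback(1.0)
```

Starting from 3.0 the loop squares three times, so `value` is 3 to the power 8 and `gradient` is the derivative of that power function at 3. If the loop body never returns `"done"`, `run` does not terminate, which mirrors the partiality the abstract refers to.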

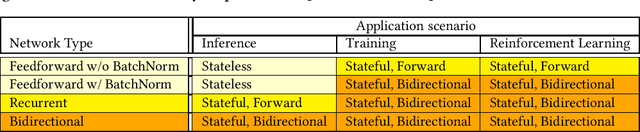

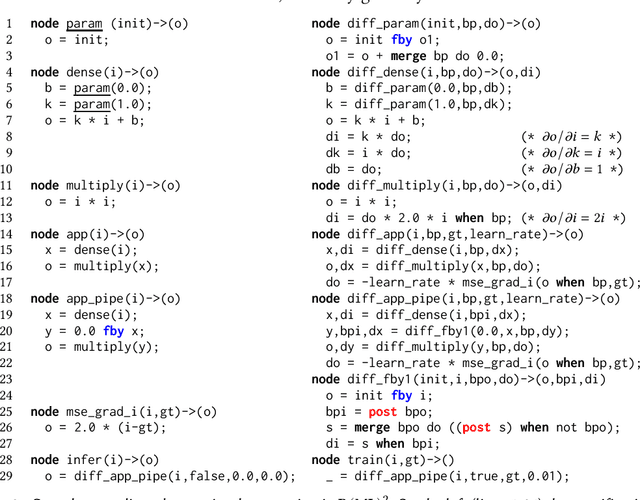

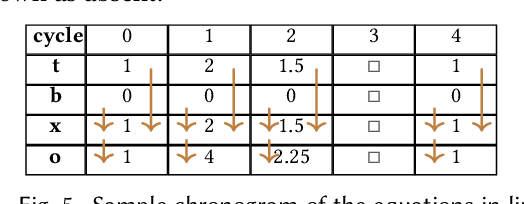

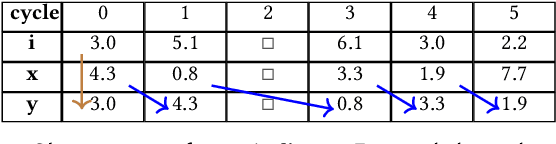

Bidirectional Reactive Programming for Machine Learning

Nov 28, 2023

Abstract: Reactive languages are dedicated to the programming of systems which interact continuously and concurrently with their environment. Values take the form of unbounded streams modeling the (discrete) passing of time or the sequence of concurrent interactions. While conventional reactivity models recurrences forward in time, we introduce a symmetric reactive construct enabling backward recurrences. Constraints on the latter make the implementation practical. Machine Learning (ML) systems provide numerous motivations for all of this: we demonstrate that reverse-mode automatic differentiation, backpropagation, batch normalization, bidirectional recurrent neural networks, training, and reinforcement learning algorithms are all naturally captured as bidirectional reactive programs.

Smart Choices and the Selection Monad

Jul 17, 2020

Abstract: Describing systems in terms of choices and of the resulting costs and rewards offers the promise of freeing algorithm designers and programmers from specifying how those choices should be made; in implementations, the choices can be realized by optimization techniques and, increasingly, by machine learning methods. We study this approach from a programming-language perspective. We define two small languages that support decision-making abstractions: one with choices and rewards, and the other additionally with probabilities. We give both operational and denotational semantics. The operational semantics combine the usual semantics of standard constructs with optimization over a space of possible executions. The denotational semantics, which are compositional and can also be viewed as an implementation by translation to a simpler language, rely on the selection monad. We establish that the two semantics coincide in both cases.
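For readers unfamiliar with the selection monad, the following Python sketch shows its standard unit and bind, where a selection function takes a reward function and returns the chosen value. It is only an illustration under stated assumptions, not the languages or semantics defined in the paper: the helper names `argmax_over`, `unit`, and `bind`, and the example reward, are hypothetical.

```python
def argmax_over(options):
    """Selection function that picks the option with the highest reward."""
    def select(reward):
        return max(options, key=reward)
    return select


def unit(x):
    """Selection function that ignores the reward and always returns x."""
    return lambda reward: x


def bind(sel, f):
    """Sequence a choice `sel` with a continuation `f` that returns a
    selection function for each chosen value."""
    def select(reward):
        def outcome(a):
            # Final result if `a` is chosen and later choices are optimal.
            return f(a)(reward)
        # Choose `a` by looking ahead at the reward of its final outcome.
        best = sel(lambda a: reward(outcome(a)))
        return outcome(best)
    return select


# Two successive choices; the program never says how to make them.
program = bind(
    argmax_over([0, 1, 2]),
    lambda x: bind(argmax_over([0, 1, 2]), lambda y: unit((x, y))),
)


def reward(pair):
    # Hypothetical reward with a unique maximum at (1, 2).
    x, y = pair
    return -((x - 1) ** 2) - (y - 2) ** 2


best_pair = program(reward)
```

Here `program` only states which choices exist; the reward supplied at the end determines how they are resolved, so `best_pair` is the pair that maximizes `reward`.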
