Giosuè Lo Bosco

The GECo algorithm for Graph Neural Networks Explanation

Nov 18, 2024

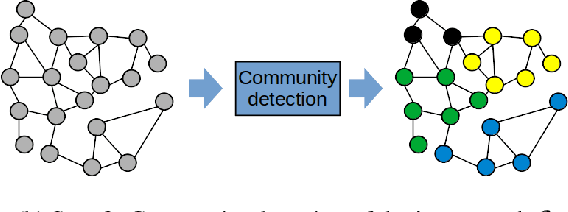

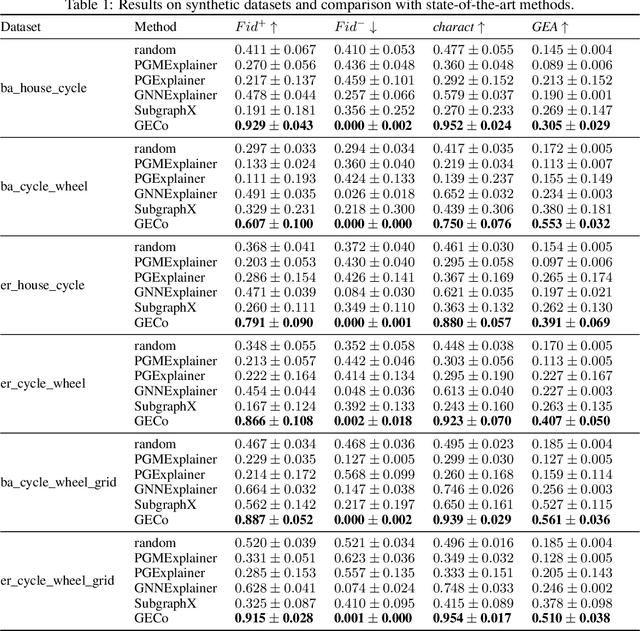

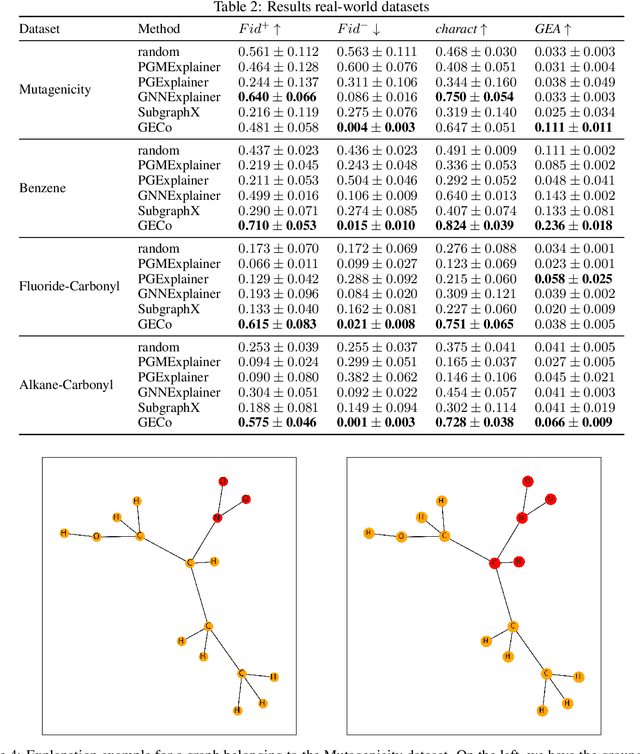

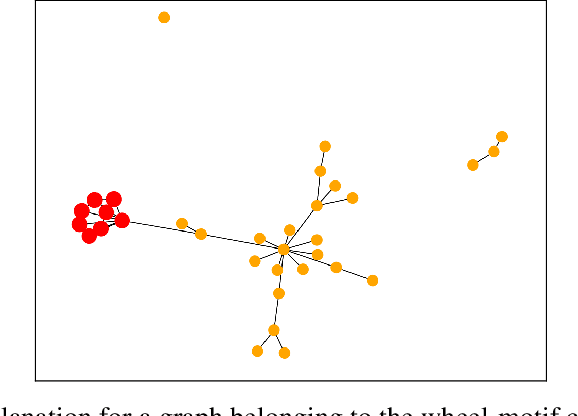

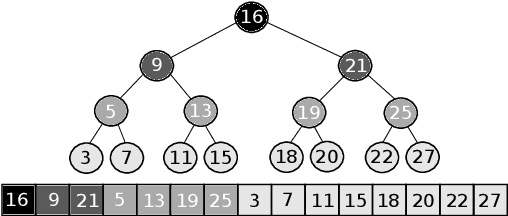

Abstract: Graph Neural Networks (GNNs) are powerful models that can manage complex data sources and their interconnection links. One of the main drawbacks of GNNs is their lack of interpretability, which limits their application in sensitive fields. In this paper, we introduce a new methodology involving graph communities to address the interpretability of graph classification problems. The proposed method, called GECo, exploits the idea that, since a community is a densely connected subset of graph nodes, this property should play a role in graph classification. This is reasonable, especially considering message passing, the basic mechanism of GNNs. GECo analyzes the contribution of each community in the graph to the classification result, building a mask that highlights the relevant graph structures. GECo is tested for Graph Convolutional Networks on six artificial and four real-world graph datasets and is compared to the main explainability methods, such as PGMExplainer, PGExplainer, GNNExplainer, and SubgraphX, using four different metrics. The obtained results outperform the other methods on the artificial graph datasets and on most of the real-world datasets.
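The community-based masking idea described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function name `community_mask`, the `predict_proba` callable standing in for a trained GNN, the pre-computed `communities` input, and the choice of the mean score as a threshold are all assumptions made for illustration.

```python
from statistics import mean

def community_mask(nodes, communities, predict_proba, target_class):
    """Mark nodes whose community scores at or above the mean (assumed rule).

    predict_proba is a hypothetical stand-in for a trained graph classifier:
    it takes a set of nodes (the induced subgraph) and returns a list of
    class probabilities.
    """
    # Score each community by the probability the model assigns to the
    # target class when it sees only that community.
    scores = [predict_proba(set(c))[target_class] for c in communities]
    threshold = mean(scores)  # assumption: mean score used as the cut-off
    relevant = set()
    for community, score in zip(communities, scores):
        if score >= threshold:
            relevant |= set(community)
    mask = {node: node in relevant for node in nodes}
    return mask, scores

# Toy stand-in model: class 1 probability is the fraction of nodes
# that belong to a hypothetical "motif" set.
MOTIF = {0, 1, 2}

def toy_predict_proba(members):
    p = len(members & MOTIF) / len(members)
    return [1.0 - p, p]

mask, scores = community_mask(
    nodes=range(6),
    communities=[[0, 1, 2], [3, 4, 5]],
    predict_proba=toy_predict_proba,
    target_class=1,
)
```

In this toy setting only the community that overlaps the motif is kept in the mask. In practice the communities would come from a community detection algorithm and the scores from the trained network.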

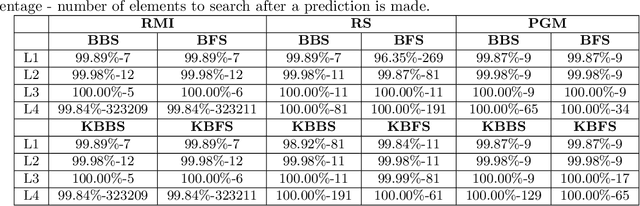

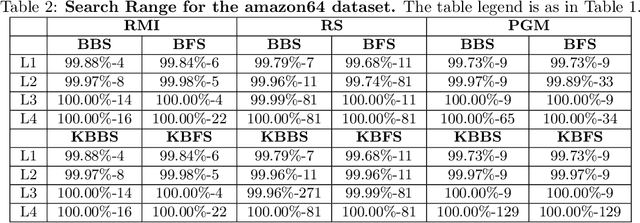

Standard Vs Uniform Binary Search and Their Variants in Learned Static Indexing: The Case of the Searching on Sorted Data Benchmarking Software Platform

Jan 05, 2022

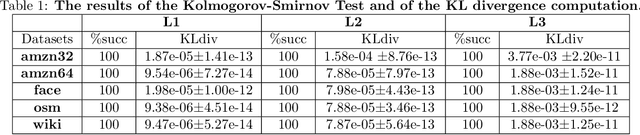

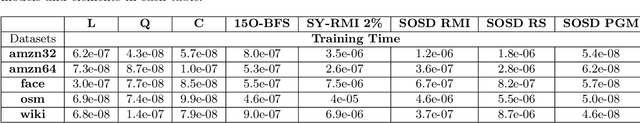

Abstract: Searching on Sorted Data (SOSD, in short) is a highly engineered software platform for benchmarking Learned Indexes, a novel and quite effective proposal for searching a sorted table by combining Machine Learning techniques with classic Algorithms. In this platform and in the related benchmarking experiments, following a natural and intuitive choice, the final search stage is performed via the Standard (textbook) Binary Search procedure. However, recent studies that do not use Machine Learning predictions indicate that Uniform Binary Search, streamlined to avoid "branching" in the main loop, outperforms its Standard counterpart when the table to be searched is relatively small, e.g., fitting in L1 or L2 cache. Analogous results hold for k-ary Search, even on large tables. One would expect analogous behaviour within Learned Indexes. Via a set of extensive experiments, coherent with the State of the Art, we show that for Learned Indexes, and as far as the SOSD software is concerned, the Standard routine (either Binary or k-ary Search) is superior to the Uniform one across all the internal memory levels. This fact provides a quantitative justification of the natural choice made so far. Our experiments also indicate that Uniform Binary and k-ary Search can be advantageous for saving space in Learned Indexes while still granting good time performance. Our findings are of methodological relevance for this novel and fast-growing area and are informative to practitioners interested in using Learned Indexes in application domains such as Databases and Search Engines.
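To make the contrast between the two final-stage routines concrete, here is a minimal Python sketch. It is not the SOSD code: the function names are hypothetical, both routines return the lower bound (index of the first element not smaller than the key), and the uniform variant follows the commonly used halving formulation. In Python the conditional expression is not actually branch-free; it only mirrors the loop structure that a C or C++ compiler can typically turn into a conditional move.

```python
def standard_binary_search(table, key):
    """Textbook lower-bound binary search with an if/else in the loop."""
    lo, hi = 0, len(table)
    while lo < hi:
        mid = (lo + hi) // 2
        if table[mid] < key:
            lo = mid + 1
        else:
            hi = mid
    return lo

def uniform_binary_search(table, key):
    """Lower-bound search whose loop only updates a base offset.

    The number of iterations depends only on len(table), not on the key.
    """
    n = len(table)
    if n == 0:
        return 0
    base = 0
    while n > 1:
        half = n // 2
        # Single conditional update; no lo/hi bookkeeping.
        base = base + half if table[base + half] < key else base
        n -= half
    return base + (1 if table[base] < key else 0)

table = [1, 3, 5, 7, 9, 11]
positions = [uniform_binary_search(table, k) for k in (0, 5, 6, 12)]
```

In a Learned Index, either routine would be applied only to the small window around the position predicted by the model, rather than to the whole table.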

Learned Sorted Table Search and Static Indexes in Small Space: Methodological and Practical Insights via an Experimental Study

Jul 23, 2021

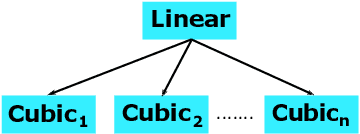

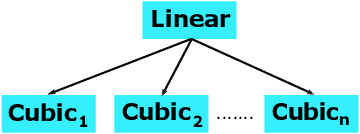

Abstract: Sorted Table Search Procedures are the quintessential query-answering tool and are still very useful, e.g., in Search Engines (Google Chrome). Speeding them up, using little additional space with respect to the table being searched, is still a significant achievement. Static Learned Indexes have been very successful in achieving such a speed-up, but leave open a major question: to what extent can one enjoy the speed-up of Learned Indexes while using constant or nearly constant additional space? By generalizing the experimental methodology of a recent benchmarking study on Learned Indexes, we shed light on this question by considering two scenarios. The first is quite elementary, i.e., textbook code, while the second uses advanced Learned Indexing algorithms and the sophisticated software platforms that support them. Although in both cases one would expect a positive answer, achieving it is not as simple as it seems. Indeed, our extensive set of experiments reveals a complex relationship between query time and model space. The findings regarding this relationship and the corresponding quantitative estimates, across memory levels, can be of interest to algorithm designers and of use to practitioners as well. As an essential part of our research, we introduce two new models that are of interest in their own right. The first is a constant space model that can be seen as a generalization of k-ary search, while the second is a synoptic RMI, in which we can control model space usage.
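For readers unfamiliar with k-ary search, which the constant space model is said to generalize, a minimal sketch follows. It shows plain k-ary search only, not the authors' model; the function name `k_ary_search` and the default `k=3` are illustrative assumptions.

```python
def k_ary_search(table, key, k=3):
    """Lower-bound search that probes up to k - 1 pivots per round.

    Returns the index of the first element not smaller than key.
    With k = 2 this reduces to ordinary binary search.
    """
    if k < 2:
        raise ValueError("k must be at least 2")
    lo, hi = 0, len(table)
    while lo < hi:
        width = hi - lo
        new_lo, new_hi = lo, hi
        for j in range(1, k):
            pivot = lo + (width * j) // k  # always inside [lo, hi)
            if table[pivot] < key:
                new_lo = pivot + 1         # key lies to the right of pivot
            else:
                new_hi = pivot             # key lies at or left of pivot
                break
        lo, hi = new_lo, new_hi
    return lo

table = [2, 4, 4, 8, 16, 32, 64]
positions = [k_ary_search(table, key, k=4) for key in (1, 4, 9, 100)]
```

The routine uses no auxiliary data structure, so its additional space is constant regardless of the table size.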
