Giacomo Lastrucci

ENFORCE: Exact Nonlinear Constrained Learning with Adaptive-depth Neural Projection

Feb 10, 2025

Abstract: Ensuring that neural networks adhere to domain-specific constraints is crucial for addressing safety and ethical concerns while also enhancing prediction accuracy. Although most real-world tasks are nonlinear, existing methods are predominantly limited to affine or convex constraints. We introduce ENFORCE, a neural network architecture that guarantees that predictions satisfy nonlinear constraints exactly. ENFORCE is trained with standard unconstrained gradient-based optimizers (e.g., Adam) and leverages automatic differentiation and local neural projections to enforce any $\mathcal{C}^1$ constraint to arbitrary tolerance $\epsilon$. We build an adaptive-depth neural projection (AdaNP) module that dynamically adjusts its complexity to suit the specific problem and the required tolerance level. ENFORCE guarantees satisfaction of equality constraints that are nonlinear in both the inputs and the outputs of the neural network, with minimal (and adjustable) computational cost.
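The idea of repeating a local projection until a tolerance $\epsilon$ is met can be illustrated with a minimal sketch. This is not the authors' implementation: the constraint `constraint`, its hand-written gradient `constraint_grad`, and the function `adaptive_projection` are hypothetical names chosen for illustration, and the gradient is coded by hand where the abstract describes automatic differentiation. Each step linearizes a single scalar constraint around the current prediction and applies the minimum-norm correction that satisfies the linearized constraint; the number of steps taken plays the role of the adaptive depth.

```python
def constraint(x, y):
    # Hypothetical equality constraint c(x, y) = 0, nonlinear in the output y
    # and dependent on the input x: y0^2 + y1^2 = x.
    return y[0] ** 2 + y[1] ** 2 - x


def constraint_grad(x, y):
    # Gradient of c with respect to y (written by hand for this sketch).
    return [2.0 * y[0], 2.0 * y[1]]


def adaptive_projection(x, y, eps=1e-10, max_depth=50):
    """Repeat local projections until |c(x, y)| <= eps or max_depth is hit."""
    y = list(y)
    depth = 0
    while abs(constraint(x, y)) > eps and depth < max_depth:
        c = constraint(x, y)
        g = constraint_grad(x, y)
        gg = sum(gi * gi for gi in g)  # assumed nonzero in this sketch
        # Minimum-norm step onto the linearized constraint c + g . dy = 0.
        y = [yi - c * gi / gg for yi, gi in zip(y, g)]
        depth += 1
    return y, depth


# Raw "prediction" that violates the constraint for input x = 4.
y_proj, depth_used = adaptive_projection(4.0, [1.5, 1.0])
```

A tighter `eps` simply costs more projection steps, which is one way to read the "adjustable" computational cost mentioned in the abstract.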

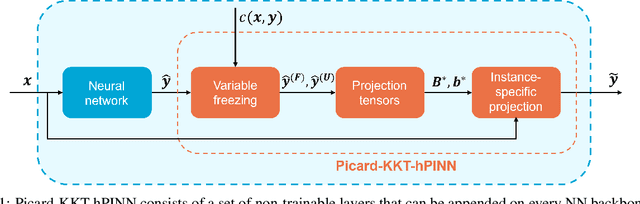

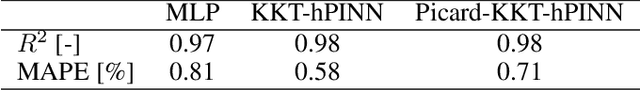

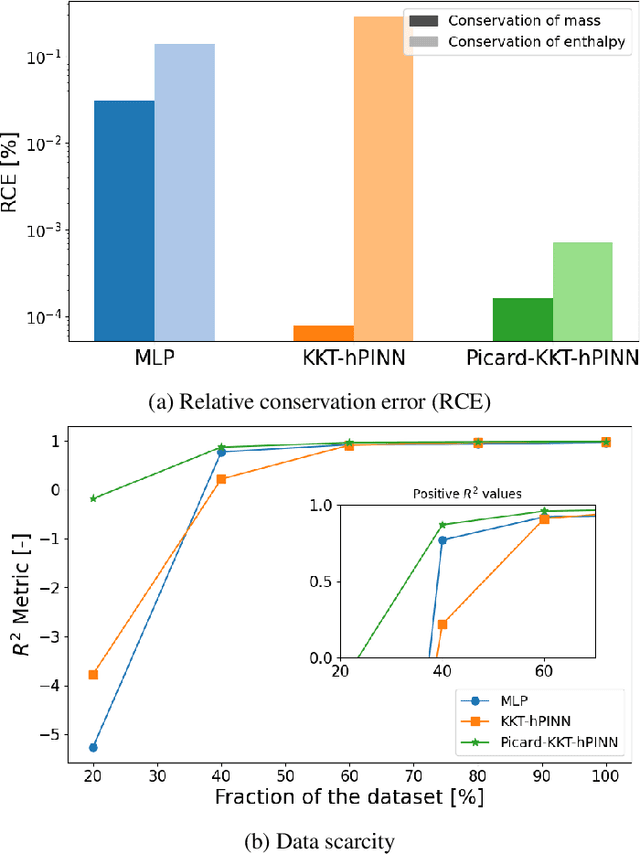

Picard-KKT-hPINN: Enforcing Nonlinear Enthalpy Balances for Physically Consistent Neural Networks

Jan 29, 2025

Abstract: Neural networks are widely used as surrogate models, but they do not guarantee physically consistent predictions, which prevents their adoption in various applications. We propose a method that forces NNs to satisfy physical laws that are nonlinear in nature, such as enthalpy balances. Our approach, inspired by the Picard successive approximations method, aims to enforce multiplicatively separable constraints by sequentially freezing and projecting a set of the participating variables. We demonstrate our Picard-KKT-hPINN for surrogate modeling of a catalytic packed bed reactor for methanol synthesis. Our results show that the method efficiently enforces nonlinear enthalpy balances and linear atomic balances at machine-level precision. Additionally, we show that enforcing conservation laws can improve accuracy in data-scarce conditions compared to a vanilla multilayer perceptron.
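A single freeze-and-project step for a multiplicatively separable constraint can be sketched as follows. This is an illustrative assumption, not the authors' algorithm: the constraint form $\sum_i F_i h_i = E$ (flows times specific enthalpies equal to a fixed total), the variable names, and the function `freeze_and_project` are all hypothetical. Freezing `h` makes the constraint linear in `F`, so the closest point satisfying it has a closed form given by the KKT conditions of the least-squares projection.

```python
def freeze_and_project(F, h, E):
    """Freeze h, then project F onto the hyperplane {F : sum(F_i * h_i) = E}.

    With h held fixed the constraint is linear in F, so the minimum-norm
    correction is F - r * h / (h . h), where r is the current residual.
    """
    residual = sum(f * hi for f, hi in zip(F, h)) - E
    hh = sum(hi * hi for hi in h)  # assumed nonzero in this sketch
    return [f - residual * hi / hh for f, hi in zip(F, h)]


# Hypothetical raw predictions that violate the balance.
F_raw = [1.0, 2.0, 3.0]
h_frozen = [10.0, 20.0, 5.0]
E_total = 60.0

F_proj = freeze_and_project(F_raw, h_frozen, E_total)
balance = sum(f * hi for f, hi in zip(F_proj, h_frozen))
```

In a sequential scheme, the roles would then be swapped (freeze one group of variables, project the other), which is the part this sketch leaves out.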
