George Barmpalias

Compression of enumerations and gain

Apr 06, 2023

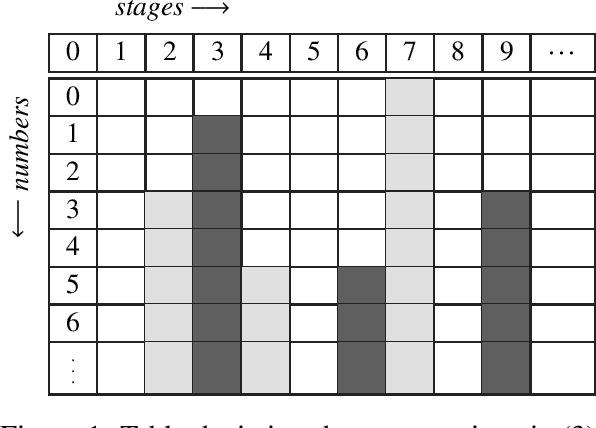

Abstract: We study the compressibility of enumerations, and its role in the relative Kolmogorov complexity of computably enumerable sets, with respect to density. With respect to a strong and a weak form of compression, we examine the gain: the amount of auxiliary information embedded in the compressed enumeration. Strong compression and weak gainless compression are shown for any computably enumerable set, and a positional game is studied toward understanding strong gainless compression.

Algorithmic learning of probability distributions from random data in the limit

Mar 13, 2018

Abstract: We study the problem of identifying a probability distribution for some given randomly sampled data in the limit, in the context of algorithmic learning theory as proposed recently by Vitányi and Chater. We show that there exists a computable partial learner for the computable probability measures, while by Bienvenu, Monin and Shen it is known that there is no computable learner for the computable probability measures. Our main result is the characterization of the oracles that compute explanatory learners for the computable (continuous) probability measures as the high oracles. This provides an analogue of a well-known result of Adleman and Blum in the context of learning computable probability distributions. We also discuss related learning notions such as behaviorally correct learning and other variations of explanatory learning, in the context of learning probability distributions from data.
