Ge Han

DeepStego: Protecting Intellectual Property of Deep Neural Networks by Steganography

Mar 13, 2019

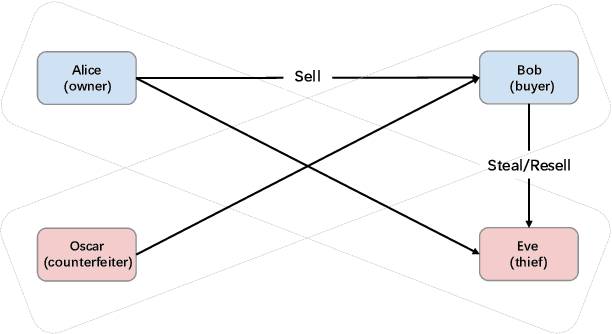

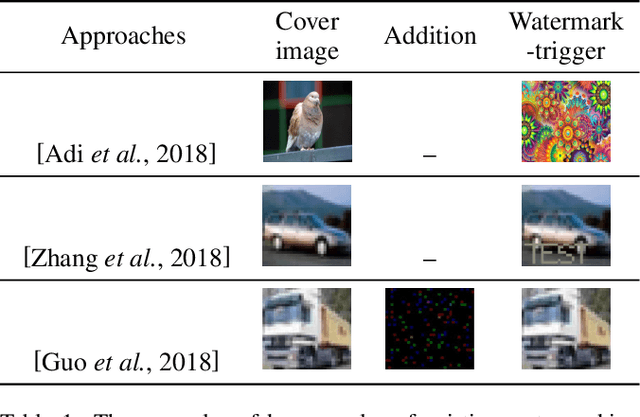

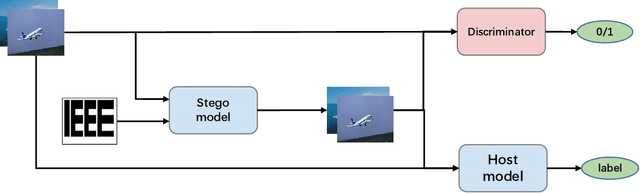

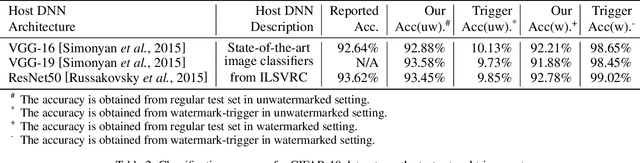

Abstract: Deep neural networks (DNNs) have shown great success in various challenging tasks. Training these networks is computationally expensive and requires vast amounts of training data. It is therefore necessary to design a technique that protects the intellectual property (IP) of a model and allows its ownership to be verified externally in a black-box way. Previous studies either fail to meet the black-box requirement or do not address several security and legal problems. In this paper, we propose a novel steganographic scheme for watermarking deep neural networks during training. This is the first feasible scheme for protecting DNNs that perfectly solves the problems of safety and legality. We demonstrate experimentally that such a watermark has no obvious influence on the main task the model was designed for and can successfully verify ownership of the model. Furthermore, we show that the scheme is fairly robust by simulating it in a real situation.

Learning Symmetric and Asymmetric Steganography via Adversarial Training

Mar 13, 2019

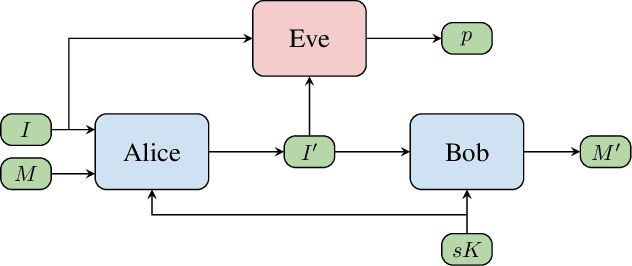

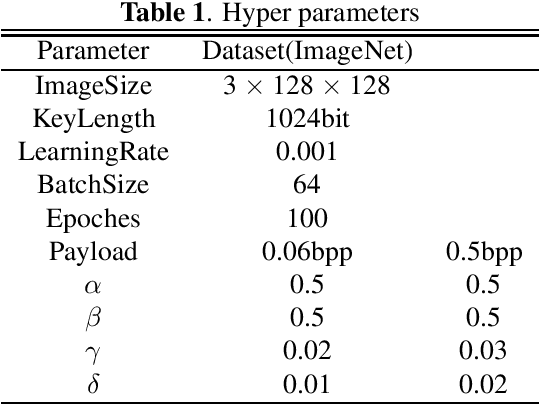

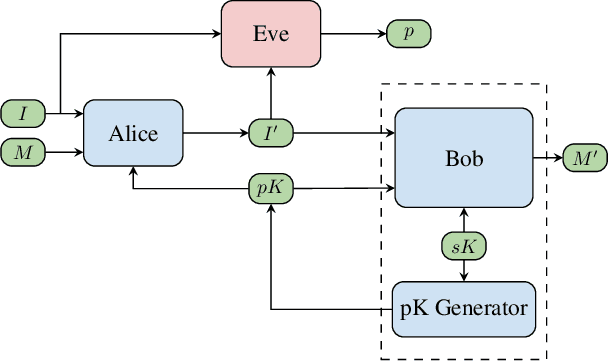

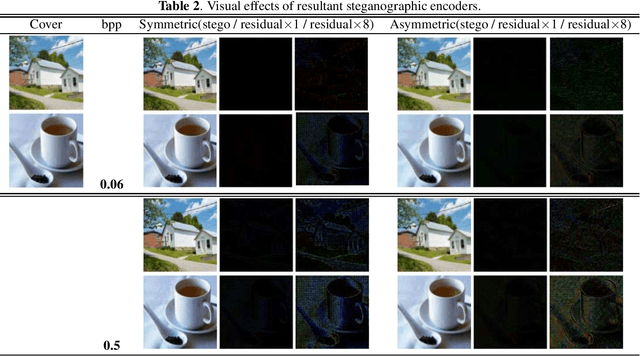

Abstract: Steganography refers to the art of concealing secret messages within various media carriers so that an eavesdropper is unable to detect the presence and content of the hidden messages. In this paper, we propose a novel key-dependent steganographic scheme that achieves steganographic objectives with adversarial training. Symmetric (secret-key) and asymmetric (public-key) steganographic schemes are proposed separately, and each is successfully designed and implemented. We show that the encodings produced by our scheme improve invisibility by 20% over previous deep-learning-based work and achieve undetectability 25% better than classic steganographic algorithms. Finally, we simulate our scheme in a real situation, where the decoder recovers the original message with an accuracy of more than 98%.
